
Meerbeek, B., Saerbeck, M., & Bartneck, C. (2009). Iterative Design Process for Robots With Personality. Proceedings of the AISB2009 Symposium on New Frontiers in Human-Robot Interaction, Edinburgh, pp. 94-101.

Iterative design process for robots with personality

1Philips Research

HTC 34 WB51, 5656AE, Eindhoven, NL

{bernt.meerbeek,martin.saerbeck}@philips.com

2Department of Industrial Design

Eindhoven University of Technology

Den Dolech 2, 5600MB Eindhoven, NL

christoph@bartneck.de

Abstract - Previous research has shown that autonomous robots tend to induce the perception of a personality through their behavior and appearance. It has therefore been suggested that the personality of a robot can be used as a design guideline. A well-defined and clearly communicated personality can serve as a mental model of the robot and facilitate the interaction. From a design perspective, this raises two questions: what kind of personality should be designed for a robot, and how should this personality be expressed? In this paper, we describe a process to design and evaluate personality and expressions for products. We applied this process to design the personality and expressions in the behavior of a domestic robot.

Keywords: robot, design, process

1. Introduction

1.1 Context

Traditionally, robotic technology has been used in industrial settings, for example in car manufacturing. However, more and more robots are appearing in the domestic domain. In the near future, robots will provide services directly to people, at our workplaces and in our homes [1]. Application areas include household tasks (e.g. vacuum cleaning), security tasks, entertainment (e.g. toys), and education. While nowadays a technical explanation of how an appliance works is given to the user, this will become increasingly difficult with new autonomous robots. We cannot expect users to learn about sensors, actuators, and control architectures. Instead, we need to convey a mental model that helps users make sense of the robot’s behaviors and understand which actions are needed from their side. The appropriate design of the interaction between humans and robots will be a crucial factor for the understanding and acceptance of new robotic products [2]. A promising approach in this field of human-robot interaction [3] research is to equip robots with lifelike and social characteristics. Fong, Nourbakhsh, and Dautenhahn [4] present an overview of what they call socially interactive robots, i.e. robots that exhibit human-like social characteristics. Some examples of these characteristics are the ability to express and perceive emotions, to communicate with natural language, to establish and maintain social relationships, to use natural cues in verbal and non-verbal behavior, and to exhibit distinctive personality and character.

1.2 Animacy and anthropomorphism

It is known from earlier research [5],[6],[7] that robots induce the perception of being life-like and having a certain personality through their appearance and behavior. Heider and Simmel [8] demonstrated as early as 1944 that people attribute motivations, intentions, and goals to simple inanimate objects, based solely on the pattern of their movements. Tremoulet and Feldman [9] showed that even the motion of a single featureless dot is enough to convey the impression of animacy. They concluded that animacy is inferred when observable aspects of the display cannot easily be explained as ordinary inanimate motion. Recent field tests, such as the ethnographic study with the robotic vacuum cleaner Roomba conducted by Forlizzi et al. [1], revealed that the mere use of an autonomous robot in a social environment (i.e. the home) had an impact on the social roles and cleaning habits of the participants, even though the robot was not specifically designed for social interaction.

The cognitive process of attributing life-like features is also known as anthropomorphism (when one attributes human-like characteristics) or zoomorphism (when one attributes animal-like characteristics). One of the most debated topics is whether designers should use anthropomorphic features in robots. For example, Ishiguro argues that robots that imitate humans as closely as possible serve as an ideal interface for humans [11]. Duffy, on the other hand, puts this view in perspective, arguing that anthropomorphic features have to be carefully balanced against the available technology so as not to raise expectations that cannot be met [12]. He stresses that the goal of using anthropomorphic features is to make the interface more intuitive and easy to use, not to copy a human. Up to now, the question of to what extent anthropomorphic features should be incorporated remains unanswered. In line with Duffy, we believe that anthropomorphic or life-like features should be carefully designed and should aim at making the interaction with the robot more intuitive, pleasant, and easy. In the next section, we explain how the concept of personality can be helpful in designing appropriate life-like features in a robot.

1.3 Personality

Reeves and Nass [6] have demonstrated in several experiments that users are naturally biased to ascribe certain personality traits to machines, PCs, and other types of media. For a product designer, it is therefore important to understand how these perceptions of personality influence the interaction and how a coherent personality can be utilized in a product.

Personality is an extensively studied concept in psychology. As McAdams and Pals [13] point out, there is no “comprehensive and integrative framework for understanding the whole person”. Carver and Scheier [14] give an impression of the diversity of research on personality. They present an overview of personality theories categorized along seven perspectives: the biological, psychoanalytic, neo-analytic, learning, cognitive self-regulation, phenomenological, and dispositional perspectives. In broad outline, these theories agree on the general characteristics of personality, among others that personality is tied to the physical body; helps to determine how the person relates to the world; shows up in patterns (recurrent and consistent); and is displayed in many ways (in behavior, thoughts, and feelings).

As our work concentrates on the expression of personality as a pattern of traits, personality research on dispositional traits was considered most relevant. This dispositional perspective is based on the idea that people have relatively stable qualities (or traits) that are displayed in diverse settings. Dryer [15] stresses three focus points for maintaining the coherence of a character's personality: (1) cohesiveness of behavior, (2) temporal stability, and (3) cross-situation generality. A combination of several trait theories that focused on labeling and measuring people’s personality based on the terms of everyday language (e.g. helpful, assertive, impulsive, etc.) led to an emerging consensus on the dimensions of personality in the form of the Big-Five theory.

The Big-Five is currently the theory that is supported by the most empirical evidence, and it is generally accepted [13]. It describes personality in five dimensions: extraversion, agreeableness, conscientiousness, neuroticism, and openness to new experiences. Table 1 provides an overview of the five dimensions and some of their facets. These facets indicate the scope of each dimension and the variety of aspects within a dimension. Recent studies have used personality theories such as the Big-Five to assess people’s perceptions of robot personality (e.g. [16][17]). However, the Big-Five theory of personality can also be used as a framework to describe and design the personality of products, and in particular of robots. Norman [5] describes personality as ‘a form of conceptual model, for it channels behavior, beliefs, and intentions into a cohesive, consistent set of behaviors.’ Although he admits this is an oversimplification of the complex field of human personality, the statement indicates that deliberately equipping a robot with a personality helps to provide people with a good model and a good understanding of its behavior.

| Personality dimension | Personality facets |
|---|---|
| Extraversion | warmth, gregariousness, assertiveness, activity, excitement-seeking, positive emotion |
| Agreeableness | trust, straightforwardness, altruism, compliance, modesty, tender-mindedness |
| Conscientiousness | competence, order, dutifulness, achievement striving, self-discipline, deliberation |
| Openness | fantasy, aesthetics, feelings, actions, ideas, values |
| Neuroticism | anxiety, angry hostility, depression, self-consciousness, impulsiveness, vulnerability |

Table 1 Five-factor model: dimensions and facets

1.4 Research questions

In sum, appropriate design of the human-robot interaction is an increasingly important research topic as robots move into domestic settings. The important questions that arise when explicitly designing a personality for a robot in a given application are: what kind of personality is appropriate for the robot and facilitates the interaction, and how can this personality be expressed in the behavior of the product? Our research investigates how personality can be addressed in the design process.

2. Previous work

Careful design of robotic behavior appears to be a crucial factor for the acceptance and success of a robot application. However, up to now there is no consensus on general design rules for character design, nor is there a unified design process. Several approaches to designing personalities for expressive autonomous products have been reported. In this section we summarize some of the existing approaches relevant to character design. Traditionally, there have been three main perspectives on designing the expressive behavior of a robotic product: (1) technology driven, (2) artistic design, and (3) user centered. We illustrate each of these approaches next.

2.1 Technology driven

When the first robots were constructed, the behavior was fully determined from a technological, functional point of view. The behavior was implicitly implemented by engineers, who had the technological insight to control the hardware. Hence, the behavior resulted from functional requirements, such as navigating via the shortest path to a certain location, as well as from hardware constraints, such as maximum speed or correction movements for compensating hardware inaccuracies. Several architectures for designing the behavior of interactive robotic characters have been proposed [17][19]. In the subsumption architecture proposed by Brooks [20], the overall behavior of the robot is explicitly an emergent feature, composed from simpler basic actions.

How the user perceives a certain behavior was only taken into account later. For example, Kawamura et al. [21] stressed the necessity for ease of use of a service robot, but based multiple design decisions on the technical constraints of a particular robotic platform. Loyall [22] presents a complete architecture to construct autonomous and believable agents that encompasses, among other things, a specialized language to describe the behavior of believable agents.

Neubauer [23] takes a more analytical approach to designing artificial personalities. He explores the application of Carl Jung's theory of personality in the design of artificial entities such as chat bots or avatars on the web. He classifies personalities according to the classification scheme of Jung and categorizes them according to which personality types are implementable with a computer, given our current understanding of artificial life.

The main characteristic of these approaches is the focus on specific technical implementations. Even though the underlying technology is an essential factor for the feasibility of a robotic application, they tend to narrow the design space by technical limitations, rather than by user insights.

2.2 Artistic design

In contrast to a technical approach, the artistic approach is mainly concerned with the expression of a behavior. The focus is not on the functionality of the robot, but on how people perceive the behavior. The underlying idea of conveying messages through expressive behavior is borrowed from the field of movies and animations. The most cited set of design guidelines is the 12 design principles of Disney Animation by Thomas and Johnson [24], listed in Table 2.

| Principles 1-6 | Principles 7-12 |
|---|---|
| 1. Squash and Stretch | 7. Arcs |
| 2. Anticipation | 8. Secondary Action |
| 3. Staging | 9. Timing |
| 4. Straight Ahead Action and Pose to Pose | 10. Exaggeration |
| 5. Follow Through and Overlapping Action | 11. Solid Drawing |
| 6. Slow In and Slow Out | 12. Appeal |

Table 2: 12 Animation principles of Disney animations by Thomas and Johnson

The design principles serve as a tool that focuses on creating believable expressions and behavior in short sequences of a movie. The overall personality of the character is determined by a central movie script. Van Breemen [25] was one of the first to apply animation technology to the development of robots. He illustrated that by simply adhering to some of the animation principles, the behavior of a robot appears more life-like.

In general, however, approaching the design of robotic behavior from an artistic point of view requires good artistic skills on the part of the designer. Several guidelines have therefore been developed that support the designer in making and justifying choices, but they do not take away the need for creativity and inspiration. Dautenhahn, for example, refers to comic design and identifies two design dimensions: (1) universal design and (2) abstract design [26]. On the first dimension, the designer abstracts out universal features of a behavior or an expression, so that people can recognize the character and identify with it. On the second dimension, the designer has artistic freedom to add specific features that can best be described as an artistic style.

2.3 User centered

In the process of investigating design rules for interactive robotic characters, many of the design principles have been borrowed from the field of human-computer interaction [27]. The user-centered approach is characterized by a strong focus on the user. The key principle is an iterative design cycle to evaluate and refine the interface. One of the most cited references for design principles in human-computer interaction is Gould et al. [28]. The three proposed principles are: (1) early focus on users and tasks, (2) empirical measurement, and (3) iterative design. The first principle focuses on understanding the user and the task through close contact with the user. One suggestion for learning from users is to use interviews. These initial interviews should be conducted before the first design prototype is made. The second principle demands careful investigation of how people interact with the device at hand. The authors warn designers not merely to present a system to the users, but also to measure usability data. The assumption behind the third principle is that it is almost impossible to get a system interface right the first time, which is why an iterative design cycle is promoted. Lately, however, these principles have been the target of criticism [29]. A main point of the argument was that the success of their design could not be attributed to these principles but was founded on more general design principles.

Many more user-centered design approaches have been reported in the literature. For example, Ljungblad et al. [30] surveyed participants who own exotic pets to investigate what kinds of forms and roles of characters people are interested in. They used the concept of personas [31] to guide their design process for creating personalities for artificial agents. From the interviews they generalized use cases and scenarios, pointing out that the interviewed persons are not necessarily the intended users of the system.

The notion of designing and validating scenarios rather than focusing on personalities for character design also proved to be useful for designing a personality for the personal robot PaPeRo [32]. The scenarios construct a basic set of interactions with the user, placed in the context of an application. During validation the authors found that users attributed different personalities and roles to the robots because of their different colors. For example, the blue PaPeRo was perceived as the leader of the other PaPeRos, and the yellow one was perceived as the youngest. This feedback was taken into account by changing the behavior to enhance these role perceptions, e.g. by changing the utterances of the robot. In this way, a personality for the robots could be designed gradually.

Despite the focus on the user, the creative element of the designer still plays an important role in the design process. Friess examined the real-world practice of a design process and found that during everyday interaction not only usability evidence is used to defend design decisions, but very often also pseudo-evidence and simple common sense [33]. Höök [34] proposes a user-centered process and applies it to three case studies. She investigates how affective user interfaces can be designed and how they can be evaluated. She criticizes formal approaches to user studies, since they do not capture the fine-grained facets of personality and affective design. She proposes a two-layered design approach. The first level focuses on usability, by verifying whether basic design intentions such as emotional expressions are understood by the user. On the second level, it is verified whether affective aspects in the design contribute to the experience of the user. The user becomes an integral part of the design process, but instead of a formal evaluation of the system, the user should be able to give a broad interpretation of his or her experience. Furthermore, she points out that traditional user studies search for an average user who does not exist. Instead of generalizing, affective design should focus on how the individual interacts with the system.

3. Personality design process

Although several approaches to design personalities for expressive autonomous products have been proposed, we miss a practical process that integrates a user-centered, artistic, and technical approach to designing personalities. In this section, we describe the process that we followed to design personality and expressions for a domestic robot and propose this as a way to design personality and expressions for autonomous products in general. The process consists of five main steps, namely creating a personality profile, getting inspiration for the expressions, sketching a scenario, visualizing it in 3D animation, and evaluating it using a think-out-loud protocol. The focus of this section will be on the general process, rather than on the application specific results.

3.1 Create a personality profile

In the design process we propose, we use the notion of personality as a central design guideline to create consistent and understandable behavior (mental model), to facilitate natural (social) interaction, and to make the product more appealing. Therefore, the first question that needs to be addressed is what kind of personality should be designed for a robot.

We used a user-centered approach to create a personality profile for the domestic robot. As a starting point, we used the most widely accepted personality model in psychology (“Big-Five”, see section 1). For each personality dimension, we selected several traits (i.e. personality characteristics) to be used as triggers for potential end-users to talk about the desired personality of a product.

Many questionnaires based on the Big-Five are available (both commercial and non-commercial), which typically consist of a large number of items [35],[36],[37]. Several authors have used single adjectives instead of phrases as personality descriptors [38], [39]. All questionnaires are used to assess one’s personality. However, in our case we want to use the items to get people to talk about particular aspects of the product personality.

We decided to select a subset of traits from all the questionnaires that we reviewed. We selected those items that we expected to be useful triggers for obtaining qualitative feedback from users on the desired personality for the robot, given the applications and tasks of the domestic robot. Furthermore, we made sure that we selected at least two positive and two negative markers for each of the five dimensions. In total, we selected 21 items, since using more items would lead to an unacceptably long session with our participants.
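
The selection constraint described above (at least two positive and two negative markers per dimension) can be checked mechanically. The following is a minimal sketch, not the procedure used in the study: the function name, the `(trait, dimension, pole)` tuple layout, and the sample items are all hypothetical, apart from the trait "polite", which the paper associates with agreeableness.

```python
from collections import Counter

BIG_FIVE = ("extraversion", "agreeableness", "conscientiousness",
            "neuroticism", "openness")

def unbalanced_dimensions(items, min_per_pole=2):
    """Return the dimensions that lack enough positive or negative markers.

    items: iterable of (trait, dimension, pole) tuples, pole is "+" or "-".
    """
    counts = Counter((dim, pole) for _trait, dim, pole in items)
    return [dim for dim in BIG_FIVE
            if counts[(dim, "+")] < min_per_pole
            or counts[(dim, "-")] < min_per_pole]

# Hypothetical candidate set covering only one dimension so far.
sample_items = [
    ("polite", "agreeableness", "+"),
    ("helpful", "agreeableness", "+"),
    ("rude", "agreeableness", "-"),
    ("selfish", "agreeableness", "-"),
]

print(unbalanced_dimensions(sample_items))
```

An empty result would indicate that every dimension has the required number of markers at both poles.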

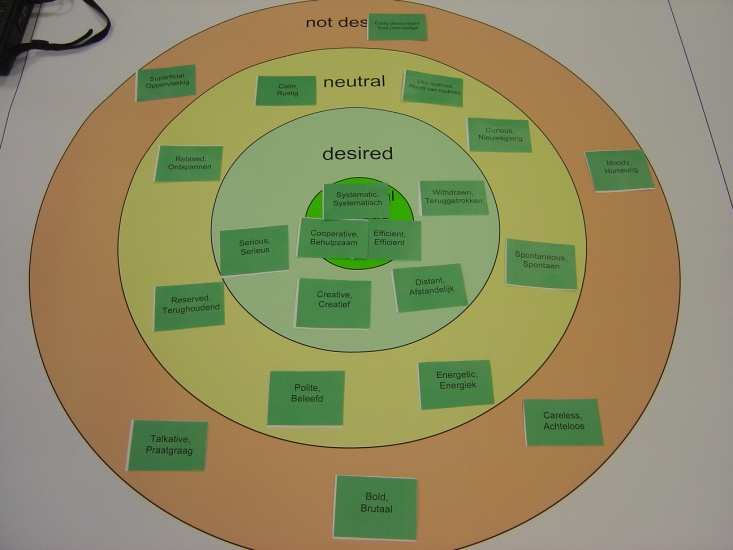

The traits were presented on cards to participants (potential end-users of the domestic robot), who were asked to explain to the interviewer what the characteristics would mean for the behavior of the robot. Next, they were asked to place the cards with personality characteristics on an A0 sheet to indicate how desirable each characteristic was for their preferred robot (see Figure 1). For example, a participant was shown the card with the word ‘polite’ (agreeableness). She explained that this could mean that “when the robot wants to move in the same direction as you do, it will wait and let you go first”, and added: “Yes, that is a desired behavior. I put it close to the center.” This method resulted in detailed qualitative and quantitative feedback on the personality for a domestic robot. For each trait, the percentage of participants that considered it to be either undesired, neutral, desired, or ideal was calculated. Furthermore, the rationale (why something was desired or undesired) was recorded and analyzed. The subjective rationale provided more insight into what kind of robot behavior people prefer and thereby addressed aspects of the application that had not been anticipated. Users also gave many examples of robot behavior for each of the presented personality characteristics. These examples yield insights into how users interpret robotic behavior and can be used to narrow the design space for prototyping behaviors.
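
The per-trait percentages mentioned above amount to a simple tally. The sketch below assumes that each card placement has already been coded into one of the four categories; how positions on the A0 sheet map to categories is not specified in the paper, and the function name and sample placements are hypothetical.

```python
from collections import Counter

CATEGORIES = ("undesired", "neutral", "desired", "ideal")

def desirability_percentages(placements):
    """Compute, per trait, the percentage of participants per category.

    placements: dict mapping a trait to a list of category labels,
    one label per participant.
    """
    result = {}
    for trait, labels in placements.items():
        counts = Counter(labels)
        total = len(labels)
        result[trait] = {
            cat: (100.0 * counts[cat] / total if total else 0.0)
            for cat in CATEGORIES
        }
    return result

# Hypothetical coded placements from four participants for one trait.
sample = {"polite": ["desired", "desired", "ideal", "neutral"]}
print(desirability_percentages(sample)["polite"])
```

Keeping all four categories in the output, including those with zero counts, makes the resulting profiles directly comparable across traits.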

Based on the user feedback, a descriptive personality profile was created. This profile is a narrative description of about 300 to 400 words illustrating the character of the robot. This personality profile can be used in a similar way to personas [31]. While personas are often used to describe users in the target group and communicate this description to a development team, the personality profile describes what (‘who’) the product is. This profile provides a frame of reference for later stages in the development of the product behavior.

Figure 1: User-created personality profile

3.2 Get inspiration for expressions

In order to get ideas and inspiration for designing life-like and expressive behavior for robots, a theatre workshop was organized. During the workshop, four actors from an improvisational theatre group acted out possible behavior of a domestic robot on the basis of the personality profile. We used the workshop to explicitly address the creative and artistic aspect of the design process for a robotic application. Acting out the behavior of a robot makes sense because it helps to build a basic understanding of the personality. This method has proved successful in acting for movie and theatre for decades [40]. This holds especially for emotional expressions, due to the interrelated nature of emotion experience, emotion expression, and readiness for action [41]. Experience and expression reinforce each other. For example, expressing a smile when feeling sad will cheer you up [42]. Translating these results to a design process offers the possibility to design emotions not only top-down but also bottom-up.

The actor workshop was held in a realistic living room setting in the Home area of the ExperienceLab facility at the High Tech Campus in Eindhoven [43]. The session was recorded with four ceiling-mounted cameras and one mobile camera. First, the actors studied the personality profile to identify with the character. After that, the actors showed behavior of the robot (focusing on its movements and sounds, without talking) in particular situations in various ‘exercises’ that are commonly used in improvisational theatre. The moderator of the workshop presented a situation to the actors, e.g. ‘You are being switched on’, ‘You are exploring the room’, or ‘You encounter an obstacle’. An actor who had an idea of how to act in this situation stepped forward and acted out the robot behavior. The scene ended with a buzzer sound made by the moderator, after which the next actor could show his or her expressions. In another exercise, one actor freely acted out some behaviors, while another actor had to give ‘live commentary’ on what he or she was seeing. Some scenes were played individually and some in teams.

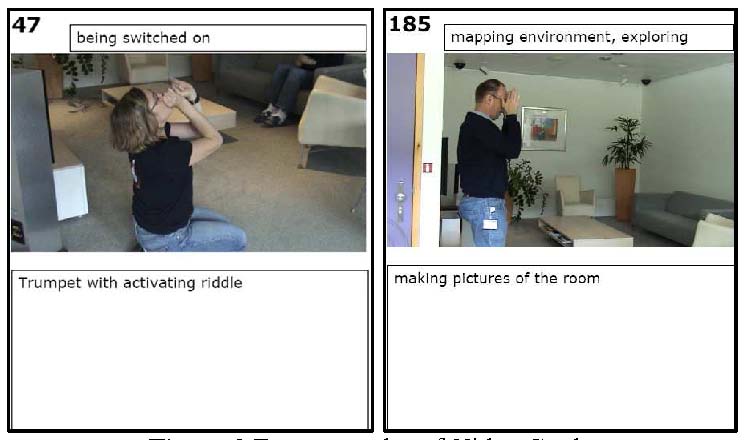

Over 200 scenes were recorded. Video cards ([44]) were used to group, compare, and analyze the large amounts of video material (see Figure 2). The clustered video cards with descriptions of the behaviors and example video clips were discussed in the project team. During these discussions, additional ideas for expressions were generated.

Figure 2: Two examples of Video Cards.

3.3 From actor to robot expressions

The video clips with the expressions of the actors were translated into expressions for the domestic robot. Since human expressions cannot be mapped one-to-one to expressions of the robot, we first abstracted the human expressions before designing concrete expressions for the domestic robot. For example, an actor was looking around and pretending to take pictures of the room to express that he was exploring the environment. This was translated into repetitive turns of the robot to the left and to the right (‘looking around’), flashing white lights (‘camera flash light’), and a click sound (‘picture taken’).
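
One way to record such a translation is as a small set of output primitives, each tagged with the abstracted meaning it is meant to carry. The sketch below is only an illustrative data structure under that assumption; the `Primitive` class, its field names, and the channel labels are hypothetical and do not describe the OPPR framework or the actual robot interface.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Primitive:
    channel: str   # assumed output channels: "motion", "light", "sound"
    action: str    # what the robot does on that channel
    meaning: str   # abstracted element of the actor's expression

# The 'exploring the environment' example from the text, written as primitives.
EXPLORING = (
    Primitive("motion", "turn left and right repeatedly", "looking around"),
    Primitive("light", "flash white lights", "camera flash light"),
    Primitive("sound", "play click", "picture taken"),
)

def channels_used(expression):
    """List the distinct output channels an expression relies on."""
    return sorted({p.channel for p in expression})

print(channels_used(EXPLORING))
```

Listing the channels per expression makes it easy to see which expressions depend on capabilities, such as lights or sound, that a given robot embodiment may not offer.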

The designed expressions were sketched in a written scenario and an animated storyboard. The scenario and storyboard were used to communicate the expressions within the project team. Although presenting animated behavior on paper is difficult, people inside the project team were able to give initial feedback on the cartoon-like drawings showing the robot behavior. The final storyboard served as input for the visualization of the behaviors in 3D animations.

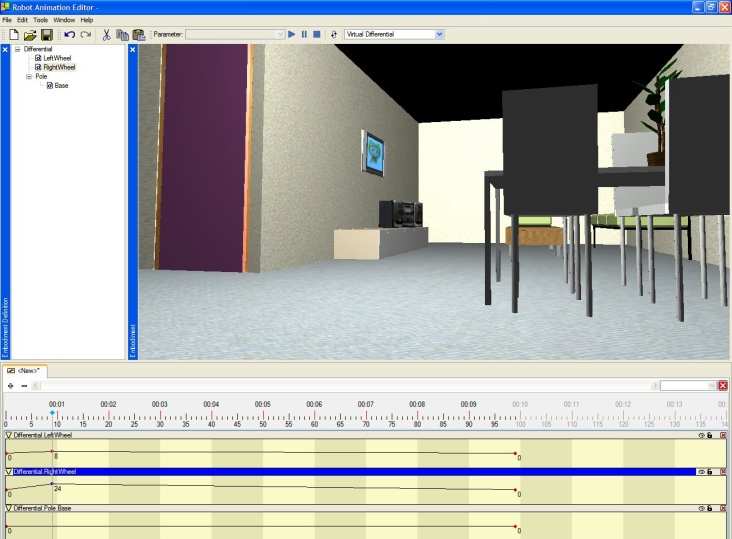

3.4 Visualize in 3D animation

We used virtual 3D graphical simulations for prototyping and testing scenarios of robotic applications. 3D simulations allow the designer to present a concrete instance of a particular behavior or scenario to participants and to gather feedback from users without the hassle of building a fully functional hardware prototype. In product development, designers commonly use sketches and cartoons to visualize certain concepts. While sketches can only show a static representation, a 3D simulation gives an impression of what a dynamic behavior will look like. The timing of movements and behaviors is a crucial element for the meaning of an expression [45].

Nowadays, several software packages are being used to simulate robotic behavior, each with its own advantages and disadvantages [46]. In general, robotic simulations can be approached in two ways: either by simulating the physical properties of the hardware and control software, or by scripting the behavior and allowing for artistic freedom without real-world constraints. From our studies we learned that animation technologies are sufficient for a first impression, because a designer can make a behavior reasonably realistic while focusing on conveying a message. Animation technologies offer the designer the freedom to implement certain behaviors to give a life-like impression of the robot [25]. However, a close resemblance to the real hardware behavior proves useful during later stages in the design process, because behaviors can be ported to the real hardware for experiments. Although simulations give a realistic impression of the behavior, research has shown that there are important subtle differences in perception between virtual and physical embodiment [48].

We used the Open Platform for Personal Robotics (OPPR) framework as described in [49] to develop visual impressions of the robotic behavior in a realistic setting and recorded these in several movie clips. One particular strength of the OPPR framework is that it uses physical simulations for rendering animated behavior. Therefore, the virtual simulation closely resembles the real hardware platform, so that the behaviors can be developed and tested with users and at a later stage reused on a real robot.

Figure 3: Screenshot of Animation Editor

3.5 Evaluate with think-out-loud

The 3D animations were used in a think-out-loud evaluation. The objective of the evaluation was to find out how people perceive the expressions of the robot and obtain user feedback as input for redesign and/or implementation of these behaviors. Questions we were interested in were: How do people interpret the designed behaviors? What behaviors do people consider life-like and why? Why is some behavior preferred over other behavior?

In total, 12 participants were invited to participate individually in a session of approximately one hour. A video clip of about 8 minutes with animations of the expressive robot behavior was shown to the participants. While watching the video clip, participants were asked to continuously describe what they saw, thought, and felt, and why. They were prompted with questions such as: What do you think the robot is doing, or what is it trying to tell you? After the video clip, participants were asked to fill out a questionnaire and were interviewed about their impression of the robot. Audio and video recordings were made of the sessions to allow for verbal protocol analysis. Remarks of the participants were clustered and analyzed per segment of the video clip (typically a segment consisted of one behavior).
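
Grouping remarks per video segment, as described above, can be sketched as a lookup from remark timestamps to segment boundaries. This is an illustration under assumed inputs, not the analysis tooling used in the study: the tuple layouts, segment labels, timestamps, and remarks are all hypothetical placeholders.

```python
from collections import defaultdict

def remarks_per_segment(remarks, segments):
    """Group transcribed remarks by the video segment they fall into.

    remarks:  list of (timestamp_s, participant_id, text)
    segments: list of (start_s, end_s, label), end exclusive
    """
    grouped = defaultdict(list)
    for timestamp, participant, text in remarks:
        for start, end, label in segments:
            if start <= timestamp < end:
                grouped[label].append((participant, text))
                break  # a remark belongs to at most one segment
    return dict(grouped)

# Hypothetical segment boundaries and remarks, for illustration only.
segments = [(0, 30, "switch on"), (30, 75, "explore room")]
remarks = [
    (12, "P1", "it is waking up"),
    (40, "P1", "it looks around"),
    (41, "P2", "taking photos?"),
]
print(remarks_per_segment(remarks, segments))
```

Remarks that fall outside every segment are dropped by this sketch; in practice one might prefer to collect them separately for manual review.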

The think-out-loud evaluation and verbal protocol analysis resulted in valuable qualitative user feedback on the designed expressions. The results clearly indicated which expressions could be easily interpreted and which expressions were appreciated by participants. The results are used as input for the next iteration of designing the expressions.

With the think-out-loud evaluation, we finished the first iteration of our design cycle. We have a clear view of the desired personality for the product and have gathered user feedback on the designed behavior. During the next steps of the design process, the results are used as input to design the final robot behavior. We therefore propose to use an iterative design approach at this stage to refine and evaluate the designed behaviors.

4. Discussion

The design process that we proposed integrates technical, artistic and user-centered approaches to design a personality for a robotic application. We started with a user centered perspective to find out what kind of personality people would like a robot to have. Based on this user knowledge, an artistic perspective was taken and ideas for expressions and behaviors of a robot with the particular personality were created. Later in the process, a more technological perspective was taken and the expressions and behaviors were translated into concrete and implementable solutions for a particular robot embodiment (taking into account its requirements and constraints). In the remainder of this section, we summarize the main lessons learned for each of these steps.

4.1 Lessons: Create a personality profile

In order to gather input from users on the desired personality for a domestic robot, we used cards describing personality traits. Beforehand, we were uncertain whether people would be able to relate the personality traits to a robot. However, people seemed to have little difficulty explaining what certain personality characteristics would mean for the behavior of the robot and whether they would appreciate this or not.

Our approach assumes that the application context for the autonomous product is known, because we expect that the task or role of the robot will have a large effect on the personality preference of users. For example, a surveillance robot is expected to have a different personality than a robot that plays games with the user.

Finally, for our user study we selected a subset of the Big-Five character traits that we believed to match the behavior of a domestic robot. We lacked, however, a more systematic selection procedure.

4.2 Lessons: Get inspirations for expressions

To get inspiration for expressions, we organized a theatre workshop in which actors acted out a domestic robot in various situations. This artistic approach proved useful in inspiring the design of robot behavior. However, we observed that the invited actors, who participate in improvisational theatre competitions, were used to expressing themselves mainly through language and through interaction with the audience. Since our main interest is in expressive movements, we would rather use dancers or mime actors next time. Furthermore, the personality profile restricted the actors in their expression. Of course, the intention of using the personality profile was to guide the actors in their expressions in order to fit the desires of the users. However, it might have limited their creativity.

4.3 Lessons: From actor to robot expressions

The anatomy of the human actors is rather different from the anatomy of the domestic robot we envision. Therefore, it is difficult to map expressions of the actors directly onto the robot. However, by abstracting the expression of the actor and keeping the essential characteristics of his movement, we were able to translate it into concrete expressions for the robot.

The sketched storyboard proved to be a fast and useful way to discuss the behavior of the robot. It helped in quickly deciding on a scenario with behaviors to be implemented on the robot. Obviously, sketches on paper have some limitations: movements and sounds cannot be rendered and must be imagined by the design team.

4.4 Lessons: Visualize in 3D animation

Our main goal for using 3D visualization was to gather qualitative feedback from the user in an early design phase. Animating a virtual version of the domestic robot required less effort than implementing hardware prototypes.

By using physical simulation we obtained more realistic behavior that can be reused more easily on a physical embodiment in later stages, but we also inherited some of the problems of dealing with real-world conditions. For example, while in purely virtual worlds the path of a mobile robot can be repeated exactly in successive runs, physical simulations add random inaccuracies to the motion, for instance due to wheel slip. Because successive runs of the same behavior produced different output, we chose to show recorded movies of the virtual environment.
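The run-to-run variation described above can be illustrated with a minimal sketch. It is not the simulation used in this work: the differential-drive model, the slip model, and all names and parameter values (`drive`, `slip_std`, `wheel_base`, the command list) are assumptions chosen for illustration only.

```python
import math
import random


def drive(commands, slip_std=0.0, rng=None, dt=0.1, wheel_base=0.3):
    """Integrate a differential-drive path and return the final (x, y, heading).

    commands is a list of (left wheel speed, right wheel speed) pairs.
    slip_std = 0.0 models an ideal virtual world without wheel slip.
    """
    rng = rng or random.Random()
    x = y = heading = 0.0
    for v_left, v_right in commands:
        # Hypothetical slip model: each wheel loses a random fraction of its speed.
        v_left *= 1.0 - abs(rng.gauss(0.0, slip_std))
        v_right *= 1.0 - abs(rng.gauss(0.0, slip_std))
        v = (v_left + v_right) / 2.0
        omega = (v_right - v_left) / wheel_base
        x += v * math.cos(heading) * dt
        y += v * math.sin(heading) * dt
        heading += omega * dt
    return x, y, heading


# The same scripted behavior: drive straight, then curve to the left.
commands = [(0.2, 0.2)] * 20 + [(0.1, 0.2)] * 20

# Without slip, two runs of the same behavior end in exactly the same pose.
ideal_a = drive(commands)
ideal_b = drive(commands)

# With slip, two runs of the same behavior end in different poses.
slip_a = drive(commands, slip_std=0.05, rng=random.Random(1))
slip_b = drive(commands, slip_std=0.05, rng=random.Random(2))

# Re-using the seed reproduces one particular run.
slip_a_replay = drive(commands, slip_std=0.05, rng=random.Random(1))
```

In this sketch, fixing the random seed pins down a single run, which is loosely comparable to showing every participant the same recorded movie. Whether a given physics engine supports such seeding is a further assumption.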

Next to these practical experiences, we also want to stress some of the more fundamental considerations of this approach that have to be taken into account. Using a virtual simulation of the robot gives the designer the same artistic freedom as in traditional cinematography. The designer has control over the whole scene, including, for example, lighting, camera angle and other objects in the scene. The camera angle alone can have a significant impact on the perception of the character. In our experiments, we therefore tried to keep the camera at the height of an average person and to keep the lighting and objects in the scene as neutral as possible. In reality, however, these parameters cannot be controlled, so tests with virtual representations will not substitute for testing the behavior on the physical hardware. This strengthens the argument for creating virtual behavior that can be translated to a physical embodiment.

4.5 Lessons: Evaluate with think-out-loud

From the feedback that we received, we concluded that the participants were able to imagine what the behavior would look like on a physical embodiment, which supports our assumption that 3D simulations are a good approximation of the physical robot. In our opinion, a qualitative study using a think-out-loud protocol is preferable to more quantitative methods at this early stage of product development. The results give in-depth information about how participants perceived the robot behavior and provide input for redesign of the behavior.

However, the use of a virtual representation of the robot for evaluation has some limitations compared to evaluations with physical robots. For example, simulation of the (physical) interaction between a user and the robot is not possible when using movie clips with animations.

5. Conclusion

We have described a process to design the behavior of a domestic robot and proposed it as a way to design a personality and appropriate expressions for autonomous products. The process consists of five main steps, namely creating a personality profile, getting inspiration for the expressions, sketching a scenario, visualizing it in 3D animation, and evaluating it using a think-out-loud protocol. The proposed process combines proven methods from HCI and translates them to the field of HRI. It integrates technical, artistic and user-centered approaches to develop the personality of a robot in an iterative design process. In next steps, we want to improve the process and investigate its applicability in designing a broader range of consumer electronic products. Furthermore, we want to compare our process with existing and widely used product design processes.

Acknowledgements

Our thanks go to Flip van den Berg, Peter Jakobs, Albert van Breemen, Dennis Taapken, and Ingrid Flinsenberg for their valuable contributions to parts of the work described in this paper.

6. References

- Forlizzi, J. and DiSalvo, C. (). Service robots in the domestic environment: a study of the Roomba vacuum in the home. In: Proceedings HRI ’, pages –, New York, NY, USA. ACM.

- Wrede, B., Haasch, A., Hofemann, N., Hohenner, S., Hüwel, S., Kleinehagenbrock, M., Lang, S., Li, S., Toptsis, I., Fink, G. A., Fritsch, J. and Sagerer, G. (). Research issues for designing robot companions: BIRON as a case study. In Proc. IEEE Conf. on Mechatronics & Robotics, volume , –, Aachen, Germany,

- Goodrich, M.A. and Schultz, A.C. (). Human-robot interaction: a survey. Found. Trends Hum.-Comput. Interact., ():–.

- Fong, T., Nourbaksh, I., Dautenhahn, K. (). A survey of socially interactive robots, Robotics and Autonomous Systems , –

- Norman, D. (). How humans might interact with Robots, Retrieved Sep. th from http://www.jnd.org/dn.mss/how_might_human.html

- Reeves, B. & Nass, C. (). The media equation: How people treat computers, televisions, and new media like real people and places, New York: Cambridge University Press

- Meerbeek, B., Hoonhout, J., Bingley, P. and Terken, J. (). The influence of robot personality on perceived and preferred level of user control. Human and Robot Interactive Communication, Dautenhahn, K. (ed.), –.

- Heider, F, & Simmel, M. (). An experimental study of apparent behavior. American Journal of Psychology, , -.

- Tremoulet, P., Feldman, J. (). Perception of animacy from the motion of a single object. Perception, volume , -

- Breazeal, C. (). Designing Sociable Robots. MIT Press, Cambridge, MA, USA.

- Ishiguro, H. (). Interactive humanoids and androids as ideal interfaces for humans. In Proceedings IUI ’, pages – , New York, NY, USA. ACM Press.

- Duffy, B.R. (). Anthropomorphism and the social robot. Robotics and Autonomous Systems, (–):–.

- McAdams, D., Pals, J. (). A New Big Five: Fundamental Principles for an Integrative Science of Personality, American Psychologist vol. , nr. , -

- Carver, C. & Scheier, M. (). Perspectives on personality, Boston, USA: Allyn and Bacon

- Dryer, D.C. (). Getting personal with computers: How to design personalities for agents. Applied Artificial Intelligence, ():–.

- Kiesler, S.B. & Goetz, J. (). Mental models of robotic assistants. CHI Extended Abstracts: -

- Walters, M.L., Syrdal, D.S., Dautenhahn, K., Te Boekhorst, R., Koay, K.L. (). Avoiding the uncanny valley: robot appearance, personality and consistency of behavior in an attention-seeking home scenario for a robot companion. Autonomous Robots, volume ()

- Duffy, B.R., Dragone, M. and O’Hare, G.M. (). The social robot architecture: A framework for explicit social interaction. In Android Science: Towards Social Mechanisms, CogSci Workshop, Stresa, Italy.

- Snibbe, S., Scheeff, M., and Rahardja, K. (). A layered architecture for lifelike robotic motion. In Proceedings of The th International Conference on Advanced Robotics

- Brooks, R.A. (). Architectures for Intelligence; VanLehn K. (ed.), chapter How to build complete creatures rather than isolated cognitive simulators, pages –. Lawrence Erlbaum Associates, Hillsdale, NJ.

- Kawamura, K., Pack, R., and Iskarous, M. (). Design philosophy for service robots. In Systems, Man and Cybernetics. Intelligent Systems for the st Century, volume , pages –.

- Loyall, A. (). Believable Agents: Building Interactive Personalities. PhD thesis, Carnegie Mellon University.

- Neubauer, B.J. (). Designing artificial personalities using jungian theory. J. Comput. Small Coll., ():–.

- Thomas, F. and Johnson, O. (). The Illusion of Life -Disney Animation. Walt Disney productions.

- Van Breemen, A. (). Bringing robots to life: Applying principles of animation to robots. In Proceedings of the Workshop on Shaping Human-Robot Interaction Understanding the Social Aspects of Intelligent Robotic Products (CHI), Vienna.

- Dautenhahn, K. (). Design spaces and niche spaces of believable social robots. In Robot and Human Interactive Communication, pages –.

- Benyon, D., Turner, P. and Turner, S. (). Designing interactive systems. Addison-Wesley New York.

- Gould, J.D. and Lewis, C. (). Designing for usability: key principles and what designers think. Commun. ACM, ():–.

- Cockton, G. (). Revisiting usability’s three key principles. In CHI ’: CHI ’ extended abstracts on Human factors in computing systems, pages –, New York, NY, USA. ACM.

- Ljungblad, S., Walter, K., Jacobsson, M. and Holmquist, L. (). Designing personal embodied agents with personas. In Robot and Human Interactive Communication, ROMAN ., pages – .

- Pruitt, J. and Grudin, J. (). Personas: Practice and theory. In Proceedings of the Conference on Designing for User Experiences.

- Osada, J., Ohnaka, S. and Sato, M. (). The scenario and design process of childcare robot, papero. In proceedings ACE ’, page , New York, NY, USA. ACM.

- Friess, E. (). Defending design decisions with usability evidence: a case study. In CHI ’: extended abstracts on Human factors in computing systems, pages –, New York, NY, USA. ACM.

- Höök, K. (). From Browse to Trust: evaluating embodied conversational agents, volume of Human-Computer Interaction Series, chapter User-Centred Design and Evaluation of Affective Interfaces: A Two-tiered Model, pages –. Springer Netherlands.

- Srivastava, S. (). Measuring the Big Five Personality Factors. Retrieved Sep.th from http://www.uoregon.edu/~sanjay/bigfive.html.

- John, O.P. et al., The Big-Five Trait Taxonomy: History, Measurement, and Theoretical Perspectives, In: Pervin, L. and O.P. John, Handbook of personality: Theory and research, New York,

- Costa, P. and R. McCrae (). NEO Personality Inventory – Revised, Retrieved Sep. th from http://www.parinc.com/products/product.aspx?Productid=NEO-PI-R

- Boeree, G. (). Big Five Mini Test. Retrieved Sep. th from http://www.ship.edu/~cgboeree/bigfiveminitest.html

- Saucier, G. (). Mini-Markers: A Brief Version of Goldberg’s Unipolar Big-Five Markers, Journal of Personality Assessment, (), -

- Stanislavski, C. and Hapgood, E. R. T. (). Building a Character. Routledge.

- Trappl, R., Petta, P. and Payr, S. editors, . Emotions in Humans and Artifacts. The MIT Press.

- Strack, F., Martin, L. and Stepper, S. (). Inhibiting and facilitating conditions of the human smile: A nonobtrusive test of the facial feedback hypothesis. Journal of Personality and Social Psychology, ():–.

- Van Loenen, E., De Ruyter, B. & Teeven, V. (). ExperienceLab: Facilities. In Aarts, E. & Diederiks, E. (Eds.), Ambient Lifestyle: From Concept to Experience (pp. – ). Amsterdam, The Netherlands: BIS Publishers

- Buur, J. & Søndergaard, A. (). Video card game: an augmented environment for user centred design discussions. Designing Augmented Reality Environments : -

- Ruttkay, Z., Hendrix, J., Ten Hagen, P., Lelièvre, A., Noot, H. and De Ruyter, B. (). A facial repertoire for avatars. In Proceedings of CELE-Twente Workshops on Natural Language Technology, pages –.

- Saerbeck, M. and Van Breemen, A.J. (). Design guidelines and tools for creating believable motion for personal robots. In Robot and Human interactive Communication, . RO-MAN . The th IEEE International Symposium on, pages –.

- Kidd, C. and Breazeal, C. (). Effect of a robot on user perceptions. In Intelligent Robots and Systems, . (IROS ). Proceedings. IEEE/RSJ International Conference on, volume , pages – . IEEE.

- Powers, A., Kiesler, S., Fussell, S. and Torrey, C. (). Comparing a computer agent with a humanoid robot. In HRI ’: Proceeding of the ACM/IEEE international conference on Human-robot interaction, pages –, New York, NY, USA. ACM Press.

- Van Breemen, A.J. (). Scripting technology and dynamic script generation for personal robot platforms. In Intelligent Robots and Systems, . (IROS ). IEEE/RSJ International Conference on, pages – . IEEE CNF.

This is a pre-print version | last updated August 11, 2009