DOI: 10.1145/1753326.1753567

Saerbeck, M., Schut, T., Bartneck, C., & Janse, M. (2010). Expressive robots in education - Varying the degree of social supportive behavior of a robotic tutor. Proceedings of the 28th ACM Conference on Human Factors in Computing Systems (CHI2010), Atlanta, pp. 1613-1622.

Expressive Robots in Education - Varying the Degree of Social Supportive Behavior of a Robotic Tutor

2Department of Industrial Design

Eindhoven University of Technology

Den Dolech 2, 5600MB Eindhoven, NL

m.saerbeck@tue.nl, christoph@bartneck.de

1Philips Research

High Tech Campus 34, 5656 AE Eindhoven

martin.saerbeck@philips.com, tom.schut@philips.com, maddy.janse@philips.com

Abstract - Teaching is inherently a social interaction between teacher and student. Despite this knowledge, many educational tools, such as vocabulary training programs, still model the interaction in a tutoring scenario as unidirectional knowledge transfer rather than a social dialog. Therefore, ongoing research aims to develop virtual agents as more appropriate media in education. Virtual agents can induce the perception of a life-like social interaction partner that communicates through natural modalities such as speech, gestures and emotional expressions. This effect can be additionally enhanced with a physical robotic embodiment. This paper presents the development of social supportive behaviors for a robotic tutor to be used in a language learning application. The effect of these behaviors on the learning performance of students was evaluated. The results support that employing social supportive behavior increases learning efficiency of students.

Keywords: Social interaction, Education, Tutoring, Human-robot interaction

Introduction

The interaction between teachers and students is inherently social. Tiberius phrased it in 1993 as: “Relationships are essential to teaching as flour in the cake” (par. 1) [37]. Currently, virtual agents and animated characters are being investigated as media appropriate for educational technology [20]. These technologies are based on the natural tendency of people to interact socially with all types of media [32]. Parise et al. showed that even the degree of anthropomorphization in the appearance of the interface has a significant impact on the behavior of the user [30]. They argued that the appearance influenced the participants’ social expectations, hence stressing the importance of a framework for social interaction. Furthermore, research has shown that the effect of being perceived as a social interaction partner can additionally be enhanced by a physical robotic embodiment [31, 42]. For example, participants tend to disclose less personal information when interacting with a perceived social character. However, this also means that not every application benefits from socially interactive interfaces.

It is still an open debate whether robots that work together with humans in a cooperative, team-like setting should be designed merely as tools or as social interaction partners [15, 14]. For example, in the field of search and rescue, there seems to be a preference to treat the robot as a tool [7].

Strommen and Alexander also make an essential distinction between interfaces with the mere character of a tool and interfaces that engage socially with the user [36]. With social interaction, the simple use of a tool becomes a partnership or collaboration. More specifically, the authors argue that including emotions in educational interfaces for children actively supports the learning goals of the educational product. They found a positive effect of adding emotions to the audio interface of their educational system and offered the explanation that this manipulation supported the interpretation of a social character. However, it remains unclear to what degree the interface should engage in social interaction.

In this study we investigated whether social engagement of a robot interface can effectively be applied in an educational context. We developed an application in which the robot takes the role of a tutor to support students with a language learning task. The main question is then whether social supportive behaviors rendered by a robotic interface have an effect on the learning performance of students. To address this question, two versions of the language tutor were created: (1) a socially supportive tutor that engages in a social dialog and (2) a neutral tutor that focuses on plain knowledge transfer.

1.1 Social supportive behavior in education

In the literature, social support has been analyzed in different contexts, for example in the clinical domain [23]. It is still under debate which aspects exactly define social supportive behavior. Relevant aspects are, for example, reflected in the development of assessment scales [2]. Some of the items on these scales, such as “let you know you did something well”, can directly be translated to behavior in an educational context, while others, such as “Gave you over $25”, only apply in a more general context of interpersonal relationships.

Tiberius and Billson analyze social supportive behavior in the context of education. They offer different explanations why social supportive behavior has a positive impact on the learning performance of students [38]. Among others, they compare two paradigms that are used to describe the interaction in a learning scenario: (1) teaching as knowledge transfer and (2) teaching as a social dialog. They argue that a dialog is more appropriate, hence more effective, and support this with evidence from educational research. According to Tiberius and Billson, an effective teacher creates a relationship of trust in order to improve the students’ motivation. Assuming that learning is an active process in which the student has to invest work, motivation is a driving force that has an impact on the learning performance. Through a good relationship, a teacher can influence this motivation, over which he otherwise has no direct control.

Furthermore, educational literature gives ample evidence that social skills of teachers and the way they interact with students are crucial for achieving optimal educational success of students [19, 8, 13, 41, 6]. We refer to this type of behavior as social supportive behavior in an educational context. That is, the degree by which teachers engage in social supportive behavior determines their effectiveness. Teachers that engage more in social supportive behavior are expected to achieve higher student learning performances than those who purely focus on knowledge transfer.

In the line of this reasoning, animated pedagogical agents have been proposed as a medium to offer social feedback in an educational context.

1.2 Animated pedagogical agents

Technological advances offer new ways of interacting with learning content. For example, Jackson and Fagan explored immersive virtual reality environments using a head-mounted display as a medium for learning [18]. However, even though interactive technologies add new modalities, the interaction still resembles the usage of tools. Animated pedagogical agents have been proposed as a promising interface paradigm that allows human-like social interaction due to their ability to give non-verbal and emotional feedback [20]. It has been shown that animated agents can give the impression of being life-like creatures with emotions, desires and intentions [39]. Dehn and Mulken define interface agents as computer programs that aid a user in accomplishing a task carried out at the computer [10]. They summarize the main arguments of advocates of social interaction for educational applications, i.e., animated agents are more engaging and motivating, and they facilitate interaction through familiar interaction modalities and thus support cognitive functions. Opponents criticize that animated agents might raise expectations that are too high, might be distracting or might lead to misinterpretations. Dehn and Mulken systematically analyzed existing studies that evaluate the effect of an animated interface agent. Overall, interfaces with an animated agent appeared to be more entertaining. However, a clear advantage of anthropomorphized interfaces could not be found. Furthermore, the relationship between the type of animation and the domain in which it is employed remained unclear.

Moreno et al. report a series of experiments employing an animated pedagogical agent [28]. Their hypothesis is that students learn more efficiently when they engage socially with the interface. To investigate this hypothesis they constructed a series of conditions in which they varied the presence of a mediating interface agent, level of interactivity, text and speech modality, and visual appearance of the agent. For example, they found effects on transfer and interest ratings when they compared conditions with and without an animated agent. Interestingly, they found no effects on retention tests. The visual appearance also did not have an effect on the task performance. Furthermore, it remained inconclusive to what extent the degree of social behavior influences the learning performance.

Johnson et al. review a variety of animated pedagogical agents, such as Steve (Soar Training Expert for Virtual Environments), Adele (Agent for Distance Learning: Light Edition) or Gandalf. All of these agents inhabit a virtual environment; some require specialized hardware such as immersive 3D, while others integrate in a web browser. They all have in common that they enable the use of non-verbal behaviors. Non-verbal behavior can be used, for example, to guide the student’s attention, control the flow of the dialog, or give feedback. One advantage of non-verbal behavior is that such feedback does not disrupt the student’s train of thought. For example, a nod or shaking of the head can be used to steer the student in the right direction.

Lester et al. describe a system with a screen-based animated pedagogical agent whose main function is to motivate students, so that they are willing to spend more time on a learning task [25]. In the study it was observed that the agent not only had a positive effect on the perception of students but also increased the learning performance. They call this effect the “persona effect”. A strong positive effect was obtained even if the agent was not expressive. In contrast, Hongpaisanwiwat and Lewis could not find a significant effect of an animated agent within a multimedia presentation on participants’ attitude [17]. Nevertheless, they reported that participants were willing to view more slides with an animated character and felt more comfortable. Hardré found that motivational interventions can significantly improve the effectiveness of tutoring, but that only few educational programs meet these requirements [16].

Several methods have been proposed to generate behaviors for animated agents. For example, Johnson et al. describe a system to generate a dialog for a tutoring system [21]. They applied a politeness framework to compute appropriate responses during the tutoring interaction. For our case, however, direct control over the expressiveness of iCat is required in order to assess the impact of social supportive behavior. Interactive animations, as described in [33], provide high-level control over the expressivity while also maintaining a high level of control in adapting the animation to the context of a specific state.

1.3 Robots in education

While virtual pedagogical agents inhabit a virtual on-screen environment, robots in contrast share the same physical reality with the user. One of the main arguments for using a robotic embodiment is that the effect of being perceived as a social communication partner is amplified by a physical embodiment [31]. However, Shinozawa et al. found that robots are not always superior to screen based embodiments when they compared the influence of a virtual and physical embodiment of a recommender system on human decisions [35].

Most research that has investigated natural interaction focuses on the knowledge transfer direction from the human to the machine, developing AI capabilities of the robot. In the educational domain this relationship is reversed and the robot uses social interaction capabilities to support the human with a learning task. In the Human-Robot Interaction (HRI) community it is still under debate what characteristics define a social robot [9, 12]. One of the difficulties in this endeavor is that the attitude towards robots varies greatly across cultures [4]. However, researchers in the field of social robots often refer to the social context of education as a potential task scenario for social robots, independent of cultural background. Feil-Seifer and Matarić even consider tutoring a defining task example for socially assistive robots [11].

In the field of education, Shin and Kim analyzed the reactions of students to robots in class [34]. In their study they interviewed pupils on their attitude towards learning about, from and with robots. They found that in general younger children like to work with robots and that they expect robots to take the role of a private tutor rather than a learning companion. In this role, the ability of emotional expression appears to be a key feature for acceptance of the tutor. Whether social robots have an advantage due to their physical presence is still under debate for a tutoring application. For example, Bartneck et al. report evidence that there is no significant difference between a screen character and a robot [3].

Nowadays, robots in education are mostly used to learn about robots, i.e., about sensors and actuators and how to program a robot. One of the most popular examples is Lego® Mindstorms [24]. These robotic kits provide a practical ‘hands-on’ approach and lower the threshold for students to try out their ideas. As such, they enable a teaching style that is referred to in educational literature as active learning [26]. The active learning teaching style increases students’ motivation and learning performance [26] as they can actively influence the order of presented material by asking questions and creating a meaningful context for the learning material. This implies that implementing social interaction in addition to a mere presentation of materials is likely to be more effective and robots can serve as a means to realize such social interfaces.

2 Tutoring application design

We implemented a tutoring application with the robotic research platform “interactive Cat” (iCat) from Philips Research [5], depicted in Fig. 1.

Figure 1: iCat: Robotic research platform for human-machine interaction developed by Philips Research

The iCat robot has the shape of a cat with a mechanically rendered face and is approximately 40 cm high. With 13 degrees of freedom to animate parts of the head, it is able to express basic facial expressions and emotions. It has a camera, a microphone and four touch sensors, which are located in the ears and paws. Furthermore, it contains an infrared distance sensor in the left paw to sense the approach of an interaction partner. In our scenario iCat takes the role of a language tutor. The following section describes the details of the tutoring application.

2.1 Language learning task

Given the iCat embodiment and given the focus on interaction, we chose a language learning task for the tutoring application. The major requirements for the selection of the language were that it should be relatively easy to learn, so that students would be able to form simple sentences after an introductory lesson of about 30 min, and that they were not familiar with it. The artificial language “Toki Pona” [22] that has been developed by Sonja Elen Kisa and been published on the Internet fulfilled both of these requirements.

2.1.1 Toki Pona

Toki Pona has a small vocabulary of only 118 words, but it is still powerful enough to be used in simple everyday communication. Toki Pona achieves this reduction of words by grouping words with similar meaning in one construct (e.g., in Toki Pona the word suli means big, tall, long, adult, important, enlarge and size) and identifying the meaning of the word through the context. Based on the learning material on the web, we created a short introductory lesson consisting of pronunciation, basic vocabulary, grammar and a final practice in the form of a small conversation. However, for simplicity, we modified the existing grammar by removing the grammatical particle li from our examples. In the scope of our experiment this simplifies the sentences, avoiding complexity that is not needed to correctly understand and translate very simple sentences.

2.1.2 Learning interaction

To support the student with the learning task, learning materials were developed, consisting of sets of cards that explained the pronunciation, the grammar, and that provided example sentences. A total of 29 cards was used, 15 for vocabulary, 2 for pronunciation, 3 for grammar, and 9 cards with example sentences that are used during the lesson. During the lesson students can refer to the cards for look-up and for presenting answers to iCat. For example, in some exercises the students are required to pronounce a word, while in others they are asked to present the correct card to iCat. This way, the students not only learn the pronunciation, but they also have a visual and tangible mode of input during the lesson.

2.1.3 Lesson script

Based on the learning material we developed a tutor script similar to a movie script in which utterances of iCat were put in a sequence with descriptions of accompanying animations. However, unlike a movie script, an interactive tutoring application contains conditional execution of the dialog. Therefore, we modeled the tutor script in a state-based representation. A state is a basic building block, which defines a behavior sequence of iCat. Depending on input of the student, different transitions between these states are executed. For example, initially iCat is in a “sleeping” state. The student wakes the iCat robot up by touching its ear, which triggers a transition to a “wake-up” state. Subsequently, basic elements of the lesson such as explaining and practicing vocabulary are executed. In total, the lesson script contains 109 states. Some of the transitions are implicitly triggered when a state is finished, while others have to be explicitly triggered by an action of the user.
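The state-based representation described above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the class names, the event names (`ear_touched`, `card_shown`) and the states after “wake-up” are hypothetical, chosen only to show the difference between implicit transitions (followed when a state finishes) and explicit ones (triggered by the student).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class State:
    """One building block of the script: a behavior sequence plus its transitions."""
    name: str
    behavior: List[str]                  # utterances/animations, played in order
    on_finish: Optional[str] = None      # implicit transition when the state is finished
    on_event: Dict[str, str] = field(default_factory=dict)  # explicit, user-triggered


class LessonScript:
    def __init__(self, states: List[State], start: str) -> None:
        self.states = {s.name: s for s in states}
        self.log: List[str] = []         # stands in for actually driving the robot
        self.current = start
        self._enter(start)

    def _enter(self, name: str) -> None:
        # Play the state's behavior, then follow implicit transitions until
        # reaching a state that waits for input from the student.
        while True:
            state = self.states[name]
            self.current = name
            self.log.extend(state.behavior)
            if state.on_finish is None:
                return
            name = state.on_finish

    def handle(self, event: str) -> bool:
        """Apply a user event; return True if it triggered a transition."""
        target = self.states[self.current].on_event.get(event)
        if target is None:
            return False
        self._enter(target)
        return True


def build_demo_script() -> LessonScript:
    # Hypothetical four-state excerpt; the real script has 109 states.
    return LessonScript(
        states=[
            State("sleeping", ["breathe slowly, eyes closed"],
                  on_event={"ear_touched": "wake-up"}),
            State("wake-up", ["open eyes", "greet student"],
                  on_finish="explain vocabulary"),
            State("explain vocabulary", ["explain a word"],
                  on_event={"card_shown": "practice vocabulary"}),
            State("practice vocabulary", ["ask for a translation"]),
        ],
        start="sleeping",
    )
```

In this sketch, touching the ear moves the script from “sleeping” through “wake-up” and directly on to the first explanatory state, because the “wake-up” state carries an implicit transition.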

2.1.4 Application architecture

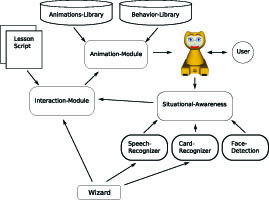

For implementing the state-based script on iCat, we developed an XML-based interaction language that we call “Robot Interaction and Behavior Markup Language”, or “RIBML” for short. The script is interpreted by a specialized module that we named “Interaction-Module”. Figure 2 gives an overview of the architecture of the tutoring application, which we implemented on top of the OPPR framework as described in [40].

Figure 2: Architecture of the tutoring application.

The architecture also illustrates the information flow from iCat’s sensors in the application. The interaction module is the central component in the architecture that triggers the behavior of iCat as specified in RIBML scripts. Next to commands for triggering animations and displaying expressions, RIBML also contains specialized commands that bind to a “Situational-Awareness” component. The Situational-Awareness component offers a transparent interface to sensor data, so that a script does not need to know the details of the underlying hardware or recognition modules. In this case, three recognition modules are indicated that can be accessed through the situational awareness interface, namely a speech recognizer, a card recognizer and a face detector.
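The idea of a transparent interface to sensor data can be sketched as a small facade in Python. This is an assumption-laden illustration, not the OPPR or RIBML API: the class, its `register` and `query` methods, and the stub recognizers are all hypothetical. The point is only that the interaction logic asks for a kind of observation by name and never touches a specific recognizer.

```python
from typing import Callable, Dict, Optional

# A recognizer is modeled as a function returning the latest observation,
# or None when nothing was recognized (hypothetical convention).
Recognizer = Callable[[], Optional[str]]


class SituationalAwareness:
    """Facade that hides which recognition module produces an observation."""

    def __init__(self) -> None:
        self._recognizers: Dict[str, Recognizer] = {}

    def register(self, kind: str, recognizer: Recognizer) -> None:
        self._recognizers[kind] = recognizer

    def query(self, kind: str) -> Optional[str]:
        recognizer = self._recognizers.get(kind)
        return recognizer() if recognizer is not None else None


def build_demo_awareness() -> SituationalAwareness:
    # Stub recognizers standing in for the speech recognizer, the card
    # recognizer and the face detector named in the text.
    awareness = SituationalAwareness()
    awareness.register("speech", lambda: None)       # nothing heard
    awareness.register("card", lambda: "suli")       # student shows the "suli" card
    awareness.register("face", lambda: "present")    # a face is in view
    return awareness
```

Swapping a recognizer for a different implementation would then only change the `register` call, while scripts that call `query("card")` stay untouched.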

2.2 Varying the degree of social supportiveness

In order to define what it means for a robotic tutor to be socially supportive, we extracted aspects of social supportive behavior from a study of Tiberius and Billson and used these as a design guideline for our implementation [38]. They distinguish concrete teacher behaviors that we could translate to our robotic tutor. For example, the social context is determined by behaviors such as smiling, a sense of humor, sensitivity to feelings and the teacher’s enthusiasm. We implemented the following five behavior dimensions to vary the social supportiveness of iCat for its role as tutor: role model, non-verbal feedback, attention guiding, empathy and communicativeness.

Role model In order to define more detailed behavior characteristics of a social robotic tutor, we have to define the general role that the robot takes in the relationship. For example, the robot could take the role of an authority whom the student obeys or the role of a learning companion that follows and supports the student through the learning tasks. Following the argumentation of Tiberius and Billson, a teaching authority that fully controls the students is not desired. They argue that “External control, in the form of bribes, rewards, and threats, may have some immediate desirable effects on performance, but it may also have unfortunate consequences, as far as motivation is concerned” (p.71) [38]. Instead, reciprocal learning, in which a teacher first explains a subject and then engages in a dialog with the student, proved to be more effective.

Hence, the role of an individual tutor rather than a teaching authority was chosen for the robot. That is, the tutor is closer to the student and can explain the learning content attuned to the student’s needs. The tutor has the knowledge authority, but we varied the degree by which the tutor motivates and bonds to the student. For example, a neutral iCat uses the word “you”, while a more social supportive iCat uses “we”. Furthermore, we added motivational sentences such as “Don’t worry. I will help you” or “It was not easy for me either”.

Non-verbal feedback Gestures and non-verbal feedback are natural and unobtrusive ways of communicating without interrupting the student’s train of thought. Tiberius and Billson stress the importance of feedback as a central scheme for teaching when analyzing the social context from a communication theory perspective and support this with evidence that students prefer teachers who respond to their input over televised lectures, which students referred to as “talking heads”. Nevertheless, they also noted that this general statement only holds under the condition that provider and receiver of feedback share the same performance goal. Given the social context, the feedback also must be given appropriately in the context of the situation.

To achieve this, the quality of feedback given by iCat was varied in the implementation. In its most neutral form, feedback is given only verbally, for example by the sentence “This is the wrong answer”, followed by the repetition of the task. A social supportive iCat, on the other hand, annotates these answers with natural gestures such as nodding and shaking of the head.

Attention guiding In an effective teaching scenario it is one of the responsibilities of the teacher to actively guide the student through the learning material. The question is to what extent the teacher exercises control. According to Tiberius and Billson teachers should minimize external control in order to let students develop their internal control.

In our tutoring application we modeled these two extremes in a scaled-down version by the form of orders given to the students. A neutral iCat exhibits high external control, demanding that the student look at the vocabulary or grammar material. A social supportive iCat, in contrast, merely suggests looking at these materials and uses gaze behavior to guide the student’s attention.

Empathy Empathy is another factor that nurtures students’ success. According to Tiberius and Billson, smiling and sensitivity to students’ feelings had a positive influence on the interpersonal relationship and increased the effectiveness of learning. Furthermore, they noted that students responded much more enthusiastically to teachers who showed active personal commitment.

In our tutoring application we implemented basic empathic responses with the display of emotional expressions. The aim of these expressions is to convey that the tutor has an internal stake in the learning performance of the student. Therefore, a social supportive iCat shows a happy face in case of a correct answer of the student and a sad face in response to a mistake. This way iCat annotates progress in the learning task with affective responses, while a neutral iCat does not show this personal involvement.

Communicativeness According to Tiberius and Billson, students rate the quality of a teacher not only based on their technical competence, but also on their competence to relate to students. That is, it is easier to relate to someone who is liked, and consequently it is easier to learn from someone to whom one can relate.

Based on this observation we varied the communicativeness of iCat. For a neutral iCat we designed a machine-like personality reflecting the robotic embodiment by using static and predictable animation. For a social supportive iCat we designed a life-like, extrovert personality by using dynamic idle motions and included subtle light and sound expressions.

3 Evaluation of the robot tutor application

To assess the effect of social supportive behavior of the robot tutor on the learning performance of students we conducted an experiment with the following experimental setup.

3.0.1 Social supportiveness

We implemented two conditions of the independent variable Social Supportiveness for the iCat language tutor: (1) Neutral and (2) Social Supportive. In the neutral condition the behavior of the iCat tutor conforms to the neutral expressions of the five behavior dimensions, i.e., role model, non-verbal feedback, attention guiding, empathy and communicativeness. In the social supportive condition, the behavior of the iCat tutor conforms to the highest level of social supportive behavior of the five behavior dimensions. In terms of the analysis of Tiberius and Billson [38], the focus for the neutral condition is solely on knowledge transfer, while for the social supportive condition the focus is on active dialog and positive social supportive behaviors. For both conditions we used the same language tutoring content.
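The two conditions can be summarized as a lookup over the five behavior dimensions. The following Python sketch only restates the behaviors described in Section 2.2; the data structure, the identifiers and the short descriptions are our own paraphrase and are not taken from the implementation on iCat.

```python
from typing import Dict, NamedTuple


class Dimension(NamedTuple):
    neutral: str
    social_supportive: str


# Paraphrased from the descriptions of the five behavior dimensions.
BEHAVIOR_DIMENSIONS: Dict[str, Dimension] = {
    "role model": Dimension(
        "addresses the student as 'you'",
        "uses 'we' and adds motivational sentences"),
    "non-verbal feedback": Dimension(
        "verbal feedback only",
        "verbal feedback annotated with nodding or head shaking"),
    "attention guiding": Dimension(
        "demands that the student look at the material",
        "suggests looking at the material and guides attention with gaze"),
    "empathy": Dimension(
        "no emotional expressions",
        "happy face on a correct answer, sad face on a mistake"),
    "communicativeness": Dimension(
        "static and predictable animation",
        "dynamic idle motions with subtle light and sound expressions"),
}


def behavior_profile(condition: str) -> Dict[str, str]:
    """Return the behavior per dimension for 'neutral' or 'social_supportive'."""
    if condition not in Dimension._fields:
        raise ValueError(f"unknown condition: {condition!r}")
    return {name: getattr(dim, condition)
            for name, dim in BEHAVIOR_DIMENSIONS.items()}
```

Keeping both levels of every dimension in one table makes explicit that the conditions differ on all five dimensions at once, while the tutoring content itself stays the same.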

3.0.2 Assessment

First, to assess the performance of the participants, we developed a language test according to the guidelines for language test design as given in [1, 29]. As Strommen and Alexander noted, “Emotions in educational interfaces for children therefore can do more than just improve the interface’s quality. They can play an important role in achieving the learning goals of the product itself.” (p. 1) [36]. Hence, the interface actively contributes to the task performance. The test contained 15 multiple choice questions that test pronunciation, vocabulary and grammar. For every question, four different answer options were provided. Participants could select multiple answers if they were convinced that multiple answers were correct.

Second, a questionnaire was administered to assess the effect of social supportive behavior of the robot tutor on the motivation of the participants. This questionnaire distinguished between intrinsic motivation and external motivation. For rating intrinsic motivation we selected items from the subscale of “Interest and Enjoyment” of the “Intrinsic motivation inventory” [27]. For evaluating external motivation the questionnaire contained items related to the motivation to perform the tutoring task and to assess the usability of the application. The items were scored on a continuous ruler on which the participants could indicate with a cross to what degree they agreed or disagreed with a statement of the questionnaire.

Third, we logged data from the touch and distance sensors of the iCat robot during the tutoring sessions. Furthermore, we recorded the time per state, the number of correct and incorrect responses per exercise and the number of repetitions of explanatory states. These parameters were recorded because we expected that the different levels of social supportive behavior of the robot tutor would affect their values. For example, in a social context the number of touches and the physical distance between persons typically depend on the relation between the interacting partners.

Lastly, we conducted a short interview in which we collected qualitative feedback from the participants with regard to their general impressions of the application, what they liked and disliked and why, and how they would explain their experience with the robot tutor to a friend.

3.0.3 Subjects

School children constitute a potential target group for a robotic tutor. They can use it at home to rehearse the material of the last lesson, to learn for an exam or to learn complementary material. In this domain, an interactive social tutor could actively motivate them to spend more time on the learning task and make the learning experience more engaging and fun. Following the advice of Shin [34], that in general younger children are more enthusiastic about robots, and with the requirement that the children should be able to work autonomously with iCat and should be able to recognize its social feedback, we selected 10 to 11 year old children for our test. In addition we required the children to have good reading skills in English and be unfamiliar with the Toki Pona language. All participating children were students from the Primary International School of Eindhoven. In total, 9 girls and 7 boys participated in our experiment. Most of the children of the Primary International School Eindhoven have a migration background, are fluent in English and most of them speak at least one other language.

3.0.4 Procedure

Participants were randomly assigned to either of the two conditions, i.e., the neutral and social supportive condition of the iCat language tutor. A between-subjects design was used. The experiment was conducted at the premises of the Primary International School Eindhoven. Formal consent forms were signed by the parents and the participants. A classroom was set up for this purpose. The iCat robot was placed in the middle of a table and waited in a sleeping state for a participant to start the tutoring session, i.e., showing slow breathing motions with closed eyes. The learning materials were placed in front of iCat. During the training the experimenter stayed in a corner of the room, so that he could react in case the child asked for help but would otherwise not interfere with the tutoring session. A typical interaction during the training session is depicted in Fig. 3.

Each session took approximately one hour and consisted of the following components: 1) intake (5 minutes); 2) learning the Toki Pona language with the aid of iCat tutor (35 minutes); 3) assessment of the learning performance of the participants (7 minutes); 4) administering the questionnaire (7 minutes); and 5) final interview (10 minutes). The experiment was conducted during normal school hours. The participants were allowed to leave their normal lesson for the duration of the experiment and would rejoin their class afterwards.

When the participants entered the room they were invited by the experimenter to take a seat at a table in front of the iCat robot. The experimenter introduced himself, explained the test, gave a short outline of the activities during the experiment, and explained the lesson materials that were prepared in front of iCat. Once the participant had no further questions, the experimenter asked the participant to wake the iCat robot up. iCat then took over, greeting the participant, and the experimenter left the scene for the time of the training, leaving the participant alone with iCat.

The whole interaction was recorded by two cameras. Every participant got as much time as needed to complete the lesson script. At the end of the lesson, iCat asked the participants to inform the experimenter that the lesson had finished, bid farewell, and went back to a sleeping state. The experimenter returned and asked the participants to give a first impression of their experience. After that, the language assessment and the questionnaire were administered, followed by a semi-structured final interview. Before the participants left, they received a small gift for their participation.

Figure 3: A typical interaction during the tutoring experiment: A participant shows the translation of a word during vocabulary training.

4 Results

The dependent variables were language test score, intrinsic motivation, task motivation, duration, proximity, the number of touches and the number of state repetitions. Table 1 shows the mean and standard deviation for all measurements across the two conditions. We conducted independent-samples t-tests in which the degree of social supportiveness of the robot was the independent factor with two conditions: neutral and social supportive. The gender of the participants had no influence on the results and was therefore not considered in the following analysis.

Unlike Moreno et al. [28], we found that the scores on the language test were significantly higher in the social supportive condition (3.09) than in the neutral condition (2.59) (t(15)=-3.819, p=0.002). That is, we found a significant effect of the degree of social supportive behavior on a retention test. Intrinsic motivation and task motivation were also significantly higher in the social supportive condition (7.68 and 6.59, respectively) than in the neutral condition (6.80 and 5.86, respectively) (t(15)=-2.549, p=0.022 and t(15)=-2.507, p=0.024, respectively).
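
As an illustration of how such a statistic follows from summary values, the sketch below recomputes the pooled-variance t statistic for the language test score from the rounded means and standard deviations in Table 1. The group sizes (9 neutral, 8 social supportive) are an assumption that is merely consistent with the reported 15 degrees of freedom; they are not stated in this section. The function name `pooled_t` is illustrative and not part of the study's analysis.

```python
import math


def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Student's t statistic (equal variances assumed) from summary statistics.

    Returns the t value and the degrees of freedom.
    """
    df = n_a + n_b - 2
    # Pooled variance: weighted average of the two sample variances.
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / std_err, df


# ASSUMPTION: group sizes chosen only to match the reported df of 15.
N_NEUTRAL, N_SOCIAL = 9, 8

# Language test score from Table 1: neutral 2.59 (0.28), social supportive 3.09 (0.27).
t, df = pooled_t(2.59, 0.28, N_NEUTRAL, 3.09, 0.27, N_SOCIAL)
print(f"language test score: t({df}) = {t:.2f}")
```

Under these assumptions the sketch yields a value of about -3.74, close to but not identical with the reported -3.819, which is to be expected when recomputing from rounded summary values and assumed group sizes.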

The duration, or ‘time of engagement with the robot’, as measured from the logging data, did not differ significantly between the two conditions, i.e., 2374.74 seconds for the social supportive and 2127.56 seconds for the neutral condition (t(15)=-1.102, p=0.289). In other words, the participants learned more and were more highly motivated in the social supportive condition while spending about the same time with the robot tutor. Furthermore, there was no effect of condition on the number of repetitions, i.e., 32.00 and 30.78 for the social supportive and neutral condition, respectively (t(12)=-0.194, p=0.850).

Proximity, i.e., the physical distance that the participants allowed between themselves and the robot tutor, was significantly smaller in the social supportive condition than in the neutral condition (63.91 and 73.58, respectively) (t(15)=2.465, p=0.030). However, the numbers of ear and paw touches did not differ significantly between the two conditions (t(12)=-2.135, p=0.054 and t(12)=0.886, p=0.393, respectively), even though there is a strong tendency toward more ear touches in the social supportive condition than in the neutral condition. This can be explained by the high standard deviation and by the fact that the ear and paw touches also have a functional meaning in the application. Additionally, due to technical problems, we could only use the log file data of ear and paw touches for 12 participants.

During the final interview, the participants were asked how they would describe the lesson with the iCat tutor to others who do not know it. Participants consistently said that it was a fun exercise and that vocabulary learning felt more like a game than real work, e.g., “It is like a game” (participant 10), “The cards felt more like learning, but iCat was more like a game” (participant 7). In particular, they liked how iCat reacted to them and described iCat as intelligent. They also liked the combination of showing cards to iCat and pronouncing the words: “The most I liked was how she taught you and what she taught you and how you had to put the things in front of her and touch her” (participant 2).

| measure | neutral condition: mean | neutral condition: std. dev. | social supportive condition: mean | social supportive condition: std. dev. |
|---|---|---|---|---|
| language test score | 2.59 | 0.28 | 3.09 | 0.27 |
| intrinsic motivation | 6.80 | 0.82 | 7.68 | 0.55 |
| task motivation | 5.86 | 0.63 | 6.59 | 0.54 |
| duration | 2127.56 | 476.54 | 2374.71 | 399.22 |
| proximity | 73.58 | 7.05 | 63.91 | 7.01 |
| ear touches | 69.33 | 20.50 | 95.00 | 23.53 |
| paw touches | 46.56 | 23.59 | 35.80 | 17.51 |
| state repetitions | 30.78 | 12.05 | 32.00 | 9.67 |

Table 1: Mean and standard deviation for the measurements of the language assessment and questionnaire.

All participants could also imagine learning things other than the presented Toki Pona lesson with iCat, such as other languages and especially mathematics. For mathematics, they would expect iCat to be able to explain the material and, of course, also to help with their homework. iCat was perceived more as a friend than a teacher. In line with this perception, all participants would like to play games with iCat. Some even stated they would spend the whole evening with iCat.

We also asked participants what we should improve. Most often they referred to the voice of iCat and to the fact that it did not always react immediately. Some indicated that they were a little afraid of iCat when she made rough movements, but that they lost this fear fairly soon.

5 Discussion

For our experimental setup we used general findings on best practices of teaching behaviors as design guidelines to vary the social supportiveness of our tutor and translated these findings into concrete behaviors of a robotic tutor. Our results showed a clear positive effect of the robot’s behavior on the learning performance of the participants. This is remarkable, since in both conditions the actual learning content and training time stayed the same. This means that implementing and varying the behavioral dimensions, i.e., role model, non-verbal feedback, attention guiding, empathy and communicativeness, in a tutor application is very promising for achieving improved learning results. Furthermore, the results support the approach of engaging socially with the student. Currently, most learning materials and educational technology focus on mere knowledge transfer and hardly on dialogue and social supportiveness. The evaluation results of the robot tutor application indicate that social supportiveness might be very promising for educational media.

The significantly smaller distance that the participants kept from the robot in the social supportive condition, compared with the neutral condition, further supports that the participants perceived a different degree of socialness. From social science it is known that personal distance is strongly correlated with the quality of a social relationship, i.e., the shorter the physical distance, the closer the relationship. However, we could not find a significant effect of condition on the number of touch events. This can be explained by the fact that in both conditions the touch sensors had a semantic meaning. In particular, touching the ears meant repeating the last explanation, while touching the paws meant continuing with the lesson. Nevertheless, we observed a decrease in the number of paw touches, meaning that in the social supportive condition participants more often let iCat continue on her own. We also observed an increase in ear touch events. As the recorded video data illustrate, this was mainly due to the number of caresses that the participants gave to iCat. Even though the touches had a semantic meaning, the quality of how the participants caressed iCat changed between the conditions.
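
The touch semantics described above (ear touch repeats the last explanation, paw touch continues the lesson) can be sketched as a small script stepper. This is a minimal illustration only: the class `LessonScript`, its fields, and the example state names are hypothetical and do not reflect the actual iCat scripting architecture. In particular, the sketch treats every ear touch as a repeat request, whereas the observations above indicate that many ear touches were caresses.

```python
from dataclasses import dataclass


@dataclass
class LessonScript:
    """Hypothetical stand-in for a scripted lesson driven by touch events."""

    states: list          # ordered lesson states, e.g. explanations of words
    position: int = 0     # index of the state currently being presented
    repetitions: int = 0  # how often a state was repeated on request

    def handle_touch(self, sensor: str) -> str:
        """Apply one touch event and return the state to present next."""
        if sensor == "ear":
            # Ear touch: repeat the last explanation (position unchanged).
            self.repetitions += 1
        elif sensor == "paw":
            # Paw touch: continue with the lesson, stopping at the last state.
            self.position = min(self.position + 1, len(self.states) - 1)
        else:
            raise ValueError(f"unknown sensor: {sensor!r}")
        return self.states[self.position]


# Example state names are invented for illustration.
script = LessonScript(["greeting", "word 1", "word 2"])
for touch in ["paw", "ear", "ear", "paw"]:
    print(touch, "->", script.handle_touch(touch))
print("repetitions:", script.repetitions)
```

Because both sensors carry a functional meaning in such a design, raw touch counts mix task-driven input with affective gestures, which is one reason a touch count alone is hard to interpret as a measure of social closeness.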

From the questionnaire we concluded that the degree of socialness has an effect on the students’ motivation. Both intrinsic motivation and task motivation showed a significant increase from the neutral to the social supportive condition. Consequently, implementing social supportiveness has a positive effect on motivation and hence on the learning process and eventually on the learning efficiency. This is also supported by the fact that time spent on the task remained the same. One participant specifically mentioned that the Toki Pona language was “a bit weird and not useful” (participant 6, supportive condition), but that it was fun to learn with iCat. However, further studies are needed to compare the actual effectiveness of a robotic interface with other embodiments such as vocabulary learning programs or more traditional methods, including pen and paper.

Further studies are also needed to assess student engagement when using a robotic tutor over a longer time period. One of the reasons for applying robotic interfaces in education is that they make the interaction more fun and engaging. However, in our short-term experiment, participants in both conditions reported that it was fun when we asked them for a first impression of the application during the final interview. We suspect that a strong novelty effect amplified the positive attitude towards the application. Additionally, a social factor might have been involved when the children were asked to rate a robot by the experimenter, who supposedly built the robot. Nevertheless, given the results of the motivation questionnaires, we expect students to be more engaged with a social supportive interface and hence to spend more learning time with iCat than they would with a non-social interface.

A longer-term experiment could also be used to further extend the list of characteristics that define social supportive behavior in education. For example, one characteristic that can be added to the list but was not considered in this experiment is the ability to adapt to the mood of the learner. Following Tiberius and Billson [38], a good teacher should have a sense for the student and adapt to his needs. In our experiment, we did not expect this characteristic to have a major effect because of the short time frame of the experiment.

Based on our experiences during the experiment, we propose to use social robots also to investigate which particular behavior patterns are most effective in a tutoring scenario. Social robots provide an ideal research platform to investigate the effect of behaviors in a controlled and structured manner. The results could in turn be translated into best practices and guidelines for implementing tutoring applications.

6 Conclusion

Social supportive behavior of a robotic interface, implemented in a tutoring application, has a positive impact on students’ learning performance. Five characteristics of social supportive behavior were derived from the educational domain, i.e., role model, non-verbal feedback, attention guiding, empathy and communicativeness. These characteristics were translated and operationalized into concrete behaviors of a robotic tutor. Manipulating the levels of these variables induced social supportive behaviors for the robotic tutor that showed very promising results for the improvement of teaching media, in particular for tutoring applications. It has been shown that the perceived social supportiveness could successfully be varied by varying the expressive behavior of the robot. Furthermore, an architecture was presented that has the potential to suit a class of applications that can be implemented using scripting technology.

Finally, the results extend the research findings on teacher behavior from the educational domain to robotic interfaces. Engaging in social interaction seems to be more effective than focusing on mere knowledge transfer. Therefore, it can be argued that robotic user interfaces have the potential to be an effective medium and could be meaningfully integrated in the educational process. Participants in the social supportive condition were significantly more motivated, which is essential for any educational technology to remain effective in the long term.

7 Acknowledgments

We want to thank the Regional International School of Eindhoven for their generous support for this experiment. In particular we want to thank “Groep 8” for their participation and valuable feedback. We also want to thank Omar Mubin for assisting us in the development of the language assessment.

References

[1] J. Alderson, C. Clapham, and D. Wall. Language test construction and evaluation. Cambridge University Press, 1995.

[2] M. Barrera, I. Sandler, and T. Ramsay. Preliminary development of a scale of social support: Studies on college students. American Journal of Community Psychology, 9(4):435–447, 1981.

[3] C. Bartneck, J. Reichenbach, and A. van Breemen. In your face, robot! The influence of a character’s embodiment on how users perceive its emotional expressions. In Proceedings of the Design and Emotion 2004 Conference, Ankara, Turkey, pages 32–51, 2004.

[4] C. Bartneck, T. Suzuki, T. Kanda, and T. Nomura. The influence of people’s culture and prior experiences with AIBO on their attitude towards robots. AI & Society The Journal of Human-Centred Systems, 21(1), 2006.

[5] A. v. Breemen. Bringing robots to life: Applying principles of animation to robots. In Proceedings of the Workshop on Shaping Human-Robot Interaction - Understanding the Social Aspects of Intelligent Robotic Products. In Cooperation with the CHI2004 Conference, Vienna, Apr. 2004.

[6] J. Brophy. Research linking teacher behavior to student achievement: Potential implications for instruction of chapter 1 students. In Designs for Compensatory Education: Conference Proceedings and Papers, page 60p., 1986.

[7] J. Casper and R. Murphy. Human-robot interactions during the robot-assisted urban search and rescue response at the world trade center. IEEE Transactions on Systems, Man, and Cybernetics, Part B, 33(3):367–385, 2003.

[8] L. Darling-Hammond. Teacher quality and student achievement: A review of state policy evidence. Education Policy Analysis Archives, 8(1):1–48, 2000.

[9] K. Dautenhahn. Socially intelligent robots: Dimensions of human-robot interaction. Philos Trans R Soc Lond B Biol Sci., 362(1480):679–704, Apr. 2007.

[10] D. Dehn and S. van Mulken. The impact of animated interface agents: a review of empirical research. International Journal of Human-Computer Studies, 52(1):1–22, 2000.

[11] D. Feil-Seifer and M. Mataric. Defining socially assistive robotics. In Rehabilitation Robotics, 2005. ICORR 2005. 9th International Conference on, pages 465–468, 2005.

[12] T. Fong, I. Nourbakhsh, and K. Dautenhahn. A survey of socially interactive robots. Robotics and Autonomous Systems, 42(3-4):143–166, March 2003.

[13] D. Goldhaber. The mystery of good teaching. Education Next, 2(1):50–55, 2002.

[14] M. A. Goodrich, T. W. McLain, J. D. Anderson, J. Sun, and J. W. Crandall. Managing autonomy in robot teams: Observations from four experiments. In HRI, pages 25–32, 2007.

[15] M. A. Goodrich and A. C. Schultz. Human-robot interaction: A survey. Found. Trends Hum.-Comput. Interact., 1(3):203–275, 2007.

[16] P. Hardre. Beyond two decades of motivation: A review of the research and practice in instructional design and human performance technology. Human Resource Development Review, 2(1):54, 2003.

[17] C. Hongpaisanwiwat and M. Lewis. The effects of animated character in multimedia presentation: Attention and comprehension. In Systems, Man and Cybernetics, 2003. IEEE International Conference on, volume 2, pages 1350–1352, Oct. 2003.

[18] R. Jackson and E. Fagan. Collaboration and learning within immersive virtual reality. In Proceedings of the third international conference on Collaborative virtual environments, pages 83–92. ACM New York, NY, USA, 2000.

[19] A. Jasman. The role of teacher educators in the promotion, support and assurance of teacher quality. http://www.atea.schools.net.au/atea/papers/jasman.pdf, 2001.

[20] W. Johnson, J. Rickel, and J. Lester. Animated pedagogical agents: Face-to-face interaction in interactive learning environments. International Journal of Artificial Intelligence in Education, 11(1):47–78, 2000.

[21] W. Johnson, P. Rizzo, W. Bosma, S. Kole, M. Ghijsen, and H. Van Welbergen. Generating socially appropriate tutorial dialog. Lecture Notes in Computer Science, 3068:254–264, 2004.

[22] S. E. Kisa. Toki pona. Online source: http://www.tokipona.org (accessed Jan. 2009), 2007.

[23] C. Langford, J. Bowsher, J. Maloney, and P. Lillis. Social support: A conceptual analysis. J Adv Nurs, 25(1):95–100, 1997.

[24] Lego®. Mindstorms NXT. Online source: http://mindstorms.lego.com, 2007.

[25] J. C. Lester, S. A. Converse, S. E. Kahler, S. T. Barlow, B. A. Stone, and R. S. Bhogal. The persona effect: Affective impact of animated pedagogical agents. In CHI ’97: Proceedings of the SIGCHI conference on Human factors in computing systems, pages 359–366, New York, NY, USA, 1997. ACM.

[26] S. P. Linder, B. E. Nestrick, S. Mulders, and C. L. Lavelle. Facilitating active learning with inexpensive mobile robots. Journal of Computing Sciences in Colleges, 16(4):21–33, 2001.

[27] E. McAuley. Psychometric Properties of the Intrinsic Motivation Inventory in a Competitive Sport Setting: A Confirmatory Factor Analysis. Research Quarterly for Exercise and Sport, 60(1):48–58, 1989.

[28] R. Moreno, R. Mayer, H. Spires, and J. Lester. The case for social agency in computer-based teaching: Do students learn more deeply when they interact with animated pedagogical agents? Cognition and Instruction, pages 177–213, 2001.

[29] O. Mubin, S. Shahid, C. Bartneck, E. Krahmer, M. Swerts, and L. Feijs. Using language tests and emotional expressions to determine the learnability of artificial languages. In CHI 2009 Conference on Human Factors in Computing Systems, pages 4075–4080, 2009.

[30] S. Parise, S. Kiesler, L. Sproull, and K. Waters. Cooperating with life-like interface agents. Computers in Human Behavior, 15(2):123–142, 1999.

[31] A. Powers, S. Kiesler, S. Fussell, and C. Torrey. Comparing a computer agent with a humanoid robot. In Proceeding of the ACM/IEEE international conference on Human-robot interaction, pages 145–152, New York, NY, USA, 2007. ACM Press.

[32] B. Reeves and C. Nass. The Media Equation: How People Treat Computers, Television, and New Media like Real People and Places. The Center for the Study of Language and Information Publications, September 1996.

[33] M. Saerbeck and A. J. van Breemen. Design guidelines and tools for creating believable motion for personal robots. In Robot and Human interactive Communication, 2007. RO-MAN 2007. The 16th IEEE International Symposium on, pages 386–391, 2007.

[34] N. Shin and S. Kim. Learning about, from, and with Robots: Students’ Perspectives. In Robot and Human interactive Communication, 2007. RO-MAN 2007. The 16th IEEE International Symposium on, pages 1040–1045, 2007.

[35] K. Shinozawa, F. Naya, J. Yamato, and K. Kogure. Differences in effect of robot and screen agent recommendations on human decision-making. International Journal of Human-Computer Studies, 62(2):267–279, 2005.

[36] E. Strommen and K. Alexander. Emotional interfaces for interactive aardvarks: designing affect into social interfaces for children. In Proceedings of the SIGCHI conference on Human factors in computing systems: the CHI is the limit, pages 528–535. ACM New York, NY, USA, 1999.

[37] R. Tiberius. The why of teacher/student relationships. Essays on teaching excellence, Professional and Organizational Development (POD) reading packet 10(4), 1993.

[38] R. Tiberius and J. Billson. The social context of teaching and learning. New Directions for Teaching and Learning, 45:67–86, 1991.

[39] X. Tu. Artificial Animals for Computer Animation: Biomechanics, Locomotion, Perception, and Behavior, volume 1635/1999 of Lecture Notes in Computer Science. Springer Berlin, 1999.

[40] A. van Breemen and Y. Xue. Advanced animation engine for user-interface robots. In Intelligent Robots and Systems, 2006 IEEE/RSJ International Conference on, pages 1824–1830. IEEE CNF, Oct 2006.

[41] A. Wayne and P. Youngs. Teacher characteristics and student achievement gains: A review. Review of Educational Research, 73(1):89, 2003.

[42] J. Yamato, R. Brooks, K. Shinozawa, and F. Naya. Human-robot dynamic social interaction. NTT Tech Rev., 1(6):37–43, 2003.

This is a pre-print version | last updated July 7, 2010