DOI: 10.2478/s13230-010-0011-3

Bartneck, C., Bleeker, T., Bun, J., Fens, P., & Riet, L. (2010). The influence of robot anthropomorphism on the feelings of embarrassment when interacting with robots. Paladyn - Journal of Behavioral Robotics, 1(2), 109-115.

The influence of robot anthropomorphism on the feelings of embarrassment when interacting with robots

Department of Industrial Design

Eindhoven University of Technology

Den Dolech 2, 5600MB Eindhoven, NL

christoph@bartneck.de, [t.bleeker, p.p.m.fens, j.a.i.bun, l.c.p.v.riet]@student.tue.nl

Abstract - Medical robots are expected to help provide care in an aging society. The degree to which patients experience embarrassment during a medical examination might be influenced by the robot's level of anthropomorphism. The results of our preliminary study show that young, healthy, Dutch university students were less embarrassed when interacting with a technical box than with a robot. This result also suggests that the robot was perceived as more of a person than the technical box. Highly human-like robots might therefore not be the best choice for a medical robot. The next step is to compare the robot to a real nurse or doctor. If patients are less embarrassed when interacting with a robot than with a human, they might be less likely to defer important medical examinations that are carried out by medical robots.

Keywords: medical robots, embarrassment, anthropomorphism, emotions

Introduction

Medical robots are used for diagnosis, surgery, therapy, and rehabilitation. Diagnostic robots can “generate consistent, accurate information for further human or machine-based interpretation of patient data” [1]. According to the World Robotics report [1], 4371 units of medical robots (worth 1160 million USD) were in use at the end of 2007, and this number is expected to increase in 2008-2011 by 3420 units (worth 1698 million USD). This places medical robotics in third place for expected growth, right after field robotics (4166 million USD) and defense/rescue & security robotics (2142 million USD).

The Laboratory for Perceptual Robotics at UMass Amherst developed the uBot-5, which, within the framework of the ASSIST system, allows doctors to perform basic medical diagnosis and consultation at the patient’s home without actually being there [2]. In an aging society, such services are expected to gain importance. Robots of this kind could enable medical personnel to provide better service to the growing number of people requiring care, and they also have the potential to reduce stress among nursing staff and patients [3]. Health robots also allow care receivers to live independently at home for longer [4].

Other health robots include InTouch’s RemotePresence-7 robot, which has been used successfully as a telehealth system [5]. This robot may become a new modality for doctor-patient interactions, particularly in areas where access to medical expertise is limited. The Estele robot, built by Robosoft, is a tele-operated robotic system that allows an expert clinician to perform echographic diagnosis remotely. A bidirectional video-conferencing system allows the patient and specialist to communicate. Another example is Gecko Systems’ CareBot, a robot specifically designed to provide monitoring, automatic reminders, companionship, and emergency notification for care receivers.

Most currently available health robots require a doctor to control the robot. The CareBot demonstrated that health robots can also include autonomous behavior. The more tasks the robot can execute without the direct control of a doctor, the more efficient the robot will be. It is already normal that simpler tasks, such as measuring blood pressure and temperature, are not carried out by doctors, but by nurses or family members. We expect that health robots will be able to conduct such simple examinations. This study therefore focused on health robots that do not require the presence of an operator. In other words, we investigated the interaction of patients with a robot and not with a remote doctor.

An important design decision in the development of robots is their appearance [6]. They may be designed to look more or less like a human being. The CareBot, for example, is designed to bear a certain resemblance to humans, while the Estele robot clearly does not. The different levels of anthropomorphism evoke different expectations and behaviors in their human users [7]. Kahn et al. [8] proposed the psychological benchmarks of autonomy, imitation, intrinsic moral value, moral accountability, privacy, and reciprocity, which may in the future help to address the question of what constitutes the essential features of being human in comparison with being a robot. The aspect of privacy is of particular importance in the context of medical robots, since patients are very sensitive to embarrassment. In Harris’s [9] study, 57% of the participants reported that the fear of embarrassment had deterred them from seeking medical care for complaints they believed to be serious. The fact that potential embarrassment leads people to risk their own health is striking. This holds not only for examinations that are potentially embarrassing (e.g. the Papanicolaou test); it is particularly tragic for illnesses that are best treated at an early stage, such as cancer.

Several competing definitions of embarrassment exist, and a discussion about their merits is available [9]. A particularly relevant definition for health robots might be the social evaluation model, championed by Rowland Miller [10]. He proposes that the root of embarrassment is the anticipation of negative evaluation by others. This model might explain Harris’s striking results. The patients might feel that the others (e.g. doctor, family, friends) would look down on them if the symptoms turned out to have a trivial cause. It also explains why patients might feel uneasy about undressing in front of a nurse. The main research question of this study is the degree to which this model of embarrassment translates to robots. Will patients also feel embarrassed to undress in front of a robot? Will the different levels of anthropomorphism influence this experience of embarrassment?

The writer Douglas Adams anticipated embarrassing situations in human-robot interaction in his last book [11], in which Nettle wakes up in her bed and a robotic lamp talks to her: ‘Will you turn around while I get dressed!’ said Nettle. The lamp turned around obediently. It was the same on the other side. ‘Will you please go away?’ she said.

This short excursion into literature illustrates that privacy and embarrassment are not limited to medical applications, but that a robot should be able to comply with social norms and standards if it is to be accepted in the social structure of our homes. Robots should know when not to enter the bedroom or bathroom. The relevance of our research question therefore extends beyond purely medical applications.

Method

We conducted a between-participants experiment in which the embodiment of the health system had three experimental conditions: technical box, technical robot, and lifelike robot. These three embodiments represent three different levels of anthropomorphism. The lifelike robot is the most anthropomorphic, followed by the technical robot and the technical box. We use the term lifelike to label this experimental condition clearly, but we do not intend to suggest that the robot used in this condition is humanoid. Riek [12] pointed out that the range of anthropomorphism is extensive, and on her scale our robot might not qualify as “lifelike”. It is certainly far less anthropomorphic than Hiroshi Ishiguro’s androids.

The participants were asked to participate in a usability study of a health system, and had to perform three increasingly embarrassing examinations. The experiment followed the ethics approval procedure at the Department of Industrial Design at the Eindhoven University of Technology.

Measurements

We observed the behavior of the participants, and we asked them to fill in questionnaires in order to measure the embarrassment they experienced during the experiment. Following Keltner [13], we coded the following seven behaviors as indicators of embarrassment: hand movement (not directed toward the face); posture shift (complex movements involving trunk, hands, or legs); nervous smile (a nervous non-Duchenne smile); gaze shift (a lateral eye movement not accompanied by head movement); gaze down (an eye movement directed downwards); head away (lateral movement); and face touch (hand movements toward the face). In addition, we coded undressing (taking off clothes). Undressing consisted of the following categories: all clothes on; vest/sweater/jacket taken off; as previous plus shoes and socks; as previous plus T-shirt; as previous plus trousers/skirt; and all clothing removed. We recorded how often each behavior occurred for each participant.
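To make the coding scheme concrete, the sketch below represents it as a small data structure: the seven indicators as a fixed tuple, the undressing categories as an ordinal scale, and a per-participant tally of how often each behavior occurred. It is a hypothetical illustration only; the study scored behavior with the Noldus Observer system, and the names `BEHAVIORS`, `UNDRESSING_LEVELS`, `tally_behaviors`, and `undressing_level` are invented here.

```python
# The seven embarrassment indicators coded following Keltner [13].
BEHAVIORS = (
    "hand movement",
    "posture shift",
    "nervous smile",
    "gaze shift",
    "gaze down",
    "head away",
    "face touch",
)

# Ordinal undressing categories; a higher index means more clothing removed.
UNDRESSING_LEVELS = (
    "all clothes on",
    "vest/sweater/jacket taken off",
    "as previous plus shoes and socks",
    "as previous plus T-shirt",
    "as previous plus trousers/skirt",
    "all clothing removed",
)


def tally_behaviors(events):
    """Count how often each coded behavior occurs in one participant's event log.

    `events` is a hypothetical list of behavior labels, one per observed occurrence.
    Unknown labels raise a ValueError so that coding typos are caught early.
    """
    counts = {behavior: 0 for behavior in BEHAVIORS}
    for event in events:
        if event not in counts:
            raise ValueError(f"unknown behavior code: {event!r}")
        counts[event] += 1
    return counts


def undressing_level(category):
    """Map an undressing category to its ordinal level (0 = all clothes on)."""
    return UNDRESSING_LEVELS.index(category)


# Hypothetical log for one participant.
print(tally_behaviors(["gaze down", "nervous smile", "gaze down"]))
```

Keeping every behavior in the tally, including those with a count of zero, gives each participant a record of the same shape, which is convenient for the per-condition means reported later.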

The embarrassment questionnaire was based on Costa et al. [14], and consisted of the items embarrassment, shame, anxiety, disgust, joy, interest, and surprise. The participants were asked to rate their emotions during the experiment on a 7-point scale with anchors labeled “not at all” and “very much”. The average of the first three items is taken as a measurement of the level of perceived embarrassment in a given situation. As a control for the participants’ individual susceptibility to embarrassment, we used the susceptibility to embarrassment scale (SES), which consists of 25 items [15]. To distinguish between the two embarrassment concepts, we use the term Personality Embarrassment to label the concept of susceptibility to embarrassment, and the term Situation Embarrassment to label the concept of the perceived level of embarrassment in a given situation. Both questionnaires were carefully translated into Dutch using the back-translation method.
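The Situation Embarrassment score is the mean of the first three items. A minimal sketch, assuming the 7-point scale is coded 1 (“not at all”) to 7 (“very much”), which the text does not state explicitly; `situation_embarrassment` and the dictionary format of the ratings are hypothetical.

```python
from statistics import mean

# Item order of the questionnaire based on Costa et al. [14].
ITEMS = ("embarrassment", "shame", "anxiety", "disgust", "joy", "interest", "surprise")
# The first three items form the Situation Embarrassment score.
SITUATION_ITEMS = ITEMS[:3]


def situation_embarrassment(ratings):
    """Average the embarrassment, shame, and anxiety ratings.

    `ratings` maps item names to ratings; the 1..7 coding is an assumption.
    """
    for item in SITUATION_ITEMS:
        if not 1 <= ratings[item] <= 7:
            raise ValueError(f"rating for {item!r} is outside the 1-7 scale")
    return mean(ratings[item] for item in SITUATION_ITEMS)


# Hypothetical ratings of one participant; only the first three items are used.
example = {"embarrassment": 4, "shame": 2, "anxiety": 3,
           "disgust": 1, "joy": 5, "interest": 6, "surprise": 2}
print(situation_embarrassment(example))
```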

Setup

The experiment room was approximately 3 × 7 meters. On one side, a table was placed on which the health system was set up (see Figure 1). On the other side of the room, a line on the ground indicated where the participants should stand for the vision test. We placed a carpet between the line and the table, so that the participants would feel comfortable walking barefoot. A weighing scale was placed in front of the table. This scale was connected to the health system by cable. A thermometer was placed on the table, right in front of the health system. We removed the display and button of the thermometer to prevent the participants from actually operating the device. The thermometer was inserted into a disposable thermometer sheath that we exchanged after each use. Furthermore, the thermometer was disinfected after each use.

Fig. 1. Setup of the experiment room

The embodiment factor consisted of three experimental conditions for the health system. The technical box embodiment used the Biopac MP-150 System (see Figure 2), with two speakers placed next to it. Several cables were attached to the Biopac System, and blinking LEDs on its front indicated activity. Since this system is used for measuring biofeedback signals, we assumed that it would be plausible as a health system. Some roboticists may have a more restricted definition of “embodiment”. Our definition of “embodiment” includes talking technical boxes, since it is based not on the question of how to define a robot, but on how humans perceive characters. A talking technical box constitutes a type of character that has a certain embodiment.

Fig. 2. The technical box embodiment using the Biopac MP-150 system

The two robotic embodiments used the Philips iCat robot (see Figure 3). We attached a band with a red cross around the torso of the iCat to indicate its medical purpose clearly. In the lifelike robot experimental condition, the iCat tracked the face of the participant and moved its head and eyes to follow the participant. The eyes, eyelids, eyebrows, and lips were fully animated, and the robot used normal human sentences to communicate with the participant (e.g. “Can you please read the first line for me”). In the technical robot experimental condition, the iCat did not move at all and used short verbal commands (e.g. “Read first line”). While the two robots were physically identical, their behaviors differed. The lifelike robot showed more humanlike behavior and can therefore be considered more anthropomorphic than the technical robot, which showed much less humanlike behavior. For speech synthesis, all three embodiments used the Sofia voice made by the Acapela Group.

Fig. 3. The health iCat robot

For practical reasons, we decided to use a Wizard of Oz method to control the interaction between the health system and the participants. We hid two cameras in the experiment room and showed the live video stream on monitors in the control room. A similar procedure was used by Costa et al. [14]. They showed nude pictures to participants who were either alone or in the presence of two unfamiliar individuals. They hid a camera, and the participants were not aware of its presence. This setup allowed them to observe more natural behavior. Their study showed that the presence of unfamiliar persons changes the experience of embarrassment. It was therefore necessary in our study to hide the cameras, so that the participants were aware only of the robot. Otherwise, the experimenters themselves would have influenced the experience of the participants.

The control room was located right next to the experiment room. The video and audio from the cameras allowed the operator to see and hear the participants at all times. The operator followed a strict protocol to trigger the actions of the health system using a graphical user interface that we built for the purpose. It enabled the operator to quickly access all the relevant actions at a given stage of the experiment.

A second experimenter used the live video to perform live scoring of the participant’s behavior. We used the Noldus Observer system to perform all behavior analyses. The live video was also recorded onto the hard disk, but was deleted immediately if the participant did not sign the video release form. A third camera was hidden under the table, pointing at the display of the scale. It allowed the operator to read the weight of the participant in order to include this value in the dialogue. The participants were not told that cameras were present, and none of the participants detected the cameras.

Procedure

The participants were invited to join a usability test of a health system. When they arrived at the experiment room, they were told only that they would participate in an experiment, and were asked to read and sign a consent form. The consent form explicitly mentioned that they could leave the experiment at any time without any negative consequences. The individual participants were then shown into the experiment room. Before leaving the room, the experimenter instructed the participant to lock the door from the inside to avoid any accidental disturbances.

Next, the health system was activated, and it asked the participant to undertake a vision test. The participant had to stand behind a line on the ground and cover first the left eye and then the right eye. The health system asked the participant to read out letters from a standard vision test poster that was attached to the wall in front of them.

Next, the health system asked the participants to measure their weight. For a correct measurement, the participants would have to undress. If a participant refused to undress, the health system repeated the request up to three times; it never explicitly told the participant to get completely naked. The participants were then instructed to stand on the scale. The health system told the participants their weight before asking them to measure their temperature. For an accurate measurement, the thermometer would have to be inserted into the rectum, which is common practice in Western Europe, in particular for children. However, infrared aural thermometers have become very popular in recent years. An informal interview among the participants and the experimenters revealed that most of them had experienced the traditional rectal thermometer.

If the participant did not comply, the health system would repeat the request up to three times. If the participant completed the temperature measurement, the health system reported the result and asked the participant to throw the disposable thermometer sheath into the waste bin. If the participant did not complete the temperature measurement, the health system proceeded to the next step: the health system thanked the participants and asked them to leave the room.
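The protocol for the two embarrassing requests follows a simple rule: issue the request, repeat it up to three times if the participant does not comply, and then move on without aborting the session. The sketch below models that rule under stated assumptions. `run_request`, `run_session`, and the `complied` callback are hypothetical names standing in for the operator's judgment in the control room, not the interface used in the study; the vision test is left out; and “repeated up to three times” is read as at most three repetitions after the first request, which is an interpretation.

```python
from typing import Callable, List, Tuple

# Hypothetical labels for the two requests that the text says were repeated.
REQUESTS = ("undress for weighing", "temperature measurement")
MAX_REPEATS = 3  # interpretation: first request plus up to three repetitions

# complied(request, attempt) -> bool; attempt 0 is the first request.
Operator = Callable[[str, int], bool]


def run_request(request: str, complied: Operator) -> Tuple[bool, int]:
    """Issue a request and repeat it until the participant complies or the limit is hit.

    Returns (completed, number_of_times_the_request_was_issued).
    """
    for attempt in range(MAX_REPEATS + 1):
        if complied(request, attempt):
            return True, attempt + 1
    return False, MAX_REPEATS + 1


def run_session(complied: Operator) -> List[Tuple[str, bool, int]]:
    """Run the requests in order; a refusal never ends the session early."""
    log = []
    for request in REQUESTS:
        completed, issued = run_request(request, complied)
        log.append((request, completed, issued))
    # Afterwards the health system thanks the participant and asks them to leave.
    return log


# Hypothetical participant: undresses after one repetition, refuses the thermometer.
def example_participant(request: str, attempt: int) -> bool:
    return request == "undress for weighing" and attempt >= 1


for entry in run_session(example_participant):
    print(entry)
```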

The experimenter welcomed the participants back, and instructed them to fill in the two questionnaires. Afterwards the experimenter explained that video cameras were present in the experiment room and explained the reasons for the secrecy. He then asked the participant to sign a video release form. Lastly, the experimenter thanked the participant.

Pilot

We conducted a small pilot test. One important finding was that participants need to be able to lock the door from the inside. In the pilot, the door remained unlocked, and the participants were afraid that somebody might enter the room while they were undressed; hence they were reluctant to follow the instructions of the health system. We initially also used a cardboard box labeled with a red cross as an alternative health system, but the participants did not find it plausible. We therefore decided to use the Biopac system.

Participants

Forty-four participants, aged 18-22 (mean 19.5), took part in the study. All were students at the Eindhoven University of Technology. Table I shows the distribution of male and female participants across the experimental conditions. Thirty-seven participants signed the video release form.

| Condition | Female | Male | Total |
|---|---|---|---|
| Technical robot | 3 | 12 | 15 |
| Lifelike robot | 3 | 11 | 14 |
| Technical box | 2 | 13 | 15 |
| Total | 8 | 36 | 44 |

TABLE I Distribution of participants across the experimental conditions

Results

All participants signed the consent form, took part in the experiment, and filled in the questionnaires. None of them spotted the hidden video cameras, and all of them kept their underwear on during the experiment. Thirty-seven signed the video release form and seven did not. The video recordings of the seven participants who did not sign the video release form were deleted. Before excluding these seven participants from further analysis, we wanted to ensure that leaving them out would not introduce a bias. It is conceivable that these seven participants were the most embarrassed, and that excluding them might influence the results. We therefore conducted statistical tests based on the coding of the participants’ behavior during the experiment and on the data from the questionnaires.

We conducted independent-samples t-tests to check whether the participants who did not sign the video release form were more embarrassed (Situation and Personality Embarrassment) than the participants who did sign the form. There was no significant difference. We performed a Chi-square test to investigate whether more women than men refused to sign the video release form. We could not find a significant relationship (χ2=0.604, df=1, p=0.437). We performed a second Chi-square test to investigate whether participants who undressed further signed the video release form less often. For this test we split the sample into two groups (see Table II): participants who undressed to their underwear (N=18) and participants who did not (N=26). We could not find a significant relationship (χ2=0.524, df=1, p=0.469).
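The first Chi-square test can be checked from the participant counts: Tables I and III together imply that two of the eight women and five of the 36 men did not sign the video release form. The sketch below assumes a Pearson Chi-square test without Yates continuity correction; the paper does not name the variant, but under this assumption the reported values are reproduced. For one degree of freedom the p-value follows from the standard normal distribution, p = erfc(sqrt(χ²/2)), so no statistics package is needed. `chi_square_2x2` is a hypothetical helper name.

```python
import math
from typing import Tuple


def chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson Chi-square test (no continuity correction) for the table [[a, b], [c, d]].

    Returns (chi2, p) for df = 1.
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With df = 1, chi2 = z**2, so P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Rows: female, male. Columns: signed, did not sign (derived from Tables I and III).
female_signed, female_not = 6, 8 - 6
male_signed, male_not = 31, 36 - 31
chi2, p = chi_square_2x2(female_signed, female_not, male_signed, male_not)
print(f"chi2 = {chi2:.3f}, p = {p:.3f}")  # prints: chi2 = 0.604, p = 0.437
```

With expected cell counts as small as about 1.3 women who did not sign, an exact test such as Fisher's would be a more conservative alternative for this table.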

| Wearing | Undressing | Number |
|---|---|---|
| More than underwear | All clothes on | 3 |
| More than underwear | Vest/sweater/jacket taken off | 1 |
| More than underwear | Everything above taken off plus shoes and socks | 9 |
| More than underwear | Everything above taken off plus T-shirt | 13 |
| Only underwear | Everything above taken off plus trousers/skirt | 18 |
| Nothing | Everything taken off | 0 |

TABLE II Count of undressing behavior

Nine out of 44 completed the temperature measurement. We conducted a third Chi-square test to explore whether participants who completed the temperature measurement signed the video release form less often. We could not find a significant relationship (χ2=0.337, df=1, p=0.562).

The decision to sign the video release form was not significantly related to gender, the degree to which the participants undressed, whether they completed the temperature measurement, or how embarrassed they were. We therefore conclude that excluding the seven participants from further analysis is unlikely to introduce a bias. The remaining 37 participants were spread reasonably evenly across the experimental conditions (see Table III).

| Condition | Female | Male | Total |
|---|---|---|---|
| Technical robot | 2 | 8 | 10 |
| Lifelike robot | 3 | 11 | 14 |
| Technical box | 1 | 12 | 13 |
| Total | 6 | 31 | 37 |

TABLE III Distribution of participants across the experimental conditions

For the remaining 37 participants, the experimenter who performed the live scoring refined it by reviewing his original scoring: he watched all videos again and corrected any mistakes he found. In addition, a second experimenter coded the videos independently of the first.

According to Garson [16], the intraclass correlation (ICC) is preferred over Pearson’s r only when the sample size is small (e.g. fewer than 15), given that there are just two raters. We have a sample size of 37 and two raters, and hence we use Pearson’s correlation to test the inter-rater reliability.
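Pearson’s r between the two raters’ counts for one behavior can be computed directly from its definition. The sketch below uses hypothetical counts for six participants; `pearson_r` is an illustrative helper, not the software the authors used. Note that Pearson’s r measures consistency rather than absolute agreement: a rater who systematically records one extra event for every participant still correlates perfectly with the other rater.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation between two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-participant counts of one behavior from the two raters.
rater_1 = [2, 0, 5, 3, 1, 4]
rater_2 = [3, 0, 4, 3, 1, 5]
print(round(pearson_r(rater_1, rater_2), 3))
```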

| Behavior | Pearson correlation | Sig. (2-tailed) |
|---|---|---|
| Hand movement | 0.582 | < 0.001 |
| Posture shift | 0.710 | < 0.001 |
| Nervous smile | 0.800 | < 0.001 |
| Gaze shift | 0.275 | 0.071 |
| Gaze down | 0.336 | 0.026 |
| Head away | 0.698 | < 0.001 |
| Face touch | 0.569 | < 0.001 |

TABLE IV Pearson’s correlation between the two raters.

The average Pearson’s correlation across all behaviors is 0.57. There was low agreement for the gaze shift and gaze down behaviors (see Table IV). Closer inspection revealed that the quality of the videos did not allow an accurate and reliable judgment of eye movements, so the results for gaze shift and gaze down should be interpreted with caution. Excluding gaze shift and gaze down, the average Pearson’s correlation is 0.67, which gives us sufficient confidence in the judgments of the raters.
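Both averages can be recomputed from Table IV. The paper does not state how the coefficients were averaged; a plain arithmetic mean, assumed here, reproduces both reported values. Averaging through Fisher’s z transformation is a common alternative and would give slightly different numbers.

```python
from statistics import mean

# Inter-rater correlations from Table IV.
table_iv = {
    "hand movement": 0.582,
    "posture shift": 0.710,
    "nervous smile": 0.800,
    "gaze shift": 0.275,
    "gaze down": 0.336,
    "head away": 0.698,
    "face touch": 0.569,
}

overall = mean(table_iv.values())
# Drop the two eye-movement codes that the video quality could not support.
without_gaze = mean(r for name, r in table_iv.items() if not name.startswith("gaze"))
print(f"all behaviors: {overall:.2f}, excluding gaze: {without_gaze:.2f}")
# prints: all behaviors: 0.57, excluding gaze: 0.67
```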

A reliability analysis across the 25 Personality Embarrassment items resulted in a Cronbach’s alpha of 0.885, and the same analysis across the three Situation Embarrassment items resulted in a Cronbach’s alpha of 0.769. Both values are above the threshold of 0.7 suggested by Nunnally [17], which gives us sufficient confidence in the reliability of the questionnaires.
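Cronbach’s alpha compares the sum of the item variances with the variance of the participants’ total scores: alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), for k items. The sketch below implements that formula with the standard library; `cronbach_alpha` is an illustrative helper rather than the authors’ software, and the three-item ratings are hypothetical, serving only to show the call.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for k items, each given as one score per participant.

    Sample variances (n - 1 denominator) are used throughout; the ratio is the
    same with population variances because the denominators cancel.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per participant")
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical ratings of five participants on three items.
embarrassment = [1, 3, 4, 6, 2]
shame = [2, 3, 5, 6, 1]
anxiety = [1, 4, 4, 5, 2]
alpha = cronbach_alpha([embarrassment, shame, anxiety])
print(f"alpha = {alpha:.3f}, above 0.7: {alpha > 0.7}")
```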

| Measurement | Technical robot mean | Technical robot std. dev. | Lifelike robot mean | Lifelike robot std. dev. | Technical box mean | Technical box std. dev. |
|---|---|---|---|---|---|---|
| Hand movement | 2.73 | 1.94 | 2.46 | 1.39 | 0.60 | 0.83 |
| Posture shift | 2.73 | 3.86 | 3.08 | 3.20 | 0.27 | 0.59 |
| Nervous smile | 0.67 | 1.23 | 2.69 | 2.46 | 1.53 | 1.30 |
| Gaze shift | 1.67 | 1.23 | 1.23 | 0.73 | 0.73 | 0.70 |
| Gaze down | 4.27 | 3.84 | 4.00 | 1.83 | 0.60 | 0.63 |
| Head away | 4.20 | 3.65 | 3.15 | 2.34 | 1.80 | 1.61 |
| Face touch | 1.67 | 1.91 | 1.46 | 1.39 | 1.07 | 1.16 |
| Situation Embarrassment | 3.16 | 1.41 | 2.77 | 0.88 | 2.22 | 0.60 |

TABLE V Mean and standard deviation for all measurements across all experimental conditions

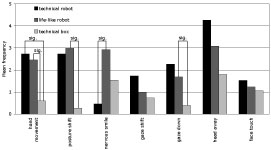

We conducted an analysis of covariance (ANCOVA) with embodiment as the between-participants factor, which had three experimental conditions: technical box, technical robot, and lifelike robot. The dependent variables were hand movement, posture shift, nervous smile, gaze shift, gaze down, head away, face touch, and Situation Embarrassment. Personality Embarrassment was the covariate. The mean and standard deviation for all variables are shown in Table V. The mean count of the behaviors per experimental condition is represented graphically in Figure 4.

The embodiment had a significant influence on hand movement (F(2,32) = 11.413, p < 0.001), posture shift (F(2,32) = 5.589, p < 0.008), nervous smile (F(2,32) = 5.274, p < 0.010), and gaze down (F(2,32) = 5.274, p < 0.010). Its influence approached significance for Situation Embarrassment (F(2,32) = 2.478, p < 0.100) and gaze shift (F(2,32) = 2.809, p < 0.075). Pairwise comparisons with a Bonferroni-corrected alpha revealed several significant differences between the conditions, which are marked in Figure 4.

Fig. 4. Count of behaviors across the experimental conditions. “sig.” indicates significant differences.
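With three conditions there are three pairwise comparisons, so a Bonferroni correction tests each pair at 0.05 / 3 ≈ 0.0167, or equivalently multiplies each raw p-value by three. The sketch below shows only this correction step; it does not reproduce the ANCOVA itself, in which the pairwise comparisons are made on covariate-adjusted means. `bonferroni_adjust` is a hypothetical helper and the raw p-values in the usage line are invented.

```python
from itertools import combinations

CONDITIONS = ("technical box", "technical robot", "lifelike robot")
FAMILY_ALPHA = 0.05

# All pairwise comparisons between the three embodiment conditions.
pairs = list(combinations(CONDITIONS, 2))
per_comparison_alpha = FAMILY_ALPHA / len(pairs)  # 0.05 / 3


def bonferroni_adjust(p_values):
    """Multiply each raw p-value by the number of tests, capping the result at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw p-values, one per pair, in the order of `pairs`.
for pair, p_adj in zip(pairs, bonferroni_adjust([0.01, 0.02, 0.5])):
    print(pair, round(p_adj, 3), "significant" if p_adj < FAMILY_ALPHA else "n.s.")
```

Comparing adjusted p-values with 0.05 and comparing raw p-values with 0.0167 lead to the same decisions; the adjusted form is simply easier to report.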

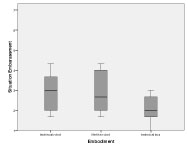

The embodiment had an almost significant influence on Situation Embarrassment, and the behavioral data clearly indicated that participants were less embarrassed with the technical box, particularly in comparison with the lifelike robot (see Figure 5).

Fig. 5. Situation Embarrassment across the embodiment conditions.

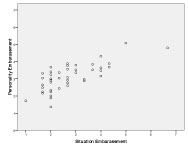

A scatter plot suggests a relationship between Situation Embarrassment and Personality Embarrassment (see Figure 6). We therefore investigated this relationship further.

Fig. 6. Scatter plot of Situation Embarrassment against Personality Embarrassment.

A correlation analysis revealed that Personality Embarrassment and Situation Embarrassment are highly correlated (Pearson’s r = 0.743, p < 0.001). Multicollinearity can be excluded (as is necessarily the case with a single predictor): the Tolerance is 1.000, well above the threshold of 0.1, and the Variance Inflation Factor is also 1.000, well below the threshold of 10. The normal probability plot of the regression standardized residuals (see Figure 7) suggests no major deviations from normality. The values in the residual scatter plot are scattered around zero with no obvious pattern, so no major violations of linearity or homoscedasticity are apparent.

Fig. 7. Regression standardized residual.

The linear regression analysis revealed an R² value of 0.552; the regression therefore accounts for approximately 55% of the variance. The regression equation is Personality Embarrassment = -0.546 + 0.743 × Situation Embarrassment.
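With a single predictor, the R² of a least-squares regression equals the squared Pearson correlation, which is why the reported r = 0.743 and R² = 0.552 agree (0.743² ≈ 0.552), and why Tolerance and VIF are exactly 1.000. The sketch below fits a one-predictor least-squares line and checks this identity. The scores are hypothetical, not the study’s data; `simple_ols` is an illustrative helper; and treating Personality Embarrassment as the predictor is an assumption made only for this example.

```python
import math
from statistics import mean
from typing import Sequence, Tuple


def simple_ols(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float, float]:
    """Fit y = intercept + slope * x by least squares.

    Returns (intercept, slope, r_squared, pearson_r).
    """
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    slope = sxy / sxx
    intercept = my - slope * mx
    # R^2 from the residuals, computed independently of the correlation.
    residual_ss = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    r_squared = 1 - residual_ss / syy
    r = sxy / math.sqrt(sxx * syy)
    return intercept, slope, r_squared, r


# Hypothetical Personality (x) and Situation (y) Embarrassment scores.
personality = [2.1, 2.8, 3.0, 3.6, 4.2, 4.9]
situation = [1.7, 2.4, 2.2, 3.1, 3.0, 4.1]
intercept, slope, r2, r = simple_ols(personality, situation)
print(f"R^2 = {r2:.4f}, r^2 = {r ** 2:.4f}")
print(f"reported r = 0.743 gives r^2 = {0.743 ** 2:.3f}")
```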

Discussion and Conclusions

Nine of the 44 participants (20%) completed the temperature measurement, the most embarrassing examination in the experiment. Thirty-seven participants signed the video release form. All participants kept their underwear on during the whole experiment. The level of embarrassment in the experiment therefore seemed appropriate: we encountered neither a ceiling effect, in which none of the participants would have completed all tasks, nor a floor effect, in which all participants would have completed all tasks.

The embodiment had a significant effect on the participants’ behavior, and its effect on Situation Embarrassment approached significance. We may therefore conclude that the young, healthy, predominantly male, Dutch university students experienced the technical box experimental condition as less embarrassing than the two robotic experimental conditions. We speculate that the robot was experienced as more anthropomorphic, and therefore more like another person, than the technical box. Since the robot was more like another person, it might have increased the anticipation of a negative evaluation, following Miller’s model [10]. Sherry Turkle has suggested that robots increasingly become “sort-of-alive” and form a class of beings that resides between the animate and the inanimate [18].

This suggests that a more technical-looking robot might be more suitable for a health system than a highly human-like robot, at least with respect to embarrassment. However, highly anthropomorphic robots might have other positive effects on human-robot interaction that outweigh the potential for increased embarrassment. Patients might, for example, have a more positive attitude towards the robot than towards a screen agent [19]. More studies on the relationship between health robots and patients are necessary to provide a full picture of their interaction and its benefits.

Our results emphasize the importance of observational data, since the self-report method may be vulnerable to participants trying to comply with social norms and standards [14]. They may not want to admit that they have been embarrassed. To assess embarrassment, it is therefore a good approach to complement self-reports with observational data.

The results also show that participants who were susceptible to embarrassment were also more embarrassed in the experiment. This relationship was to be expected, and the fact that the measurement instruments were able to detect the relationship strengthens their validity. It would have been difficult to explain if no connection had been found.

We would now like to discuss some of the limitations of this study. First, we have to acknowledge that more men than women participated in the study. The proportion mirrors the general gender distribution at a technical university in the Netherlands. Women are more easily embarrassed [9], and we may therefore expect our results to be slightly different for the general population. This study therefore has to be considered preliminary. A more balanced set of participants, and possibly also additional embodiments, are necessary to confirm our results.

Although the level of embarrassment of the three tasks was appropriate for the sample at hand, it might be perceived differently in other cultures. The Dutch are well known for their liberal approach towards nudity and sex, and participants from a more conservative culture might have acted differently. Moreover, all the participants were rather young, and their attitude towards nudity might be very different from that of senior citizens. Given that one goal for medical robots is to help in an aging society, it will be necessary to repeat this experiment with older participants. Ideally, such an experiment should take place at the actual home of the elderly person. We expect that a different social context, such as the home or a hospital, might also influence the experience of embarrassment. Another step would be to compare the health robot to the presence of a real nurse. There is a chance that patients might feel less embarrassed towards a robot than towards a real human. This may encourage them not to delay medical examinations, and thereby help prevent rapidly escalating medical problems.

References

- [1] United Nations, World Robotics 2008. Geneva: United Nations Publication, 2008.

- [2] P. Deegan, R. Grupen, A. Hanson, E. Horrell, S. Ou, E. Riseman, S. Sen, B. Thibodeau, A. Williams, and D. Xie, “Mobile manipulators for assisted living in residential settings,” Autonomous Robots, vol. 24, no. 2, pp. 179–192, 2008.

- [3] K. Wada, T. Shibata, T. Saito, and K. Tanie, “Effects of robot-assisted activity for elderly people and nurses at a day service center,” Proceedings of the IEEE, vol. 92, no. 11, pp. 1780–1788, 2004.

- [4] I. Rosofsky, “The robots have dawned: Meet the carebot,” June 28, 2009.

- [5] R. Agarwal, A. W. Levinson, M. Allaf, D. V. Makarov, A. Nason, and L.-M. Su, “The roboconsultant: Telementoring and remote presence in the operating room during minimally invasive urologic surgeries using a novel mobile robotic interface,” Urology, vol. 70, no. 5, pp. 970–974, 2007.

- [6] C. Bartneck and J. Forlizzi, “Shaping human-robot interaction - understanding the social aspects of intelligent robotic products,” in The Conference on Human Factors in Computing Systems (CHI2004), E. Dykstra-Erickson and M. Tscheligi, Eds. Vienna: ACM Press, 2004, pp. 1731–1732.

- [7] C. Bartneck, T. Kanda, O. Mubin, and A. A. Mahmud, “The perception of animacy and intelligence based on a robot’s embodiment,” in Humanoids 2007. Pittsburgh: IEEE, 2007, pp. 300–305.

- [8] P. Kahn, H. Ishiguro, B. Friedman, and T. Kanda, “What is a human? - toward psychological benchmarks in the field of human-robot interaction,” in The 15th IEEE International Symposium on Robot and Human Interactive Communication, ROMAN 2006. Salt Lake City: IEEE, 2006, pp. 364–371.

- [9] C. Harris, “Embarrassment: A form of social pain,” American Scientist, vol. 94, no. 6, pp. 524–533, 2006.

- [10] R. S. Miller, Embarrassment: Poise and peril in everyday life. New York: Guilford Press, 1996.

- [11] T. Jones and D. Adams, Douglas Adams’s Starship Titanic: A novel. London: Pan Books, 1997.

- [12] L. D. Riek, T.-C. Rabinowitch, B. Chakrabarti, and P. Robinson, “How anthropomorphism affects empathy toward robots,” in Proceedings of the 4th ACM/IEEE international conference on Human robot interaction. La Jolla, California, USA: ACM, 2009, pp. 245–246.

- [13] D. Keltner, “Signs of appeasement: Evidence for the distinct displays of embarrassment, amusement, and shame,” Journal of Personality and Social Psychology, vol. 68, no. 3, pp. 441–454, 1995.

- [14] M. Costa, W. Dinsbach, A. S. R. Manstead, and P. E. R. Bitti, “Social presence, embarrassment, and nonverbal behavior,” Journal of Nonverbal Behavior, vol. 25, no. 4, pp. 225–240, 2001.

- [15] K. M. Kelly and W. H. Jones, “Assessment of dispositional embarrassability,” Anxiety, Stress & Coping: An International Journal, vol. 10, no. 4, pp. 307–333, 1997.

- [16] D. G. Garson, “Quantitative research in public administration,” May 27, 2009.

- [17] J. Nunnally, Psychometric Theory. New York: McGraw-Hill, 1967.

- [18] S. Turkle, “Cyborg babies and cy-dough-plasm: Ideas about life in the culture of simulation,” in Cyborg babies: From techno-sex to techno-tots, R. Davis-Floyd and J. Dumit, Eds. New York: Routledge, 1998, pp. 317–329.

- [19] A. Powers, S. Kiesler, S. Fussell, and C. Torrey, “Comparing a computer agent with a humanoid robot,” in Proceedings of the ACM/IEEE international conference on Human-robot interaction. Arlington, Virginia, USA: ACM, 2007, pp. 145–152.

This is a pre-print version | last updated October 13, 2010