DOI: 10.5898/JHRI.5.2.Yogeeswaran

Yogeeswaran, K., Zlotowski, J., Livingstone, M., Bartneck, C., Sumioka, H., & Ishiguro, H. (2016). The Interactive Effects of Robot Anthropomorphism and Robot Ability on Perceived Threat and Support for Robotics Research. Journal On Human Robot Interaction, 5(2), 29-47. doi:10.5898/JHRI.5.2.Yogeeswaran

The Interactive Effects of Robot Anthropomorphism and Robot Ability on Perceived Threat and Support for Robotics Research

University of Canterbury

PO Box 4800, 8410 Christchurch

New Zealand

christoph@bartneck.de

ATR Media Information Science Laboratory

2-2-2 Hikaridai, Seika-cho

Soraku-gun, Kyoto 619-0288, Japan

Abstract - The present research examines how a robot’s physical anthropomorphism interacts with perceived ability of robots to impact the level of realistic and identity threat that people perceive from robots and how it affects their support for robotics research. Experimental data revealed that participants perceived robots to be significantly more threatening to humans after watching a video of an android that could allegedly outperform humans on various physical and mental tasks relative to a humanoid robot that could do the same. However, when participants were not provided with information about a new generation of robots’ ability relative to humans, then no significant differences were found in perceived threat following exposure to either the android or humanoid robots. Similarly, participants also expressed less support for robotics research after seeing an android relative to a humanoid robot outperform humans. However, when provided with no information about robots’ ability relative to humans, then participants showed marginally decreased support for robotics research following exposure to the humanoid relative to the android robot. Taken together, these findings suggest that very humanlike robots can not only be perceived as a realistic threat to human jobs, safety, and resources, but can also be seen as a threat to human identity and uniqueness, especially if such robots also outperform humans. We also demonstrate the potential downside of such robots to the public’s willingness to support and fund robotics research.

Keywords: human-robot interaction, anthropomorphism, ability, threat, uncanny valley

Introduction

For years now, robots have been quite visible in popular culture. This is evident going back decades to classic films, such as Star Wars (1977), Star Trek (1987), Blade Runner (1982), and Terminator (1984), as well as more recent films, including Ex-Machina (2015), Robot & Frank (2012), and Surrogates (2008). However, recent technological advancements have turned what was once an imaginative creation of Hollywood into an impending reality. While robots already play an active role in patient care, factories, and military operations, prominent roboticists (Kiesler & Hinds, 2004) argue that robots will soon become fully integrated into human society, in what is dubbed the ‘robot revolution’ (Ripley, 2014; Stewart, 2011). Robots are expected to serve with an increasing presence in our homes, healthcare, elderly care, rescue operations, and combat. Currently, hundreds of millions of dollars are spent worldwide on robotics research to design these technologies. However, despite scientific advances to create the technology, questions remain about the acceptance of robots by the general public. While some scientists develop technology to make these advancements possible, others try to understand what factors impact how people think, feel, and behave toward such technology. The present research examines the interactive effect of two such factors on how people feel about robots and robotics research: (a) a robot’s physical anthropomorphism, and (b) its ability relative to humans. Using research from the psychology of intergroup relations, we examine how exposure to robots that vary on these dimensions impacts people’s perceptions of robots as posing a realistic threat to humans (i.e., a threat to human physical safety, jobs, and resources) or an identity-based threat to humans (i.e., a threat to human identity and uniqueness), and how these factors affect their support for or opposition to robotics research.

Robot Anthropomorphism

People commonly perceive human shapes in their surroundings such as clouds, rocks, and trees (Aggarwal & McGill, 2007). Anthropomorphism refers to the tendency to attribute human characteristics, behaviors, and feelings to non-human entities (Duffy, 2003). In the context of robotics, researchers have examined the relationship between the degree of physical anthropomorphism of a robot and people’s reactions toward it (Złotowski, Proudfoot, Yogeeswaran, & Bartneck, 2015). The relationship between the human-likeness of a non-human object and affinity toward it was first proposed by Mori (1970) in what is called the uncanny valley. According to this hypothesis, increased human-likeness of a non-human object also increases affinity toward it. However, when the appearance closely resembles a human but is not a perfect copy of it, people’s reactions turn strongly negative. When the appearance becomes indistinguishable from a human, the affinity reaches a level close to that of a human. The uncanny valley hypothesis is often used to explain the rejection of humanlike robots and virtual agents. The existence of the uncanny valley continues to be a topic of active discussion (Bartneck, Kanda, Ishiguro, & Hagita, 2007; Kätsyri, Förger, Mäkäräinen, & Takala, 2015; MacDorman & Chattopadhyay, 2016; Rosenthal-von der Pütten & Krämer, 2015; Wang, Lilienfeld, & Rochat, 2015; Złotowski, Proudfoot, & Bartneck, 2013). Several explanations have been proposed as to why people reject highly humanlike robots. These include a neurological explanation (Saygin, Chaminade, Ishiguro, Driver, & Frith, 2012), perception of experience (Gray & Wegner, 2012), empathy (MacDorman, Srinivas, & Patel, 2013), threat avoidance (Mori, 1970), and terror management (MacDorman & Ishiguro, 2006).

How does the physical anthropomorphism of a robot impact people’s attitudes toward it? People tend to build robots with more humanlike features because such features facilitate human-robot interaction (Fasola & Mataric, 2012; Feil-Seifer & Mataric, 2011; Guillian et al., 2010; Wade, Parnandi, & Mataric, 2011). Anthropomorphic robots are vandalized less and treated less harshly than machine-like robots (Bartneck, Verbunt, Mubin, & Al Mahmud, 2007). People also view humanlike interfaces more favorably than machine-like interfaces (Gong, 2008), and the distance people keep between themselves and a robot is influenced by its physical appearance (Kanda, Miyashita, Osada, Haikawa, & Ishiguro, 2005).

Despite the numerous benefits of humanlike appearance in robots, there are also drawbacks to building robots that closely resemble humans. People feel more embarrassed by a humanlike robot than a machine-like robot during a medical check-up, which suggests that for such tasks, machine-like appearance would be more suitable (Bartneck, Bleeker, Bun, Fens, & Riet, 2010). Humanlike appearance also leads to expectations that a robot can follow human social norms (Syrdal, Dautenhahn, Walters, & Koay, 2008). When a robot’s capabilities fail to meet these expectations, however, satisfaction with the interaction decreases. People also reported being calmer while being rescued by a robot with machine-like appearance than by one with humanlike appearance (Bethel, Salomon, & Murphy, 2009).

Humanlike robots may, therefore, be preferred over machine-like robots sometimes, while at other times, machine-like robots may be preferred over humanlike robots. The appearance of a robot needs to be applied in a manner that is appropriate for the given situation (Duffy, 2003). The current study examines whether media exposure to very humanlike robots (androids) vs. less humanlike robots (humanoids) leads people to perceive differential levels of threat to humans depending on a key variable yet to be explored in the literature: the robot’s ability relative to humans’ ability.

Since culture plays an important role in shaping human preferences for robots, we focus the present research on only one cultural context. Specifically, cultural differences seem to be especially prominent in reactions to highly humanlike robots. For example, Japanese participants disliked androids more than American (Bartneck, 2008) or Australian (Haring, Silvera-Tawil, Matsumoto, Velonaki, & Watanabe, 2014) participants did. Similarly, another study showed that Chinese participants had more negative attitudes toward robots in general than did Americans (Wang, Rau, Evers, Robinson, & Hinds, 2010). However, MacDorman, Vasudevan, and Ho (2008) did not find many differences in implicit and explicit attitudes toward robots between American and Japanese participants. To avoid the potential impact of culture on human-robot interaction, the present research involves only participants from the United States.

Robot Ability

Robots are designed with varying levels of ability depending on the roles they are created to fulfill. While some robots can already outperform humans on physical tasks such as lifting, driving, and table tennis, other robots can already outperform humans on mental tasks including mathematics, problem-solving, and chess. How does awareness of a robot’s ability relative to humans’ impact people’s reactions toward it? Does this further depend on the physical anthropomorphism of the robot? Previous research suggests that first and foremost, a robot should be functional and capable of performing its designated task; only after this does a robot’s other characteristics matter (Lohse, 2011). Moreover, matching a robot’s appearance to its behavior has been found to impact human-robot interaction (Goetz, Kiesler, & Powers, 2003). However, there is little empirical work examining people’s reactions toward robots that can outperform humans.

In human-robot interaction (HRI), ability is an important aspect in influencing the degree of trust in a robot (Hancock et al., 2011). Because technological limitations have previously led to anthropomorphism outdoing robot performance, there is little evidence delineating what happens when robots are believed to outperform us. Functionality has been shown to be more important than appearance in some cases. For example, in the case of a genomics lab robot that can produce and analyze data more efficiently and to the standards expected of humans, researchers were welcoming of the new robot because it was believed to be a cost-effective and inevitable tool of the future (King, Whelan, Jones, & Philip, 2004). This would suggest that outperforming robots would be perceived more positively than those less capable. However, we argue that when people are exposed to a new generation of robots that not only outperform humans on a variety of physical and mental tasks but also appear very humanlike, then people will perceive robots to be a greater threat and show stronger opposition to robotics research, because it may subtly activate fears of robots as competition to humans for jobs, resources, safety, and even to human identity and distinctiveness as a species. By contrast, when a new generation of robots is perceived as outperforming humans but appears sufficiently distinct from humans, then they may not be as threatening, because they are seen as distinct from humans and therefore not as competition to human beings.

Intergroup Relations

Research on the psychology of intergroup relations has shown that people distinguish between ingroups (groups with which one identifies, or the ‘us’) and outgroups (groups with which we do not identify, or ‘them’) (Hewstone, Rubin, & Willis, 2002). Such thinking may even apply in the context of HRI where robots may be perceived as a non-living outgroup that is distinguished from the human ingroup. Such thinking is evident in how robots are often depicted in movies, news, and even among prominent scientists warning us about the dangers of artificial intelligence (Lewis, 2015; Waugh, 2015).

Research on intergroup relations has shown that people can perceive distinct sources of threat from an outgroup, including both realistic and symbolic threats. Realistic threat is a form of resource threat that concerns threats to material resources, safety, or physical wellbeing of the ingroup. Much psychological research has demonstrated that realistic threats (threats to resources, jobs, or safety) are an underlying factor of intergroup bias (LeVine & Campbell, 1972; Riek, Mania, & Gaertner, 2006; Stephan, Ybarra, & Bachman, 1999). Therefore, when robots are perceived as threatening human jobs, material resources, or safety, they may be seen as a realistic threat to humans. By contrast, identity threats refer to concerns over the ingroup’s uniqueness, values, and distinctiveness (Riek et al., 2006; Stephan et al., 1999). As people are motivated to see their own group as positively distinct from other outgroups (Tajfel & Turner, 1986), threats to the ingroup’s distinctiveness, uniqueness, or identity have been found to elicit prejudice and discrimination (Jetten, Spears, & Manstead, 1997, 1998; Yogeeswaran, Dasgupta, & Gomez, 2012). When robots are sufficiently humanlike and integrated into society, they may not only be perceived as a realistic threat to human jobs and resources but may also be perceived as threatening human identity by blurring the lines between what is human and what is machine. This has important implications for HRI, because if robots are considered too similar to humans in appearance or abilities, people may feel that human distinctiveness is threatened. This represents a different form of threat that may underlie reactions to robots.

Present Research

As most members of the public have never interacted with a real-life robot, their attitudes and beliefs about robots are largely shaped by media exposure (i.e., news, movies, and documentaries). Therefore, in the present research, we examine how media exposure to an allegedly new generation of robots can subtly shape both perceived realistic and identity threats to humans as well as support for robotics research. To do so, we manipulate the content of video clips so that participants are exposed to either a highly humanlike robot (an android) or a less humanlike robot (a humanoid). Additionally, they are either told that this new robot can outperform humans on various physical and mental tasks, or they are simply provided with no information about its ability relative to humans. We then measure the extent to which robots in general are perceived to be a realistic threat to human jobs, resources, and safety, or an identity threat to human uniqueness and distinctiveness. And finally, we assess people’s support versus opposition toward robotics research.

Pilot Study

Method

Participants. Eighty-five participants based in the United States were recruited from Amazon’s online Mechanical Turk (MTurk) in exchange for $1 USD. This sample included 53 males and 32 females between the ages 18–70 years (M = 36.42; SD = 13.75).

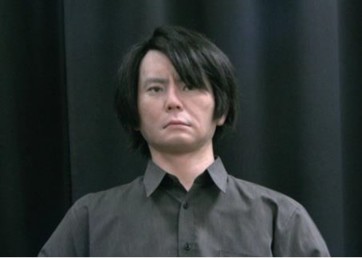

Manipulation. Participants were randomly assigned to watch a one-minute video clip of an interview with either (a) the Geminoid HI-2 robot (see Fig. 1), an android that is more anthropomorphic with hair, skin, and clothing that has been designed to replicate its human engineer; or (b) an identical interview with the NAO robot (see Fig. 2), a humanoid robot that has a human form and some shared characteristics but is easily distinguishable from a human. These robots were chosen as instantiations of humanoid versus android appearance, but do not represent the extreme ends on the human-likeness spectrum. However, according to the uncanny valley hypothesis (Mori, 1970) the choice of a humanoid and android should evoke a stronger effect than if we opted for a machine-like robot. The interviews with the robot were created specifically for the purpose of the study. The robot being interviewed was asked about its capabilities and proceeded to tell viewers of its ability to perform various physical tasks, including weight-lifting and tennis, as well as mental tasks, such as chess and problem-solving.

Figure 1. A screenshot from the video showing the Geminoid HI-2 robot

Figure 2. A screenshot from the video showing the NAO robot

The Geminoid HI-2 robot used in this study is one of few in the world, while the NAO is more easily available for research purposes; the recording was done to ensure that both robots appeared similar in size in the video (the background was plain color with no other objects visible). The voice recording of what the robot said was identical across both conditions.

Measures. Participants were asked to complete several measures assessing the extent to which they thought the robot in the video was (a) humanlike versus machine-like using a single item, where 1 = ‘very machine-like’ and 7 = ‘very humanlike’; (b) capable of performing physical tasks, including lifting heavy objects and playing sports using 3 items, where 1 = ‘not at all capable’ and 7 = ‘very much capable’ of performing the task (α = .76); and (c) capable of performing mental tasks, such as playing chess and solving puzzles using 2 items, where 1 = ‘not at all capable’ and 7 = ‘very much capable’ of performing the task (α = .86).
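As an illustration of how an internal-consistency coefficient of this kind is typically obtained, the sketch below computes Cronbach's alpha and a per-participant composite score. It assumes that the reported α denotes Cronbach's alpha; the source does not state the formula or software used, and the `ratings` values and the function name `cronbach_alpha` are hypothetical, not taken from the study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of per-participant item scores.

    responses: list of rows, one row per participant, one score per item.
    """
    k = len(responses[0])                       # number of items on the scale
    item_columns = list(zip(*responses))        # transpose rows into item columns
    item_variance_sum = sum(variance(col) for col in item_columns)
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical 7-point ratings (NOT study data): five participants, three items.
ratings = [
    [6, 5, 6],
    [4, 4, 5],
    [7, 6, 7],
    [3, 4, 3],
    [5, 5, 6],
]

alpha = cronbach_alpha(ratings)
# Composite score per participant: the mean of that participant's item ratings.
composite = [sum(row) / len(row) for row in ratings]
print(f"alpha = {alpha:.2f}")
print("composites:", [round(c, 2) for c in composite])
```

Averaging items into a composite only after checking α mirrors the order of operations described for the multi-item measures.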

Results and Discussion

A one-way ANOVA first revealed that the Geminoid robot (M = 5.10; SD = 1.24) was indeed perceived to be more humanlike than the NAO robot (M = 4.05; SD = 1.49), F(1, 83) = 12.55, p = .001, η² = .13. However, a one-way ANOVA revealed a non-significant difference between the perceived capability of the Geminoid (M = 5.66; SD = 0.97) and NAO (M = 5.71; SD = 0.97) robots to perform various physical tasks, including lifting heavy objects and playing sports, F(1, 83) < 1, p = .78, η² = .001. Similarly, a one-way ANOVA revealed a non-significant difference between the perceived capability of the Geminoid (M = 6.04; SD = 1.03) and NAO (M = 5.81; SD = 1.02) robots to perform various mental tasks, such as playing chess and solving puzzles, F(1, 83) = 1.06, p = .31, η² = .01.

Based on these findings, it appears that the Geminoid robot was indeed perceived to be more humanlike than the NAO robot. However, no significant differences were found in the perceived ability of both the Geminoid and NAO robots to perform various physical and mental tasks.
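The effect sizes reported for the pilot can be cross-checked against the F statistics. The sketch below uses the standard identity for a one-way ANOVA, η² = (F × df_effect) / (F × df_effect + df_error). The function name `eta_squared_from_f` is introduced here for illustration only, and the source does not say how the authors computed η².

```python
def eta_squared_from_f(f_value, df_effect, df_error):
    """Recover eta squared from an F statistic and its degrees of freedom.

    In a one-way ANOVA this equals SS_between / SS_total; in a factorial
    design the same expression gives partial eta squared.
    """
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and degrees of freedom as reported for the pilot study.
reported = {
    "humanlikeness": (12.55, 1, 83),
    "mental tasks": (1.06, 1, 83),
}

for label, (f_value, df_effect, df_error) in reported.items():
    eta_sq = eta_squared_from_f(f_value, df_effect, df_error)
    print(f"{label}: eta squared = {eta_sq:.2f}")
```

Both recomputed values round to the effect sizes reported above (.13 and .01).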

Main Study

Method

Participants. Three hundred and thirty-one participants were recruited using MTurk in exchange for $2 USD. Of these participants, 20 failed to complete the study or finished in less than 6 minutes, implying that they had not watched the video manipulation (the video itself was over 4 minutes in length, making it impossible to finish the entire study within 6 minutes). This left a final sample of 311 participants (188 females, 123 males). All participants were based in the United States and between the ages of 18–73 years (M = 37.51; SD = 11.65). We intentionally recruited a large sample of about 80 participants per condition, because psychological scientists are increasingly emphasizing the importance of utilizing sufficiently large samples for conducting higher quality research (e.g., Asendorpf et al., 2013; Resnick, 2016).

Manipulations. Four videos were specifically constructed for the purpose of this study to represent the four experimental conditions. These videos were designed to appear like a documentary and lasted approximately four minutes in total length (including the one-minute interview detailed below). Participants watched a series of robots doing a variety of tasks, such as cleaning, driving, and looking after the elderly. The video footage for this segment was taken from real footage of robots available in the public domain. During these visuals, we superimposed a male American voice that documented the recent advances in robotics and the likely future of robotics. Only two aspects of the video were manipulated between-subjects to represent the two independent variables of interest.

To introduce the physical anthropomorphism manipulation, participants either watched (a) a one-minute video clip of an interview with an android robot, Geminoid HI-2 (see Fig. 1) or (b) a one-minute video clip of an identical interview with a humanoid robot, NAO (see Fig. 2). Both robots showed similarly subtle head movements in order not to appear as a still image. In order to manipulate the second independent variable of robot ability, the robot being interviewed was asked about its capabilities and proceeded to describe them. The robot (during the interview) and narrator (after the interview) told viewers either that (a) these new robots (such as the one they watched in the interview) could outperform humans on physical tasks, such as weight-lifting and tennis, and on mental tasks, such as chess and problem-solving (i.e., outperforms humans) or that (b) the new generation of robots they saw (including the one interviewed) could perform the same tasks (weight-lifting, tennis, chess, and problem-solving), with no information on its ability relative to humans (i.e., no comparative information on ability).

Procedure. Participants were recruited online via MTurk. Participants read an information and consent sheet that outlined the purpose of the study after which they offered consent to participate by checking a required field on the web survey. Participants initially answered demographic questions pertaining to their gender, age, and ethnicity. Once these were completed, participants were randomly assigned to watch one of the four video clips: Geminoid + outperforms humans (86 participants); Geminoid + no information on comparative ability (71 participants); NAO + outperforms humans (80 participants); NAO + no comparative information on ability (72 participants). Participants then completed the measures of realistic and identity threat. Finally, participants completed the policy support measure before being probed for suspicion and debriefed. The study was fully reviewed and approved by the Human Ethics Committee at the University of Canterbury.

Materials

Realistic Threat. Participants completed a five-item measure of the extent to which they perceived robots to pose a realistic threat to humans. These items were adapted from previous research using ethnic and national groups (Stephan et al., 1999). Sample items included: “The increased use of robots in our everyday life is causing more job losses for humans,” and “In the long run, robots pose a direct threat to human safety and wellbeing.” These items were measured on a 7-point scale from strongly disagree (1) to strongly agree (7).

Identity Threat. Participants also completed a five-item measure of the extent to which they perceived robots to pose a threat to human identity and distinctiveness. These items were adapted from previous research using ethnic and national groups (Yogeeswaran & Dasgupta, 2014). Sample items included: “Recent advances in robotics technology are challenging the very essence of what it means to be human,” and “Technological advancements in the area of robotics are threatening to human uniqueness.” These items were measured on a 7-point scale from strongly disagree (1) to strongly agree (7).

Support for Robotics Research. Participants read a brief paragraph detailing the creation of the National Robotics Initiative, which was started by the U.S. government through support from the Department of Defense (DoD), National Science Foundation (NSF), National Institutes of Health (NIH), and the National Aeronautics and Space Administration (NASA). After a short description of the initiative, participants were asked to indicate their support for the program and robotics research in general using two items: “How much do you support this initiative?” and “How much do you support the use of tax payer dollars for robotics research?” Participants responded to both items using a 10-point Likert-scale from extremely oppose (1) to extremely favor (10).

Design

The experiment employed a 2 (Physical anthropomorphism: android vs. humanoid appearance) x 2 (Ability: Outperforms humans vs. No information on performance relative to humans) between-subjects design. Participants were randomly assigned to one of these four video conditions. There were three dependent variables in the study, including realistic threat, identity threat, and policy support.

Results and Discussion

Realistic Threat.

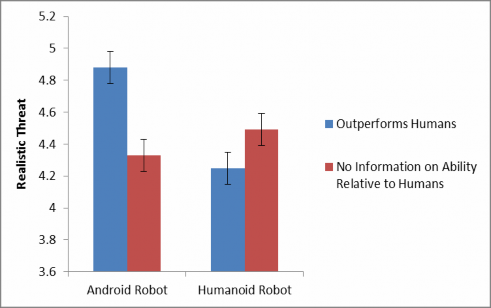

A composite measure of realistic threat was created by averaging all five items on the scale after ensuring that it had strong internal consistency (α = .82). Using this measure, a two-way ANOVA revealed a significant interaction between the physical anthropomorphism of the robot and its ability on perceived realistic threat, F(1, 305) = 6.99, p = .009, η² = .02 (see Fig. 3). Post-hoc tests using Sidak adjustments for multiple comparisons revealed that participants who saw the android in the video earlier (Geminoid HI-2; M = 4.88; SD = 1.29) perceived robots, in general, to be a greater realistic threat to human jobs, safety, and resources than those who saw the humanoid robot (NAO; M = 4.25; SD = 1.21), but only if they were also told that the new generation of robots could outperform humans on various physical and mental tasks, F(1, 305) = 9.63, p = .002. When participants were not explicitly provided with any information about this new generation of robots’ ability relative to humans, then no significant differences were found on perceived threat to human jobs, safety, and resources, regardless of whether participants had seen the android (Geminoid HI-2; M = 4.33; SD = 1.43) or humanoid robot (NAO; M = 4.49; SD = 1.32), F < 1, p = .47.

Examining the data another way, post-hoc tests using Sidak adjustments for multiple comparisons revealed that when participants had been exposed to an android robot (Geminoid HI-2) earlier, they perceived robots, in general, to pose significantly greater realistic threats to humans when they were also told that a new generation of robots could outperform humans on several physical and mental tasks (M = 4.88; SD = 1.29) relative to when they were simply told a new generation of robots could perform those very same tasks (M = 4.33; SD = 1.43), F(1, 305) = 6.96, p = .009. By contrast, when participants were exposed to a humanoid robot (NAO), no significant differences were found in perceived realistic threat, regardless of whether participants were told that a new generation of robots could outperform humans (M = 4.25; SD = 1.21) or not (M = 4.49; SD = 1.32), F(1, 305) = 1.23, p = .27.

Figure 3. Threat to human jobs, resources, and safety (realistic threat)
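The realistic-threat interaction can be approximately reconstructed from the cell statistics reported above. The sketch below treats the 2 x 2 interaction and one simple effect as single-degree-of-freedom contrasts on the cell means, tested against the pooled within-cell variance. It is a rough check under stated assumptions, not the authors' procedure: it assumes the cell sizes listed in the Procedure apply to this measure, it works from means and SDs rounded to two decimals, and it ignores the Sidak adjustment (which affects p values, not F). All function and variable names are illustrative.

```python
# (n, mean, sd) per cell: n from the Procedure, M and SD from the text above.
cells = {
    ("android", "outperforms"): (86, 4.88, 1.29),
    ("android", "no_info"): (71, 4.33, 1.43),
    ("humanoid", "outperforms"): (80, 4.25, 1.21),
    ("humanoid", "no_info"): (72, 4.49, 1.32),
}


def pooled_error(cells):
    """Pooled within-cell variance (mean square error) and its df."""
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in cells.values())
    df_error = sum(n for n, _, _ in cells.values()) - len(cells)
    return ss_within / df_error, df_error


def contrast_f(cells, weights):
    """F(1, df_error) for a linear contrast on the cell means."""
    mse, df_error = pooled_error(cells)
    estimate = sum(w * cells[key][1] for key, w in weights.items())
    contrast_var = mse * sum(w ** 2 / cells[key][0] for key, w in weights.items())
    return estimate ** 2 / contrast_var, df_error


# Interaction: (android - humanoid | outperforms) - (android - humanoid | no info).
interaction = {
    ("android", "outperforms"): 1,
    ("humanoid", "outperforms"): -1,
    ("android", "no_info"): -1,
    ("humanoid", "no_info"): 1,
}
# Simple effect of robot type when robots are said to outperform humans.
simple_outperforms = {
    ("android", "outperforms"): 1,
    ("humanoid", "outperforms"): -1,
}

f_interaction, df_error = contrast_f(cells, interaction)
f_simple, _ = contrast_f(cells, simple_outperforms)
print(f"interaction: F(1, {df_error}) = {f_interaction:.2f}")
print(f"android vs. humanoid, outperforms: F(1, {df_error}) = {f_simple:.2f}")
```

The recomputed values fall close to, but not exactly on, the reported F(1, 305) = 6.99 and F(1, 305) = 9.63, which is expected when working from rounded summary statistics.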

Identity Threat.

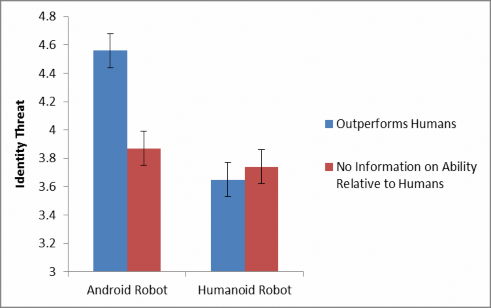

A composite measure of identity threat was created by averaging all five items on the scale after ensuring that it had strong internal consistency (α = .91). Identity threat and realistic threat were significantly correlated, r(306) = 0.65, p < .01, but conceptually and empirically, there is justification to treat these as separate constructs. A two-way ANOVA revealed a significant interaction of the robot’s physical anthropomorphism and ability on perceived identity threat, F(1, 303) = 4.66, p = .03, η² = .02 (see Fig. 4). Post-hoc tests using Sidak adjustments for multiple comparisons revealed that participants who saw the android (Geminoid HI-2; M = 4.56, SD = 1.55) perceived robots in general to be a greater threat to human identity and distinctiveness than those who saw the humanoid (NAO; M = 3.65, SD = 1.53), but this effect emerged only when they were also told that robots could outperform humans on various physical and mental tasks, F(1, 303) = 13.49, p < .001. When no information was provided about this new generation of robots’ ability relative to humans, then no significant differences were found in participants’ perceived threat, regardless of whether participants were exposed to the android (Geminoid HI-2; M = 3.87, SD = 1.65) or humanoid robots (NAO; M = 3.74, SD = 1.59), F < 1, p = .63.

Looking at the data another way, post-hoc tests using Sidak adjustments for multiple comparisons revealed that after participants had watched an interview with an android robot (Geminoid HI-2), they perceived robots to be a significantly greater threat to human identity when also told that a new generation of robots could outperform humans (Geminoid HI-2; M = 4.56; SD = 1.55) relative to when no information was provided on its ability relative to humans (M = 3.87; SD = 1.65), F(1, 303) = 7.31, p = .007. However, when participants were exposed to a humanoid robot (NAO), no significant differences were found in their perceived threat to human identity, regardless of whether they were told that a new generation of robots could outperform humans (M = 3.65; SD = 1.53) or not (M = 3.74; SD = 1.59), F < 1, p = .72.

Figure 4. Threat to human uniqueness and distinctiveness (identity threat)

Support for Robotics Research.

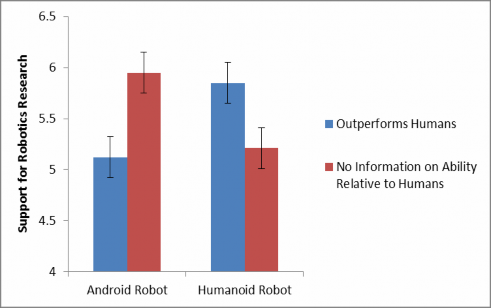

Support for robotics research was calculated by combining the two items described earlier after ensuring they had strong internal consistency (α = .87). This measure of policy support was significantly correlated with both realistic threat, r(307) = -0.45, p < .01, and identity threat, r(305) = -0.30, p < .01, but the relation appears only weak to moderate in strength. A two-way ANOVA revealed a significant interaction of physical anthropomorphism of the robot and ability on participants’ support for robotics research, F(1, 306) = 7.10, p = .008, η² = .02 (see Fig. 5). Post-hoc tests using Sidak adjustments for multiple comparisons revealed that exposure to the android (Geminoid HI-2; M = 5.12; SD = 2.50) produced lower support for robotics research than exposure to the humanoid (NAO; M = 5.85; SD = 2.27), but only when participants were also told that these robots could outperform humans on various physical and mental tasks, F(1, 306) = 3.84, p = .05. By contrast, when participants were not provided with any information about robots’ ability relative to humans, then participants showed marginally greater support for robotics research after seeing the android (Geminoid HI-2; M = 5.94; SD = 2.57) than after seeing the humanoid robot (NAO; M = 5.21; SD = 2.22), F(1, 306) = 3.30, p = .07.

Exploring the data another way, post-hoc tests using Sidak adjustments for multiple comparisons revealed that when participants were exposed to the android robot (Geminoid HI-2) earlier, being told that a new generation of robots could outperform humans elicited reduced support for robotics research relative to being told that these new robots could simply perform those very same tasks, F(1, 306) = 4.46, p = .04. However, when participants were exposed to a humanoid robot (NAO), being told that a new generation of robots could outperform humans on various physical and mental tasks (M = 5.85; SD = 2.27) did not significantly alter support for robotics research, F(1, 306) = 2.74, p = .10, relative to simply being told that these robots could perform various physical and mental tasks (M = 5.21; SD = 2.22).

Figure 5. Support for robotics research (policy support)

General Discussion

The present research examined the interactive effects of a robot’s physical anthropomorphism and its ability relative to humans on the extent to which people perceive robots in general to be a realistic and identity threat to human beings. We also extended this to examine the impact of these factors on support for robotics research. As most people in the general population have not interacted with a real-life robot and mostly form their opinions and attitudes toward robots based on media exposure, in the present work, we examined how media exposure to robots that vary in their degree of physical anthropomorphism and ability influences the perceived threat of robots and people’s support for robotics research.

To examine these questions, a large sample of American participants were randomly assigned to watch a video depicting either a particularly humanlike android robot (Geminoid HI-2) or a less humanlike humanoid robot (NAO). Participants were then either told that this new generation of robots could perform a range of physical and mental tasks, or specifically, that they could outperform humans on those very same tasks. Data revealed that when participants were told that robots could outperform humans on various physical and mental tasks, then exposure to an android robot elicited greater perceived threat than exposure to a humanoid robot. However, when participants were not provided with any specific information about the robot’s ability relative to humans, then there was no significant difference in perceived threat to either the humanoid or android robots.

While these findings generally fit with previous research suggesting that robot anthropomorphism affects people’s evaluations of robots (Riek, Rabinowitch, Chakrabarti, & Robinson, 2009; Rosenthal-von der Pütten & Krämer, 2014; Złotowski et al., 2015), they provide insight into one potential moderator of these effects by demonstrating that exposure to an android robot can backfire when people are simultaneously told that such robots are capable of outperforming humans on physical and mental tasks. By contrast, when a robot is less humanlike (i.e., humanoid in appearance), its ability relative to humans has little effect on how people perceive it.

Our data also suggest that beyond perceived threat, people show decreased support for funding robotics research when they are exposed to an android that can outperform humans relative to a humanoid robot that can outperform humans. However, when participants have no such information about the robot’s ability relative to humans, they may even show the opposite effect, such that they marginally support robotics research to a greater extent when they are exposed to an android relative to a humanoid robot. The use of such a dependent measure is a new contribution to the literature and offers insight into how the general public may feel about the government’s use of taxpayer dollars for research.

Collectively, these findings offer some insight into when people may be more or less accepting of physically anthropomorphic robots. As detailed before, previous research has shown that the physical anthropomorphism of robots can sometimes lead to positive outcomes, while at other times, it may lead to negative outcomes (Bartneck et al., 2010; Bartneck, Kanda, et al., 2007; Bethel et al., 2009; Fasola & Matarić, 2012; Feil-Seifer & Matarić, 2011; Giullian et al., 2010; Gong, 2008; Kanda et al., 2005; Syrdal et al., 2008; Wade et al., 2011). Our results suggest that very humanlike robots may be perceived to be especially threatening to people when the robot is believed to be capable of outperforming humans, but such anthropomorphism may be less influential when the robot is not believed to be especially capable. Moreover, the present findings suggest that robots may be perceived as threatening to not only human jobs, resources, and safety, but also to human distinctiveness and identity.

Why are very humanlike robots with high capabilities especially threatening to humans? It is possible that it is the result of science fiction movies that often present apocalyptic futures where androids fight against humans (e.g., The Terminator; I, Robot; Ex Machina; and Blade Runner). Androids that can also outperform humans could be difficult to distinguish from human beings and yet outdo humans. In the case of a potential conflict, such robots would be especially threatening to human survival. Furthermore, they require humans to redefine what it means to be a human, as neither appearance nor ability could be used to make a distinction in the case of androids outperforming humans. Humanoids that outperform humans, on the other hand, may be perceived as more functional and therefore assumed to be under human control and posing less of a threat.

Contrary to our expectations, performance information on its own did not affect the variables of interest. However, this may be because the present research examined how exposure to media depicting robots with varying levels of ability relative to humans influenced perceived threat of robots and support for robotics research. Such an approach is different from previous work that has mostly examined reactions to a specific robot that one interacts with in real life, while the present work is focused upon how a robot’s physical anthropomorphism interacts with its ability relative to humans to influence people’s reactions toward such technology.

Implications

One implication of the present research is that similar to intergroup relations between human groups, people may be threatened by outgroups even when they are non-living. Perhaps even more telling is that people can perceive robots to be both realistic threats to human safety, jobs, and resources, as well as identity-based threats to human uniqueness and distinctiveness. In the context of the present research, we find that greater similarity to one’s own group can be especially threatening to ingroup distinctiveness similar to how this may emerge with human social groups (see reactive distinctiveness; Spears, Jetten, & Scheepers, 2002). Similarly, the ability to outperform the ingroup can be threatening even when the ingroup is outperformed by a non-living ‘outgroup.’ Research suggests that highly competent groups can pose a realistic threat to the ingroup (Maddux, Galinsky, Cuddy, & Polifroni, 2008). In the present research, we find that strong human-likeness in a robot (greater similarity in appearance to humans) is especially threatening when robots are believed to be simultaneously capable of outperforming humans. It is unclear if these findings would translate to intergroup relations in the context of human social groups given the many differences from human-robot relations, but it raises one avenue for future exploration.

Another interesting avenue for future work would be to examine whether people’s reactions to robots and opposition to robotics research would be even greater if they perceive the future to have greater competition for scarce resources. Previous research in the psychology of intergroup relations has shown that competition for scarce resources fuels intergroup conflict (Sherif, Harvey, White, Hood, & Sherif, 1961). Moreover, recent research suggests that economic threat that produces generalized anxiety about one’s future can produce greater prejudice toward groups that are stereotyped as being highly competent (Butz & Yogeeswaran, 2011). Therefore, making salient the threat of a future with fewer resources or increased job losses may heighten negative reactions toward robots, especially if they are believed to be capable of outperforming humans.

In relation to the uncanny valley hypothesis (Mori, 1970), our results suggest that future research should consider perceived abilities of robots as a factor influencing people’s reactions toward robots. It is possible that identity and realistic threats are responsible for the strongly negative feelings experienced by people when they face highly humanlike robots. Humanlike robots can evoke higher expectations regarding their capabilities compared with less humanlike robots, and these expectations can moderate the uncanny valley effect. Therefore, measuring not only the objective human-likeness of a robot but also the subjective perception of robots’ abilities can provide new insight.

Results of the present research also bring several important contributions for the HRI community and robot design choices. Researchers applying for funding to conduct research on humanlike robots with high capabilities might need to address the potential threat that these robots pose to human identity. Based on recent comments by prominent figures in the news about the dangers of artificial intelligence (AI) and technology getting out of hand (Lewis, 2015; Waugh, 2015), people may be especially concerned about robots that look like us and are also able to outperform us. Perhaps people perceive a less humanlike robot as more of a machine and therefore do not perceive it to be a direct threat to human distinctiveness (provided the robot can simultaneously outperform humans).

Furthermore, in the broader context, androids outperforming humans might be rejected by customers if they are developed without means that can be used to easily distinguish them from humans. Therefore, it is worthwhile investigating whether humanlike robots that outperform humans, but can still be easily distinguished from humans, pose the same threat as robots that are virtually indistinguishable. In the book ‘Do Androids Dream of Electric Sheep?’ a device called Voigt-Kampff was proposed to distinguish replicants from humans based on their physiological reactions in response to emotionally provocative questions. Developing a similar test or machine that is easily available to humans might be required in the future once the technology allows us to create androids physically indistinguishable from humans.

Limitations and Future Work

In the presented experiment, we used only two robots, Geminoid HI-2 and NAO. Future research should use a broader range of robot appearances to examine whether perceived threat and support for robotics research vary as a function of whether the robot is very machine-like as opposed to very humanlike. In the present research, we have focused on only two robots that vary only partially in their degree of humanlike appearance (i.e., the Geminoid is a very humanlike android, while the NAO robot is less humanlike and is a humanoid). However, it would be beneficial to take this further and examine reactions to a non-humanoid robot that is quite machine-like as well. Would a sufficiently machine-like robot that outperforms humans be perceived as completely non-threatening because it looks too much like an appliance (i.e., people may think of it in terms of functionality)? Such a machine-like robot is unlikely to pose any threat to human identity as it would be categorized as simply that: a machine. Future work would benefit from examining the interactive effects of robot appearance and ability with a broader spectrum of physical anthropomorphism. Relatedly, future work would also benefit from investigating whether there are other dimensions that affect the threat posed by robots. As with any comparison involving different types of robots, ensuring that they differ only along one dimension (e.g., human-likeness) is not possible. Therefore, future studies should control for differences on other potential dimensions, such as perceived friendliness or repulsion.

Future research should also investigate whether the presented results are culture-specific or universal. It is possible that humanlike robots that outperform humans could be more accepted in some cultures than others. Past research has shown that culture determines preferences toward androids (Bartneck, 2008; Haring et al., 2014; Lee & Sabanović, 2014), and it is possible that the observed effect in our study would be even stronger with participants from cultures that have unfavorable attitudes toward androids in the first place. Arguably, robots have the potential to revolutionize the way humans go about their daily business, but ultimately, this is only possible through careful consideration of how to implement such a radical change in society. This study revealed that the degree of anthropomorphism of a robot can impact how threatening robots, in general, are perceived to be for humans; however, this effect is moderated by perceived ability of robots relative to humans. Nevertheless, future research is needed to ascertain whether or not a robot’s capabilities in other domains and during live HRI will interact with anthropomorphism and negatively influence people’s evaluations of robots.

Another interesting direction for future research is to investigate how the possibility of interacting with humanlike robots affects people's views of them. As the present research exposed participants to videos of two different robots with varying ability levels relative to humans, it is important to examine whether such effects would emerge following real-life interaction with similar robots possessing varied abilities. From our previous work, we know that people can get used to the unfamiliar appearance of androids after a few interactions (Złotowski et al., 2015). Moreover, workers who worked together with a robot that had some human-like features held a more favorable view of it (Sauppé & Mutlu, 2015). Although that robot was distinctly different from a human, it is possible that an android that helped a human would also evoke a positive reaction.

Furthermore, this study did not distinguish between mental and physical skills of a robot. Future investigation is needed to explore whether the ability of highly humanlike robots to outperform humans on mental tasks is more threatening than outperformance on physical tasks (or whether, indeed, it is only the combination that is threatening). This study could assist roboticists in building better robots for integration into society that limit negative backlash from perceived threats to human resources and identity, making the transition of such technology into our everyday lives as smooth as possible.

Acknowledgements

We are very thankful to Dr. Andy Martens who did the voice recordings for the videos used in this study. We are also very grateful to Mitchell Adair for putting together video and audio stimuli in order to create the videos that served as the independent variables in the study. This study would not have been possible without both these important contributions.

References

- Aggarwal, P., & McGill, A. L. (2007). Is that car smiling at me? Schema congruity as a basis for evaluating anthropomorphized products. Journal of Consumer Research, 34(4), 468–479.

- Asendorpf, J. B., Conner, M., De Fruyt, F., De Houwer, J., Denissen, J. A., Fiedler, K., . . . Wicherts, J. M. (2013). Recommendations for increasing replicability in psychology. European Journal of Personality, 27(2), 108–119. doi:10.1002/per.1919

- Bartneck, C. (2008). Who like androids more: Japanese or US Americans? In Proceedings of the 17th IEEE International Symposium on Robot and Human Interactive Communication, RO-MAN (pp. 553–557). Munich, Germany.

- Bartneck, C., Bleeker, T., Bun, J., Fens, P., & Riet, L. (2010). The influence of robot anthropomorphism on the feelings of embarrassment when interacting with robots. Paladyn, 1–7.

- Bartneck, C., Kanda, T., Ishiguro, H., & Hagita, N. (2007). Is the uncanny valley an uncanny cliff? In Proceedings of the IEEE International Workshop on Robot and Human Interactive Communication (pp. 368–373). Jeju, Republic of Korea. doi:10.1109/ROMAN.2007.4415111

- Bartneck, C., Verbunt, M., Mubin, O., & Al Mahmud, A. (2007). To kill a mockingbird robot. In Proceedings of the 2007 ACM/IEEE Conference on Human-Robot Interaction (HRI 2007): Robot as Team Member (pp. 81–87). Arlington, VA.

- Bethel, C. L., Salomon, K., & Murphy, R. R. (2009). Preliminary results: Humans find emotive non-anthropomorphic robots more calming. In Proceedings of the 4th ACM/IEEE International Conference on Human-Robot Interaction (HRI 2009) (pp. 291–292). San Diego, CA.

- Butz, D. A., & Yogeeswaran, K. (2011). A new threat in the air: Macroeconomic threat increases prejudice against Asian Americans. Journal of Experimental Social Psychology, 47(1), 22–27.

- Duffy, B. R. (2003). Anthropomorphism and the social robot. Robotics and Autonomous Systems, 42(3-4), 177–190.

- Fasola, J., & Matarić, M. J. (2012). Using socially assistive human-robot interaction to motivate physical exercise for older adults. In Proceedings of the IEEE—Special Issue on Quality of Life Technology, T. Kanade, ed. Vol. 100(August), pp. 2512–2526. Piscataway, NJ.

- Feil-Seifer, D., & Matarić, M. J. (2011). Automated detection and classification of positive vs. negative robot interactions with children with autism using distance-based features. In Proceedings of the 6th ACM/IEEE International Conference on Human-Robot Interaction (HRI 2011), pp. 323–330. Lausanne, Switzerland.

- Giullian, N., Ricks, D., Atherton, A., Colton, M., Goodrich, M., & Brinton, B. (2010). Detailed requirements for robots in autism therapy. In Proceedings of the IEEE International Conference on Systems, Man and Cybernetics. (pp. 2595–2602). Istanbul, Turkey.

- Goetz, J., Kiesler, S., & Powers, A. (2003). Matching robot appearance and behavior to tasks to improve human-robot cooperation. In Proceedings of the 12th IEEE International Workshop on Robot and Human Interactive Communication (ROMAN 2003) (pp. 55–60). doi:10.1109/ROMAN.2003.1251796

- Gray, K., & Wegner, D. M. (2012). Feeling robots and human zombies: Mind perception and the uncanny valley. Cognition, 125(1), 125–130.

- Haring, K. S., Silvera-Tawil, D., Matsumoto, Y., Velonaki, M., & Watanabe, K. (2014). Perception of an android robot in Japan and Australia: A cross-cultural comparison. In M. Beetz, B. Johnston, & M.-A. Williams (Eds.), Social Robotics (pp. 166–175). Springer International Publishing: Sydney, Australia.

- Hancock, P.A., Billings, D.R., Schaefer, K. E., Chen, J.Y.C., de Visser, E.J., & Parasuraman, R. (2011). A meta-analysis of factors affecting trust in human-robot interaction. Human Factors, 53(5), 517–527.

- Hewstone, M. H., Rubin, M., & Willis, H. (2002). Intergroup bias. Annual Review of Psychology, 53, 575–604.

- Jetten, J., Spears, R., & Manstead, A. R. (1998). Defining dimensions of distinctiveness: Group variability makes a difference to differentiation. Journal of Personality and Social Psychology, 74(6), 1481–1492. doi:10.1037/0022-3514.74.6.1481

- Jetten, J., Spears, R., & Manstead, A. R. (1997). Distinctiveness threat and prototypicality: Combined effects on intergroup discrimination and collective self-esteem. European Journal of Social Psychology, 27(6), 635–657.

- Kanda, T., Miyashita, T., Osada, T., Haikawa, Y., & Ishiguro, H. (2005). Analysis of humanoid appearances in human-robot interaction. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2005), (pp.62–69). Edmonton, AB, Canada.

- Kätsyri, J., Förger, K., Mäkäräinen, M., & Takala, T. (2015). A review of empirical evidence on different uncanny valley hypotheses: Support for perceptual mismatch as one road to the valley of eeriness. Frontiers in Psychology, 6, 390. doi:10.3389/fpsyg.2015.00390

- Kiesler, S., & Hinds, P. (2004). Introduction to this special issue on human-robot interaction. Human-Computer Interaction, 19(1), 1–8.

- King, R. D., Whelan, K. E., Jones, F. M., & Philip, G. K. (2004). Functional genomic hypothesis generation and experimentation by a robot scientist. Nature, 427, 247–252.

- Lee, H. R., & Sabanović, S. (2014). Culturally variable preferences for robot design and use in South Korea, Turkey, and the United States. In Proceedings of the 2014 ACM/IEEE International Conference on Human-robot Interaction (pp. 17–24). New York, NY: ACM. doi:10.1145/2559636.2559676

- LeVine, R. A., & Campbell, D. T. (1972). Ethnocentrism: Theories of conflict, ethnic attitudes, and group behavior. Oxford, England: John Wiley & Sons.

- Lewis, T. (2015). Don’t let artificial intelligence take over, top scientists warn. Live Science. Retrieved from: http://www.livescience.com/49419-artificial-intelligence-dangers-letter.html

- Lohse, M. (2011). Bridging the gap between users’ expectations and system evaluations. In Proceedings of the IEEE International Workshop on Robot and Human Interactive Communication (pp. 485–490). Atlanta, GA.

- MacDorman, K. F., & Chattopadhyay, D. (2016). Reducing consistency in human realism increases the uncanny valley effect; increasing category uncertainty does not. Cognition, 146, 190–205. doi:10.1016/j.cognition.2015.09.019

- MacDorman, K. F., & Ishiguro, H. (2006). The uncanny advantage of using androids in cognitive and social science research. Interaction Studies, 7(3), 297–337.

- MacDorman, K. F., Srinivas, P., & Patel, H. (2013). The uncanny valley does not interfere with level 1 visual perspective taking. Computers in Human Behavior, 29(4), 1671–1685.

- MacDorman, K. F., Vasudevan, S. K., & Ho, C.-C. (2008). Does Japan really have robot mania? Comparing attitudes by implicit and explicit measures. AI & SOCIETY, 23(4), 485–510. doi:10.1007/s00146-008-0181-2

- Maddux, W. W., Galinsky, A. D., Cuddy, A. J., & Polifroni, M. (2008). When being a model minority is good…and bad: Realistic threat explains negativity toward Asian Americans. Personality and Social Psychology Bulletin, 34(1), 74–89.

- Mori, M. (1970). The uncanny valley. Energy, 7(4), 33–35.

- Resnick, B. (2016). What psychology’s crisis means for the future of science. Vox. Retrieved from: http://www.vox.com/2016/3/14/11219446/psychology-replication-crisis

- Riek, L. D., Rabinowitch, T. C., Chakrabarti, B., & Robinson, P. (2009). Empathizing with robots: Fellow feeling along the anthropomorphic spectrum. In Proceedings of the 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops, (ACII 2009) (pp. 1–6). doi:10.1109/ACII.2009.5349423

- Riek, B. M., Mania, E. W., & Gaertner, S. L. (2006). Intergroup threat and outgroup attitudes: A meta-analytic review. Personality and Social Psychology Review, 10(4), 336-353. doi:10.1207/s15327957pspr1004_4

- Ripley, W. (2014). Domo arigato, Mr Roberto: Japan’s robot revolution. CNN. Retrieved from: http://edition.cnn.com/2014/07/15/world/asia/japans-robot-revolution

- Rosenthal-von der Pütten, A. M., & Krämer, N. C. (2014). How design characteristics of robots determine evaluation and uncanny valley related responses. Computers in Human Behavior, 36, 422–439. doi:10.1016/j.chb.2014.03.066

- Rosenthal-von der Pütten, A. M., & Krämer, N. C. (2015). Individuals’ evaluations of and attitudes towards potentially uncanny robots. International Journal of Social Robotics, 7(5), 799–824. doi:10.1007/s12369-015-0321-z

- Sauppé, A., & Mutlu, B. (2015). The social impact of a robot co-worker in industrial settings. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (pp. 3613–3622). New York, NY: ACM. doi:10.1145/2702123.2702181

- Saygin, A. P., Chaminade, T., Ishiguro, H., Driver, J., & Frith, C. (2012). The thing that should not be: Predictive coding and the uncanny valley in perceiving human and humanoid robot actions. Social Cognitive and Affective Neuroscience, 7(4), 413–422. doi:10.1093/scan/nsr025

- Sherif, M., Harvey, O. J., White, J., Hood, W. R., & Sherif, C. W. (1961). Intergroup conflict and cooperation: The Robbers Cave experiment. Norman, OK: University of Oklahoma Book Exchange.

- Spears, R., Jetten, J., & Scheepers, D. (2002). Distinctiveness and the definition of collective self: A tripartite model. In A. Tesser, D. A. Stapel, & J. V. Wood (Eds.), Self and motivation: Emerging psychological perspectives (pp. 141–171). Lexington, KY: American Psychological Association.

- Stephan, W. G., Ybarra, O., & Bachman, G. (1999). Prejudice toward immigrants. Journal of Applied Social Psychology, 29(11), 2221-2237. doi:10.1111/j.1559-1816.1999.tb00107.x

- Stewart, J. (2011). Ready for the robot revolution? BBC News. Retrieved from: http://www.bbc.com/news/technology-15146053

- Syrdal, D. S., Dautenhahn, K., Walters, M. L., & Koay, K. L. (2008). Sharing spaces with robots in a home scenario: Anthropomorphic attributions and their effect on proxemic expectations and evaluations in a live HRI trial. In Proceedings of the AAAI Fall Symposium – Technical Report (Vol. FS-08–02, pp. 116–123). Arlington, VA.

- Tajfel, H., & Turner, J. (1986). The social identity theory of intergroup behavior. In S. Worchel & W. G. Austin (Eds.), The Psychology of Intergroup Relations (pp. 7–25). Chicago, IL: Nelson-Hall.

- Wade, E., Parnandi, A. R., & Matarić, M. J. (2011). Using socially assistive robotics to augment motor task performance in individuals post-stroke. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems (pp. 2403–2408). San Francisco, CA.

- Wang, S., Lilienfeld, S. O., & Rochat, P. (2015). The uncanny valley: Existence and explanations. Review of General Psychology, 19(4), 393–407. doi:10.1037/gpr0000056

- Wang, L., Rau, P.-L. P., Evers, V., Robinson, B. K., & Hinds, P. (2010). When in Rome: The role of culture and context in adherence to robot recommendations. In Proceedings of the 5th ACM/IEEE International Conference on Human-robot Interaction (pp. 359–366). Piscataway, NJ: IEEE Press. Retrieved from http://dl.acm.org/citation.cfm?id=1734454.1734578

- Waugh, R. (2015). Stephen Hawking warns of the danger of ‘intelligent’ robots. Metro, UK. Retrieved from: http://metro.co.uk/2015/01/13/stephen-hawking-warns-of-the-dangers-of-intelligent-robots-5020270

- Yogeeswaran, K., & Dasgupta, N. (2014). The devil is in the details: Abstract versus concrete construals of multiculturalism differentially impact intergroup relations. Journal of Personality and Social Psychology, 106(5), 772–789. doi:10.1037/a0035830

- Yogeeswaran, K., Dasgupta, N., & Gomez, C. (2012). A new American dilemma? The effect of ethnic identification and public service on the national inclusion of ethnic minorities. European Journal of Social Psychology, 42(6), 691–705. doi:10.1002/ejsp.1894

- Złotowski, J., Sumioka, H., Nishio, S., Glas, D., Bartneck, C., & Ishiguro, H. (2015). Persistence of the uncanny valley: Influence of repeated interactions and a robot’s attitude on its perception. Frontiers in Cognitive Science, 6(883), 1–13. doi:10.3389/fpsyg.2015.00883

- Złotowski, J., Proudfoot, D., & Bartneck, C. (2013). More human than human: Does the uncanny curve really matter? In Proceedings of the 8th ACM/IEEE International Conference on Human-Robot Interaction 2013 (HRI 2013): Workshop on Design of Human Likeness in HRI From Uncanny Valley to Minimal Design (pp. 7–13). Tokyo, Japan.

- Złotowski, J., Proudfoot, D., Yogeeswaran, K., & Bartneck, C. (2015). Anthropomorphism: Opportunities and challenges in human-robot interaction. International Journal of Social Robotics, 7, 347–360. doi:10.1007/s12369-014-0267-6

This is a pre-print version | last updated January 10, 2017