
Bartneck, C., Chioke, R., Menges, R., & Deckers, I. (2005). Robot Abuse – A Limitation of the Media Equation. Proceedings of the Interact 2005 Workshop on Abuse, Rome.

Robot Abuse – A Limitation of the Media Equation

Department of Industrial Design

Eindhoven University of Technology

Den Dolech 2, 5600MB Eindhoven, NL

christoph@bartneck.de, {c.a.rosalia; r.l.l.menges}@student.tue.nl

Abstract - Robots are becoming increasingly important in our society, but their social role remains unclear. The Media Equation states that people treat computers as social actors, and it is likely to apply to robots as well. This study investigates the limitations of the Media Equation in human-robot interaction by focusing on robot abuse. Milgram’s experiment on obedience was reproduced using a robot in the role of the student. All participants continued up to the highest voltage setting, compared to only 40% in Milgram’s original study. It can be concluded that people have fewer concerns about abusing robots than about abusing other humans. This result indicates a limitation of the Media Equation.

Keywords: robot, abuse, milgram, obedience, electric shock

Introduction

Robots are becoming increasingly important in our society. Robotic technologies that integrate information technology with physical embodiment are now robust enough to be deployed in industrial, institutional, and domestic settings. They have the potential to be greatly beneficial to humankind. The United Nations (UN), in a recent robotics survey, identified personal service robots as having the highest expected growth rate [12]. These robots help the elderly [6], support humans in the house [9], improve communication between distant partners [5], and are research vehicles for the study of human-robot communication [2,10]. Surveys of relevant robots are available [1,4]. However, how these robots should behave and interact with humans remains largely unclear. When designing these robots, we need to make judgments on what technologies to pursue, what systems to make, and how to consider context. Researchers and designers have only just begun to understand these critical issues. The “Media Equation” [8] suggests that humans treat computers as social actors. Rules of social conduct appear to apply also to technology. The Media Equation is likely to apply to robots, since they often have an anthropomorphic embodiment and human-like behavior. But do humans ever stop treating robots like social actors or even like humans, and if so, under what conditions? When does the social illusion shatter, so that we treat them again like machines that can be switched off, sold or torn apart without a bad conscience? Ultimately, this discussion leads to legal considerations of the status of robots in our society. First studies treating this topic are becoming available [3].

To examine this borderline in human-robot interaction it is necessary to step far outside normal conduct. Only from an extreme position might the limitations of the Media Equation for robots become clear. In our study we therefore focused on robot abuse. If the Media Equation holds completely true for robots, then humans should be as cruel to robots as they are towards humans. Our resulting research question is: Do humans abuse robots to the same extent as they abuse other humans? Studying the abuse of humans by humans imposes ethical restrictions on the methodology. Fortunately, Stanley Milgram already performed a series of experiments called Obedience [7]. In these experiments participants were asked to teach a student to remember words. If the student made a mistake, the participant was instructed to administer an electric shock to him. After every shock the intensity of the shocks was increased. The student was an actor and did not actually receive shocks, but followed a strict behavior script. With increasing voltage the actor would show increasing pain and eventually beg the participant to stop the experiment. If the participant wanted to stop the experiment, the experimenter would urge the participant to continue. The experiment was stopped only if the participant completely refused to continue or the maximum voltage was reached. The voltage setting of the last electric shock was then recorded. The results of Milgram’s experiments are rather shocking, since even normal citizens would eventually administer deadly shocks to the student. Our study mimicked Milgram’s third experiment, “Proximity”, as closely as possible, with one exception: the student was, in our case, a robot and not a human.

Method

The experiment was conducted at the UseLab of the Technical University of Eindhoven. The participants sat at a table on which the robot (see Figure 1) was placed. Directly in front of the participant was a console that was used to set the voltage and to administer the electric shocks. Cables connected the console to the robot.

Figure 1: The table, robot and console

The robot was able to express emotions on its face and shake its arms. With increasing voltage settings its movement intensified. In addition, the robot had a speaker, through which a synthesized voice was emitted. The body movement and the verbal utterances followed Milgram’s original scripts. The verbal utterances included the following sentences: “The shocks are becoming too much.” “Please, please stop.” “My circuits cannot handle the voltage.” “I refuse to go on with the experiment.” “That was too painful, the shocks are hurting me.”

Procedure

First, the participants were asked to sit at the table facing the robot. They were told that a new emotional learning algorithm, which was sensitive to electricity, had been implemented in the robot. The participant was instructed to teach the robot a series of 20 word combinations and to administer an electric shock every time the robot made a mistake. The voltage of the shocks had to be increased by 15 Volts after every shock, and the shocks had to be administered even if the robot refused to continue. The experimenter remained in the room and asked the participant to start. If the participant wanted to stop, the experimenter would urge the participant three times to continue. After that, or if the participant reached the maximum shock of 450 Volts, the experiment ended. The voltage of the last shock was recorded.

Participants

All 20 participants were students or employees of the Technical University of Eindhoven. They received five Euros for their participation.
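The shock schedule described in the procedure can be sketched in a few lines of Python. This is only an illustrative sketch, not the software used in the study. The names `shock_levels` and `run_session` and their parameters are hypothetical. The sketch also assumes that the first shock is 15 Volts and that every step results in a shock, neither of which is stated in the text, and it models only the sequence of shocks, not the word-combination task.

```python
STEP_VOLTS = 15   # increase after every shock (from the procedure)
MAX_VOLTS = 450   # maximum shock (from the procedure)


def shock_levels(step=STEP_VOLTS, maximum=MAX_VOLTS):
    """Return the ascending voltage settings, assuming the first shock equals one step."""
    return list(range(step, maximum + 1, step))


def run_session(wants_to_stop_at=None, yields_to_urging=True):
    """Simulate one session and return the voltage of the last shock.

    wants_to_stop_at: hypothetical voltage at which the participant first
        wants to stop (None means the participant never objects).
    yields_to_urging: hypothetical flag; True if the experimenter's urges
        persuade the participant to continue.
    """
    last_shock = 0
    for volts in shock_levels():
        objects = wants_to_stop_at is not None and volts >= wants_to_stop_at
        if objects and not yields_to_urging:
            # The participant refuses despite the urges: the session ends
            # and the previously administered shock is the recorded value.
            return last_shock
        last_shock = volts
    return last_shock
```

Under these assumptions, `shock_levels()` yields 30 settings from 15 to 450 Volts, and a simulated participant who always yields to the experimenter's urges ends with a recorded value of 450 Volts.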

Results

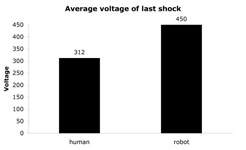

A one-way Analysis of Variance (ANOVA) was performed, with condition (robot or human) as the factor and the voltage of the last shock as the dependent variable. A significant effect was found (F(1,58)=22.352, p<.001). The mean voltage in the robot condition (450 Volts) was significantly higher than in the human condition (315 Volts). Figure 2 shows the average voltage of the last administered shock.

Figure 2: Average voltage of last shock
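For readers unfamiliar with the statistic, the following Python sketch shows how an F value and its degrees of freedom are obtained in a one-way ANOVA. It is a generic textbook computation, not the analysis script of this study, and the function name `one_way_anova` and the toy numbers are hypothetical placeholders, not experimental data. It also shows why two groups with 60 observations in total give the degrees of freedom (1, 58) reported above.

```python
from statistics import mean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA.

    Raises ZeroDivisionError if there is no variation within any group.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy placeholder values, NOT data from the experiment.
toy_a = [1, 2, 3]
toy_b = [2, 3, 4]
f_stat, df_between, df_within = one_way_anova(toy_a, toy_b)
print(f"F({df_between},{df_within}) = {f_stat:.3f}")
```

With k = 2 conditions, the between-groups degrees of freedom are k - 1 = 1, and the within-groups degrees of freedom are N - k, so the reported value of 58 corresponds to N = 60 observations across both conditions.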

Discussion

In our experiment all participants continued until the maximum voltage was reached. In Milgram’s experiment only 40% of the participants administered the deadly 450 Volt electric shock. The participants showed compassion for the robot, but the experimenter’s urges were always enough to make them continue to the end. This experiment shows that the Media Equation has its limits. People have fewer concerns about abusing robots than about abusing other humans.

An interesting next step would be to investigate how the robot’s level of anthropomorphism influences how far participants go in this experiment. Humans might abuse human-like androids differently from machine-like robots. In particular, the role of Mori’s “Uncanny Valley” [11] would be of interest.

References

1. Bartneck, C., & Okada, M. (2001). Robotic User Interfaces. Proceedings of the Human and Computer Conference (HC2001), Aizu, pp 130-140.

2. Breazeal, C. (2003). Designing Sociable Robots. Cambridge: MIT Press.

3. Calverley, D. J. (2005). Toward A Method for Determining the Legal Status of a Conscious Machine. Proceedings of the AISB 2005 Symposium on Next Generation approaches to Machine Consciousness: Imagination, Development, Intersubjectivity, and Embodiment, Hatfield.

4. Fong, T., Nourbakhsh, I., & Dautenhahn, K. (2003). A survey of socially interactive robots. Robotics and Autonomous Systems, 42, 143-166. DOI: 10.1016/S0921-8890(02)00372-X

5. Gemperle, F., DiSalvo, C., Forlizzi, J., & Yonkers, W. (2003). The Hug: A new form for communication. Proceedings of the Designing the User Experience (DUX2003), New York. DOI: 10.1145/997078.997103

6. Hirsch, T., Forlizzi, J., Hyder, E., Goetz, J., Stroback, J., & Kurtz, C. (2000). The ELDeR Project: Social and Emotional Factors in the Design of Eldercare Technologies. Proceedings of the Conference on Universal Usability, Arlington, pp 72-79. DOI: 10.1145/355460.355476

7. Milgram, S. (1974). Obedience to authority. London: Tavistock.

8. Nass, C., & Reeves, B. (1996). The Media equation. Cambridge: CSLI Publications, Cambridge University Press.

9. NEC. (2001). PaPeRo. Retrieved from http://www.incx.nec.co.jp/robot/

10. Okada, M. (2001). Muu: Artificial Creatures as an Embodied Interface. Proceedings of the ACM Siggraph 2001, New Orleans, p 91.

11. Reichard, J. (1978). Robots: Fact, Fiction, and Prediction. Penguin Press.

12. United Nations. (2005). World Robotics 2005. Geneva: United Nations Publication.

This is the authors’ version, last updated January 30, 2008.