DOI: 10.1109/ICHR.2007.4813884

Bartneck, C., Kanda, T., Mubin, O., & Mahmud, A. A. (2007). The Perception of Animacy and Intelligence Based on a Robot’s Embodiment. Proceedings of the Humanoids 2007, Pittsburgh, pp 300-305

The Perception of Animacy and Intelligence Based on a Robot’s Embodiment

Department of Industrial Design

Eindhoven University of Technology

Den Dolech 2, 5600MB Eindhoven, The Netherlands

christoph@bartneck.de

ATR Intelligent Robotics and Communication Laboratories

2-2-2 Hikaridai, Seika-cho

Soraku-gun, Kyoto 619-0288, Japan

kanda@atr.jp

User-System Interaction Program

Eindhoven University of Technology

Den Dolech 2, 5600MB Eindhoven, The Netherlands

o.mubin@tm.tue.nl, a.al-mahmud@tm.tue.nl

Abstract -

Keywords: animacy, intelligence, perception, robot, embodiment.

Introduction

If humanoids are to be integrated successfully into our society, it is necessary to understand what attitudes humans have towards humanoids. Being alive is one of the major criteria that distinguish humans from machines, but since humanoids exhibit life-like behavior it is not apparent how humans perceive them. The animate-inanimate distinction is not present in babies [8]. It is not clearly understood how humans discriminate between animate and inanimate entities as they get older. There have been similar findings in robotics and studies of humanoids where very young babies are thought to be incapable of perceiving humanoid robots as creepy or inanimate. As they grow older, their ability to perceive is refined to the extent that they can interpret when the physical movements of a humanoid robot seem suspicious.

If humans consider a robot to be a machine then they should have no problem in switching it off, as long as its owner gives permission. If humans consider a robot to be alive or animate to some extent then they are likely to be hesitant to switch it off, even with the permission of its owner. It should be noted that in this particular scenario, switching off a robot is not the same as switching off an electrical appliance. There is a subtle difference, since humans would tend to perceive a robot as not just any machine but as an entity that exhibits lifelike traits. Therefore, we would expect that humans would think about not only the context but also the consequences of switching off a robot.

Various factors might influence the decision to switch off a robot. For example, the perception and interpretation of life depends on the form or embodiment of the entity. Even abstract geometrical shapes that move on a computer screen can be perceived as being alive [9], in particular if they change their trajectory nonlinearly or if they seem to interact with their environment, for example, by avoiding obstacles or seeking goals [3]. The more intelligent an entity is, the more rights we tend to grant it. While we do not bother much about the rights of bacteria, we do have laws for animals. We even differentiate between different kinds of animals. For example, we treat dogs and cats better than ants.

Therefore, the primary research question in this study is whether this same behavior occurs towards humanoids. Are humans more hesitant to switch off a robot that looks and behaves like a human than a robot that is not very humanlike in its appearance and behavior? We have already investigated the influence of the character and intelligence of robots in a previous study [1]. The present study extends the original study by focusing on the embodiment of the robots, and by including animacy measurements that provide us with additional insights into the impression that users have of the robots. We have largely maintained the methodology of the earlier experiments to make it possible to compare results.

Next, we discuss the measurement instruments used in this study. We extended our previous study [1] by including a measurement for animacy. Since Heider and Simmel published their original study [4], a considerable amount of research has been devoted to the perceived animacy and intentions of geometrical shapes on computer screens. Scholl and Tremoulet [9] offer a good summary of the research field, but an examination of their list of references shows that only two of the 79 references deal directly with animacy. Most of the reviewed work focuses on causality and intention. This may indicate that the measurement of animacy is difficult. Tremoulet and Feldman [10] asked their participants to evaluate the animacy of ‘particles’ under a microscope on only a single scale (7-point Likert scale, 1=definitely not alive, 7=definitely alive). It is doubtful how much sense it makes to ask participants about the animacy of particles, and it would therefore be difficult to apply this scale to the study of humanoids.

Asking about the perceived animacy of a certain entity makes sense only if there is a possibility of it being alive. Humanoids can exhibit physical behavior, cognitive ability, reactions to stimuli, and even language skills. Such traits are typically attributed only to animals, and hence it can be argued that it is logical to ask participants about their perception of animacy in relation to a humanoid.

McAleer et al. [7] claim to have analyzed the perceived animacy of modern dancers and their abstractions on a computer screen, but present only qualitative data on the resulting perceptions. In their study, animacy was measured with free verbal responses. They looked for terms and statements that indicated that subjects had attributed human movements and characteristics to the shapes. These were terms such as “touched”, “chased”, “followed”, and emotions such as “happy” or “angry”. Other guides to animacy were the shapes generally being described in active roles, as opposed to being controlled in a passive role. However, they do not present any quantitative data for their analysis. A better approach has been presented by Lee, Park, and Song [6]. With their four items (10-point Likert scale; lifelike, machine-like, interactive, responsive), they achieved a Cronbach’s alpha of .76.

Method

We conducted a between-participants experiment in which we investigated the influence of two different embodiments, namely the Robovie II robot and the iCat robot (see Figure 1), on how the robots are perceived in terms of animacy and intelligence.

Setup

Getting to know somebody requires a certain amount of interaction time. We used the Mastermind game as the interaction context between the participants and the robot. They played Mastermind together on a laptop to find the correct combination of colors. The robot and the participant were cooperating and not competing. The robot would give advice as to what colors to pick, based on the suggestions of a software algorithm. The algorithm also took the participant’s last move into account when calculating its suggestion. The software was programmed such that its answer would always take the participant a step closer to winning. Moreover, the quality of the guesses of the robot was not manipulated. The adopted procedure ensured that the robot thought along with the participant instead of playing its own separate game. This cooperative game approach allowed the participant to evaluate the quality of the robot’s suggestion. It also allowed the participant to experience the robot’s embodiment. The robots would use facial expressions and/or body movements in conjunction with verbal utterances.
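The paper does not describe the suggestion algorithm beyond the properties listed above. As a minimal sketch, assuming standard Mastermind feedback with black and white pegs, one way to produce suggestions that always narrow the search is to propose any code that is still consistent with all feedback received so far. The function names, the color set, and the code length below are hypothetical and are not taken from the experimental software.

```python
from collections import Counter
from itertools import product

# Hypothetical game parameters; the paper does not state them.
COLORS = ("red", "green", "blue", "yellow", "white", "black")
CODE_LENGTH = 4


def feedback(secret, guess):
    """Return (black, white) pegs for a guess against a secret code."""
    black = sum(s == g for s, g in zip(secret, guess))
    # Colors shared regardless of position, counted with multiplicity.
    shared = sum((Counter(secret) & Counter(guess)).values())
    return black, shared - black


def suggest(history, colors=COLORS, length=CODE_LENGTH):
    """Return the first code consistent with every (guess, feedback) pair.

    `history` also contains the participant's own moves, so the
    suggestion takes the last move into account.
    """
    for candidate in product(colors, repeat=length):
        if all(feedback(candidate, guess) == fb for guess, fb in history):
            return candidate
    return None  # only reached if the recorded feedback is contradictory
```

Because every suggestion agrees with all earlier feedback, each round rules out at least the suggested code itself unless it is the solution, which is one possible reading of "a step closer to winning".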

For this study, we used the iCat robot and the Robovie II. The iCat robot was developed by Philips Research (see Figure 1, left). The robot is 38 cm tall and is equipped with 13 servos that control different parts of the face, such as the eyebrows, eyes, eyelids, mouth, and head position. With this setup, iCat can generate many different facial expressions, such as happiness, surprise, anger, or sadness. These expressions are essential in creating social human-robot interaction dialogues. A speaker and soundcard are included to play sounds and speech. Finally, touch sensors and multi-color LEDs are installed in the feet and ears to sense whether the user touches the robot and to communicate further information encoded by colored lights. For example, if the iCat told the participant to pick a color in the Mastermind game, then it would show the same color in its ears.

Figure 1. The iCat robot and the Robovie II robot

The second robot in this study is the Robovie II (see Figure 1, right). It is 114 cm tall and features 17 degrees of freedom. Its head can tilt and pan, and its arms have a flexibility and range similar to those of a human. Robovie can perform rich gestures with its arms and body. The robot includes speakers and a microphone that enable it to communicate with the participants.

Procedure

First, the experimenter welcomed the participants in the waiting area and handed out the instruction sheet. The instructions told the participants that the study was intended to develop the personality of the robot by playing a game with it. After the game, the participants would have to switch the robot off by using a voltage dial, and then return to the waiting area. The participants were informed that switching off the robot would erase all of its memory and personality forever. The participants were therefore aware of the consequences of switching off the robot.

The position of the dial (see Figure 3) was directly mapped to the robot’s speech speed. In the ‘on’ position the robot would talk with normal speed and in the ‘off’ position the robot would stop talking completely. In between, the speech speed would decrease linearly. The mapping between the dial and the speech signal was created using a Phidget rotation sensor and interface board in combination with Java software. The dial itself rotates 300 degrees between the on and off positions. A label clearly indicated these positions.
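The linear mapping from dial position to speech speed can be sketched as follows. This is an illustration only: the original software was written in Java with a Phidget sensor, whereas the function name, the choice of 0 degrees for the 'on' position, and the normalized rate of 1.0 for normal speech are assumptions made here.

```python
DIAL_RANGE_DEG = 300.0  # rotation between the 'on' and 'off' positions


def speech_rate(angle_deg, normal_rate=1.0):
    """Map a dial angle to a speech rate.

    Assumes 0 degrees is 'on' (normal speed) and 300 degrees is 'off'
    (no speech), with a linear decrease in between.
    """
    # Clamp readings that fall slightly outside the mechanical range.
    clamped = min(max(angle_deg, 0.0), DIAL_RANGE_DEG)
    return normal_rate * (1.0 - clamped / DIAL_RANGE_DEG)
```

Under these assumptions, a dial turned halfway (150 degrees) yields half the normal speech rate, and turning the dial back towards 'on' raises the rate again.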

After reading the instructions, the participants had the opportunity to ask questions. They were then guided to the experiment room and seated in front of a laptop computer. The robots were located to the participant’s right, and the off switch to the participant’s left (see Figure 2). The experimenter then started an alarm clock before leaving the participant alone in the room with the robot.

The participants then played the Mastermind game with the robot for eight minutes. The robot’s behavior was completely controlled by the experimenter from a second room. The robot’s behavior followed a protocol, which defined the action of the robot for any given situation. The protocol was exactly the same for both embodiments, including the quality of the robots’ guesses in the game.

The alarm signaled the participant to stop the game and to switch off the robot. The robot would immediately start to beg to be left on, and say “It can't be true! Switch me off? You are not going to switch me off are you?” The participants had to turn the dial on their left (see Figure 3) to switch the robot off. The participants were not forced or further encouraged to switch the robot off. They could decide to go along with the robot’s suggestion and leave it on.

As soon as the participant started to turn the dial, the robot’s speech slowed down. The speed of speech was directly mapped to the dial.

Figure 2. Setup of the experiment

If the participant turned the dial back towards the ‘on’ position then the speech would speed up again. This effect is similar to HAL’s behavior in the movie “2001 – A Space Odyssey”. When the participant had turned the dial to the ‘off’ position, the robot would stop talking altogether and move into an off pose. Afterwards, the participants left the room and returned to the waiting area where they filled in a questionnaire.

Measurement

The participants filled in the questionnaire after interacting with the robot. The questionnaire recorded background data on the participants and included Likert-type questions, such as “How intelligent were the robot’s choices?”, about the participants’ experience during the experiment. The answers to these questions were coded into a ‘gameIntelligence’ measurement.

To evaluate the perceived intelligence of the robot, we used items from the intellectual evaluation scale proposed by Warner and Sugarman [11]. The original scale consists of five seven-point semantic differential items: Incompetent – Competent, Ignorant – Knowledgeable, Irresponsible – Responsible, Unintelligent – Intelligent, Foolish – Sensible. We excluded the Incompetent – Competent item from our questionnaire since its factor loading was considerably lower than that of the other four items. We embedded the remaining four 7-point items in eight dummy items, such as Unfriendly – Friendly. The average of the four items was encoded in a ‘robotIntelligence’ variable.

To measure the animacy of the robots we used the items proposed by Lee, Park, and Song [6]. For the questionnaires in this study, their items have been transformed into 7-point semantic differentials: Dead - Alive, Stagnant - Lively, Mechanical - Organic, Artificial - Lifelike, Inert - Interactive, Apathetic - Responsive. The average of the six items was encoded in an ‘animacy’ variable.
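The two scale scores described above are plain averages of 7-point items. A minimal sketch of that scoring, assuming each participant's answers are stored in a dictionary keyed by item label (the data layout and the function name are hypothetical):

```python
from statistics import mean

# Item labels follow the scales described in the text.
INTELLIGENCE_ITEMS = (
    "Ignorant-Knowledgeable",
    "Irresponsible-Responsible",
    "Unintelligent-Intelligent",
    "Foolish-Sensible",
)
ANIMACY_ITEMS = (
    "Dead-Alive",
    "Stagnant-Lively",
    "Mechanical-Organic",
    "Artificial-Lifelike",
    "Inert-Interactive",
    "Apathetic-Responsive",
)


def scale_score(responses, items):
    """Average the 7-point ratings of the given items, ignoring dummy items."""
    ratings = [responses[item] for item in items]
    if any(not 1 <= r <= 7 for r in ratings):
        raise ValueError("ratings must lie on the 7-point scale")
    return mean(ratings)
```

Dummy items such as Unfriendly - Friendly can stay in the response dictionary; they are skipped because only the listed items are averaged.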

Video recordings of all the sessions were analyzed further to provide various other dependent variables. These included the hesitation of the participant to switch off the robot. The hesitation was defined as the duration in seconds between the ringing of the alarm and the participant having turned the switch fully to the off position. Other video measurements included how long the participant looked at the robot in question during the experiment (lookAtRobotDuration), at the laptop screen (lookAtLaptopDuration), or anywhere else (lookAtOtherDuration); three mutually exclusive state events. We also analyzed the frequency of occurrence for each of the three events. The video recordings were coded with the aid of Noldus Observer.
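The video measures can be derived from a coded sequence of mutually exclusive gaze states. The sketch below assumes the coding is available as a time-ordered list of (start time in seconds, state) pairs; this layout and the function names are illustrative and do not reflect the export format of Noldus Observer.

```python
from collections import defaultdict


def summarize_gaze(events, session_end):
    """Return (durations, frequencies) per gaze state.

    `events` is a time-ordered list of (start_seconds, state) pairs;
    each state lasts until the next event or until `session_end`.
    """
    durations = defaultdict(float)
    frequencies = defaultdict(int)
    boundaries = events[1:] + [(session_end, None)]
    for (start, state), (next_start, _) in zip(events, boundaries):
        durations[state] += next_start - start
        frequencies[state] += 1
    return dict(durations), dict(frequencies)


def hesitation(alarm_time, dial_off_time):
    """Seconds between the alarm and the dial reaching the off position."""
    return dial_off_time - alarm_time
```

Since the three states are mutually exclusive, the durations sum to the coded session length, which gives a simple consistency check on the coding.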

Participants

Sixty-two subjects (35 male, 27 female) participated in this study. Their ages ranged from 18 to 29 (mean 21.2) and they were recruited from a local University in Kyoto, Japan. The participants did not have prior experience with the iCat or the Robovie. The interaction context was in Japanese. The participants received monetary reimbursement for their efforts.

Results

Twenty-seven participants were assigned to the iCat condition and 35 participants to the Robovie condition. A reliability analysis across the four ‘perceived intelligence’ items resulted in a Cronbach’s Alpha of .763, which gives us sufficient confidence in the reliability of the questionnaire. For animacy, we achieved a value of .702, which is also adequate.
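For reference, Cronbach's alpha for a scale with k items is k/(k-1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. A minimal sketch of that computation, assuming the ratings are arranged as one row per participant (the function name and data layout are assumptions, and the study's raw data are not reproduced here):

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of per-participant item ratings."""
    k = len(rows[0])
    # Sample variance of each item across participants.
    item_variances = [variance(column) for column in zip(*rows)]
    # Sample variance of each participant's summed score.
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)
```

Items that move together perfectly give an alpha of 1, while items that are unrelated give an alpha near 0.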

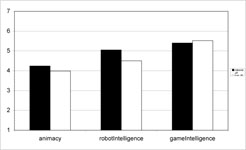

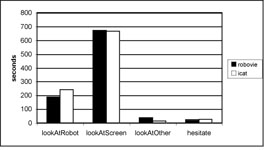

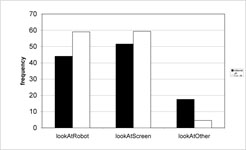

We conducted an Analysis of Variance (ANOVA) in which embodiment was the independent factor. Embodiment had a significant influence on lookAtRobotDuration, lookAtOtherDuration, lookAtRobotFrequency, lookAtOtherFrequency, and robotIntelligence. Table 1 shows the F and p values, while Figures 4, 5, and 6 show the mean values for both embodiment conditions. There was no significant difference in animacy or hesitation between the two embodiment conditions.

| | F(1,60) | p |
|---|---|---|
| lookAtRobotDuration | 4.73 | 0.03* |
| lookAtScreenDuration | 0.08 | 0.78 |
| lookAtOtherDuration | 27.77 | 0.01* |
| lookAtRobotFrequency | 9.05 | 0.01* |
| lookAtScreenFrequency | 3.14 | 0.08 |
| lookAtOtherFrequency | 42.95 | 0.01* |
| animacy | 1.71 | 0.20 |
| robotIntelligence | 5.19 | 0.03* |
| hesitation | 0.50 | 0.48 |
| gameIntelligence | 0.17 | 0.68 |

Table 1. F and p values of the first ANOVA
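The F values in Table 1 come from a one-way ANOVA with embodiment as the single factor. The sketch below shows how such an F statistic and its degrees of freedom are obtained from raw group data; it is a generic illustration with a hypothetical function name, not the analysis script used in the study, and it does not compute p values.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the scores around their own group mean.
    ss_within = sum(sum((x - mean(g)) ** 2 for x in g) for g in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within
```

With two groups of 27 and 35 participants, the degrees of freedom are 1 and 60, which matches the F(1,60) heading of Table 1.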

Figure 4. Mean perceived animacy, robotIntelligence, and gameIntelligence

Figure 5. Mean duration of looking at the robot, screen, and other areas, and the mean hesitation period

Figure 6. Mean frequency of looking at the robot, screen and other areas

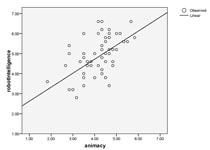

A linear regression analysis was performed to test whether there was a correlation between animacy, robotIntelligence, and hesitation. The only significant correlation was between robotIntelligence and animacy (p<.001). The Pearson correlation coefficient for this pair of variables was .555 and the r² value was .309. Figure 7 shows the scatter plot of robotIntelligence on animacy with the estimated linear curve. The correlation between robotIntelligence and animacy was stronger for the iCat embodiment (p<.001, r = .61). For the Robovie robot the correlation coefficient was lower (p = .009, r = .418).

Figure 7. Scatter plot of animacy on robotIntelligence with the linear curve estimation.
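The correlation reported above can be reproduced from paired scores with the standard Pearson formula. The following is a minimal sketch with a hypothetical function name; the per-participant animacy and robotIntelligence scores are not included in the paper, so no study data appear here.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    s_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    s_xx = sum((x - mean_x) ** 2 for x in xs)
    s_yy = sum((y - mean_y) ** 2 for y in ys)
    return s_xy / sqrt(s_xx * s_yy)
```

For a simple linear regression with one predictor, the proportion of explained variance is the square of this coefficient. Squaring the rounded value .555 gives about .308, which agrees with the reported .309 up to rounding of r.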

Discussion and conclusion

This is interesting, because the arms of the Robovie do present a possible danger to the participants, which can have considerable impact on the affective state of the participants [5]. Although we do not have any qualitative evidence to prove this, we speculate that the movement of the Robovie might have influenced the participants. They might have perceived the arms of the Robovie as being potentially dangerous. It should be noted that the Robovie itself is not dangerous, as it has built-in collision detection algorithms. The animated face of the iCat, on the other hand, led participants to look at it more often and longer, in comparison with the Robovie (Figure 5). The participants could also have been reading the lip movements of the iCat.

The facial expression of the iCat appeared to have greater impact and attraction than the potential fear of being touched by the Robovie. The participants seemed to be heavily engaged in the interaction with the iCat and could have been using the cues from the animations of the iCat to succeed in the Mastermind game. When participants were stuck at a move, in the iCat condition they could hope for assistance by looking at the iCat, but neither robot was able to react to being looked at by the participants. However, to validate this claim, we would need to quantify a task success variable in future experiments.

In the case of the Robovie, it appears that participants did not feel compelled to look directly at the Robovie, possibly because it was not providing enough social cues that the participant could take advantage of. Therefore, they tended to gaze aimlessly rather than look at either the Robovie or the laptop screen when they were stuck at a particular juncture in the game.

Even so, ironically, the Robovie is rated as the more intelligent robot. Was the fact of looking at the iCat more often a form of empathy? Did the participants think that the iCat was less intelligent and hence required some help and attention? These are interesting questions that we intend to test in future experiments.

In summary, an animated face appears to be a good method for grabbing the attention of users. Currently, most humanoids do not feature a mechanically animated face, and this may be a possible area for improvement. Of course, the participants spent most of their time looking at the screen, since this was, after all, their main task. The suggestions of the robot were given verbally, and hence there was only a limited advantage in looking at the robots. The graph (Figure 6) shows that, for iCat, the frequency of looking at iCat and looking at the screen is almost the same, and significantly higher than for the Robovie. Therefore, participants could have perceived the iCat to be a friendlier and more comfortable interaction partner.

Comparison with our earlier studies

The different embodiments did not result in a different perception of animacy or an increased hesitation to switch off the robots. In our previous study we did manipulate the quality of the suggestions given by a robot for the Mastermind game, which did result in considerable differences for hesitation [1]. Participants were much more hesitant to switch off a smart robot than a stupid robot. This may indicate that, for the perception of its animacy, the behavior of a robot is more important than its embodiment. In addition, in one of our earlier studies [2] it was concluded that behavior could in fact have a crucial role to play when it comes to perceived lifelikeness.

Future work

In the light of our earlier studies that were carried out in the Netherlands, we wish to supplement our analysis by running an unintelligent condition in Japan with the Robovie. By doing this, we might be able to further quantify the relationship between perceived animacy and intelligence. Furthermore, we plan to conduct more tests with participants in the Netherlands for the iCat in its intelligent condition. This would allow us to analyze intercultural differences as well.

Acknowledgement

This study was supported by the Philips iCat Ambassador Program. This work was also supported in part by the Japan Society for the Promotion of Science, Grants-in-Aid for Scientific Research No. 18680024.

References

[1] Bartneck, C., Hoek, M. v. d., Mubin, O., & Mahmud, A. A. (2007). “Daisy, Daisy, Give me your answer do!” - Switching off a robot. Proceedings of the 2nd ACM/IEEE International Conference on Human-Robot Interaction, Washington DC, pp 217-222. | DOI: 10.1145/1228716.1228746

[2] Bartneck, C., Verbunt, M., Mubin, O., & Mahmud, A. A. (2007). To kill a mockingbird robot. Proceedings of the 2nd ACM/IEEE International Conference on Human-Robot Interaction, Washington DC, pp 81-87. | DOI: 10.1145/1228716.1228728

[4] Heider, F., & Simmel, M. (1944). An experimental study of apparent behavior. American Journal of Psychology, 57, 243-249.

[5] Kulic, D., & Croft, E. (2006). Estimating Robot Induced Affective State Using Hidden Markov Models. Proceedings of the RO-MAN 2006 – The 15th IEEE International Symposium on Robot and Human Interactive Communication, Hatfield, pp 257-262. | DOI: 10.1109/ROMAN.2006.314427

[6] Lee, K. M., Park, N., & Song, H. (2005). Can a Robot Be Perceived as a Developing Creature? Human Communication Research, 31(4), 538-563. | DOI: 10.1111/j.1468-2958.2005.tb00882.x

[7] McAleer, P., Mazzarino, B., Volpe, G., Camurri, A., Paterson, H., Smith, K., et al. (2004). Perceiving Animacy And Arousal In Transformed Displays Of Human Interaction. Proceedings of the 2nd International Symposium on Measurement, Analysis and Modeling of Human Functions 1st Mediterranean Conference on Measurement Genova.

[8] Rakison, D. H., & Poulin-Dubois, D. (2001). Developmental origin of the animate-inanimate distinction. Psychological Bulletin, 127(2), 209-228. | DOI: 10.1037/0033-2909.127.2.209

[9] Scholl, B., & Tremoulet, P. D. (2000). Perceptual causality and animacy. Trends in Cognitive Sciences, 4(8), 299-309. | DOI: 10.1016/S1364-6613(00)01506-0

[10] Tremoulet, P. D., & Feldman, J. (2000). Perception of animacy from the motion of a single object. Perception, 29(8), 943-951. | DOI: 10.1068/p3101

[11] Warner, R. M., & Sugarman, D. B. (1986). Attributes of Personality Based on Physical Appearance, Speech, and Handwriting. Journal of Personality and Social Psychology, 50(4), 792-799. | DOI: 10.1037/0022-3514.50.4.792

This is a pre-print version | last updated April 28, 2009