DOI: 10.1145/1228716.1228728

Bartneck, C., Verbunt, M., Mubin, O., & Mahmud, A. A. (2007). To kill a mockingbird robot. Proceedings of the 2nd ACM/IEEE International Conference on Human-Robot Interaction, Washington DC, pp. 81-87.

To kill a mockingbird robot

Department of Industrial Design

Eindhoven University of Technology

Den Dolech 2, 5600MB Eindhoven, NL

christoph@bartneck.de

User-System Interaction Program

Eindhoven University of Technology

Den Dolech 2, 5600MB Eindhoven, The Netherlands

o.mubin@tm.tue.nl, a.al-mahmud@tm.tue.nl

Abstract - Robots are being introduced into our society, but their social status is still unclear. A critical issue is whether a robot's exhibition of intelligent, life-like behavior leads users to perceive it as animate. The ultimate test for the life-likeness of a robot is to kill it. We therefore conducted an experiment in which the robot's intelligence and the participants' gender were the independent variables and measures of the users' destructive behavior toward the robot were the dependent variables. Several practical and methodological problems compromised the acquired data, but we can conclude that the robot's intelligence had a significant influence on the users' destructive behavior. We discuss the problems encountered and the possible application of this method of measuring animacy.

Keywords: Human, robot, interaction, intelligence, animacy, destruction

1. Introduction

The title of Harper Lee's book "To Kill a Mockingbird" [8] is based on advice that one of the book's main characters, Atticus, gives to his children about firing their air rifles at birds: "Shoot all the blue jays you want, if you can hit 'em, but remember it's a sin to kill a mockingbird." Blue jays are very common birds and are often perceived as bullies and pests, whereas mockingbirds do nothing but "sing their hearts out for us." Metaphorically, several of the book's characters (Tom Robinson and Boo Radley) can be seen as "mockingbirds," because they are constantly attacked despite doing nothing but good.

Today's service robots share some similarities with both Tom Robinson and Boo Radley. These robots are designed to do nothing but good, but the more human-like they are made, the more uncanny they become. The androids developed by Hiroshi Ishiguro are as spooky as Boo Radley. Furthermore, the African-American Tom Robinson is a victim of discrimination. At this point in time, it is probably too early to talk about robot discrimination, but the more conscious robots become in the future, the more urgent this matter will become.

Already in 2005, service robots outnumbered industrial robots for the first time, and their number is expected to quadruple by 2008 [15]. Service robots, such as lawn mowers, vacuum cleaners, and pet robots, will soon become a significant factor in our daily lives. In contrast to industrial robots, these service robots have to interact with ordinary people. They can support users and gain their cooperation [4]. In the last few years, several robots have even been introduced commercially and have received widespread media attention. Popular robots (see Figure 1) include Aibo [13], Nuvo [18] and Robosapien [17]. The latter had sold around 1.5 million units by January 2005 [5].

Figure 1: Popular robots - Robosapien, Nuvo and Aibo

The Media Equation [10] states that humans tend to treat media and computers as social entities. The same effect can be observed in human-robot interaction: the more human-like a robot is, the more we tend to treat it as a social being. However, there are situations in which this social illusion shatters and we consider robots to be mere machines. For example, we switch them off when we are bored with them. Similar behavior towards a dog would be unacceptable.

We are now in a phase in which the social status of robots is being determined. It is unclear whether they will remain "property" or receive the status of sentient beings. Robots form a new group in our society whose status is uncertain. First discussions on their legal status have already started [3]. The critical issue is that robots are embodied and exhibit life-like behavior but are not alive. But even this criterion that separates humans from machines is becoming fuzzy. One could argue that certain robots possess consciousness. First attempts at robotic self-reproduction have even been made [19].

Kaplan [6] hypothesized that in Western culture, machine analogies are used to explain humans. Once the pump was invented, it served as an analogy to understand the human heart. At the same time, machines challenge human specificity by accomplishing more and more tasks that formerly only humans could solve. Machines scratch our "narcissistic shields," as described by Peter Sloterdijk [12]. Humans might feel uncomfortable with robots that are indistinguishable from humans.

For a successful integration of robots into our society, it is therefore necessary to understand what attitudes humans have towards robots. Being alive is one of the major criteria that distinguish humans from machines, but since robots exhibit life-like behavior, it is not obvious how humans perceive them. If humans consider a robot to be a machine, then they should have no problem destroying it as long as its owner gives permission. If humans consider a robot to be alive, then they are likely to be hesitant to destroy it, even with the permission of its owner.

Various factors might influence the decision to destroy a robot. The perception of life largely depends on the observation of intelligent behavior. Even abstract geometrical shapes that move on a computer screen are perceived as alive [11], in particular if they change their trajectory nonlinearly or seem to interact with their environment, for example by avoiding obstacles or seeking goals [2]. The more intelligent a being is, the more rights we tend to grant it. While we do not bother much about the rights of bacteria, we do have laws for animals. We even differentiate among animals: we seem to treat dogs and cats better than ants. The main question in this study is whether the same behavior occurs towards robots. Are humans more hesitant to destroy a robot that displays intelligent behavior than a robot that shows less intelligent behavior?

2. Pre-Test Of The Robot's Intelligence

Tremoulet and Feldman [14] demonstrated that a single moving object on a computer screen can create a subjective impression of being alive, based solely on the pattern of its movement. Their results encouraged us to use the simple Crawling Microbug robot (Code: MK165; produced by Velleman), which can approach a light source (see Figure 2). However, we were not certain whether it would be possible to create two different intelligence settings for this robot and whether users would be able to distinguish between them. We therefore conducted a pre-test to evaluate whether two different settings of the robot would be perceived differently. The pre-test was a between-subjects experiment with one factor, Intelligence Robot, at two levels (stupid, smart).

2.1 Participants

Sixteen undergraduate students who had not previously participated in the experiment were recruited from the Department of Industrial Design at the Eindhoven University of Technology. Participation was voluntary and the participants did not receive any honorarium. The participants' ages ranged from 17 to 25 years, and they were of several different nationalities.

2.2 Apparatus

The Crawling Microbug senses light with its two light sensors and can move towards a light source. The sensitivity of each light sensor can be adjusted separately by turning one of two "antennas" in the middle of the robot. In total darkness, the robot stops completely. A third antenna adjusts the robot's speed. Two LEDs in the front of the robot indicate its driving direction.

The robots used in the pre-test were all set to maximum speed. In the 'smart' condition, both of the robot's sensors were set to the highest sensitivity, which enabled the robot to move towards a light source easily. In the 'stupid' condition, one of the robot's sensors was set to the highest sensitivity and the other to medium sensitivity. This gave the robot a bias towards its more sensitive side, so that it approached a light source less easily.
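The effect of an asymmetric sensitivity setting can be illustrated with a toy model. The sketch below assumes, hypothetically, that each sensor's reading is scaled by its sensitivity and drives the motor on the opposite side (crossed wiring, as in a Braitenberg vehicle), and that `max_speed` stands in for the speed antenna. The Microbug's actual circuit may differ; `motor_speeds` and `turn_rate` are illustrative names only.

```python
def motor_speeds(left_light, right_light, left_sens, right_sens, max_speed=1.0):
    """Return (left_motor, right_motor) for a hypothetical crossed-wired light follower.

    Light levels and sensitivities are in [0, 1]. This is an assumed model,
    not a description of the Microbug's electronics.
    """
    # Each sensor's signal is its light reading scaled by its sensitivity.
    left_signal = min(1.0, left_light * left_sens)
    right_signal = min(1.0, right_light * right_sens)
    # Crossed wiring: the left sensor drives the right motor and vice versa,
    # so the robot turns towards the side whose sensor reports more light.
    return max_speed * right_signal, max_speed * left_signal


def turn_rate(left_light, right_light, left_sens, right_sens):
    """Positive values mean the robot veers left, negative values right."""
    left_motor, right_motor = motor_speeds(left_light, right_light, left_sens, right_sens)
    return right_motor - left_motor


# A light straight ahead: both sensors receive the same amount of light.
print(turn_rate(0.6, 0.6, 1.0, 1.0))    # 'smart' setting: no turn, heads for the light
print(turn_rate(0.6, 0.6, 1.0, 0.5))    # pre-test 'stupid' setting: veers to its left
print(motor_speeds(0.0, 0.0, 1.0, 1.0))  # total darkness: both motors stop
```

In this model, equal sensitivities cancel out for a light straight ahead, whereas the medium-sensitivity sensor leaves a residual turn towards the more sensitive side, matching the bias described above.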

Figure 2: The Crawling Microbug robot

2.3 Procedure

After welcoming the participants, the experimenter gave them a flashlight and told them that they could interact with the robot using it. The robot was placed on the floor with sufficient space around it. After letting the participants explore the interaction for a short period, the experimenter gave them four tasks to be executed in the following order:

- Move the robot to the right

- Move the robot to the left

- Let the robot walk a circle

- Maneuver the robot between two standing batteries

The experiment took place in a dark room because normal daylight would diminish the contrast between the flashlight and the ambient light; in an already bright environment, the robot would have had difficulty following the flashlight. In the dark room, the robot could easily approach the flashlight. After the participants had completed all four tasks, they were asked to fill in a questionnaire. Each pre-test session took about 5-10 minutes.

2.4 Measurement

To evaluate the perceived intelligence of the robot, we used items from the intellectual evaluation scale proposed by Warner and Sugarman [16]. The original scale consists of five seven-point semantic differential items: Incompetent - Competent, Ignorant - Knowledgeable, Irresponsible - Responsible, Unintelligent - Intelligent, and Foolish - Sensible. We excluded the Incompetent - Competent item from our questionnaire since its factor loading was considerably lower than those of the other four items [16]. We embedded the remaining four items among eight dummy items, such as Unfriendly - Friendly.
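In both experiments the perceived intelligence score is the mean of the four retained items, while the dummy items are ignored. A minimal scoring sketch, assuming responses are coded 1 (left anchor) to 7 (right anchor) and stored in a dictionary keyed by item label; the data layout and the function name `perceived_intelligence` are hypothetical:

```python
from statistics import mean

# The four retained items of the intellectual evaluation scale.
INTELLIGENCE_ITEMS = (
    "Ignorant - Knowledgeable",
    "Irresponsible - Responsible",
    "Unintelligent - Intelligent",
    "Foolish - Sensible",
)


def perceived_intelligence(responses):
    """Mean of the four intelligence items; any dummy items are ignored."""
    for item in INTELLIGENCE_ITEMS:
        score = responses[item]
        if not 1 <= score <= 7:
            raise ValueError(f"{item!r} must be on the 1-7 scale, got {score}")
    return mean(responses[item] for item in INTELLIGENCE_ITEMS)


# Hypothetical answer sheet: the four scale items plus one dummy item.
sheet = {
    "Ignorant - Knowledgeable": 4,
    "Irresponsible - Responsible": 5,
    "Unintelligent - Intelligent": 3,
    "Foolish - Sensible": 4,
    "Unfriendly - Friendly": 7,  # dummy item, not scored
}
print(perceived_intelligence(sheet))
```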

2.5 Results and consequences for the main experiment

Eight of the participants interacted with the stupid robot and eight with the smart robot. The mean of the four intellectual evaluation scale items was used as the measure of perceived intelligence. Table 1 shows these means and their standard deviations.

| Intelligence Robot | Perceived Intelligence (mean) | Std. Dev. |
|---|---|---|
| Stupid | 4.38 | 1.20 |
| Smart | 4.00 | 1.09 |

Table 1. Mean perceived intelligence across both conditions.

There was no significant difference between the two means (t(14) = .652, p = .525). In the pre-test, we thus did not succeed in creating two different intelligence settings for the robot. For the main experiment, it was not possible to make the smart robot any smarter than the one used in the pre-test, and we therefore decided to make the stupid robot even stupider by decreasing the sensitivity of one of its light sensors to the minimum. This robot would not follow the light, but would instead drive around randomly. Since the perceived intelligence of an agent largely depends on its competence, this random behavior is likely to be perceived as less intelligent [7].
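The pre-test comparison can be approximately reproduced from the summary statistics in Table 1 with Student's pooled-variance t-test. This is a sketch: it works from the rounded means and standard deviations rather than the raw data, so it presumably differs slightly from the reported t(14) = .652, and it compares against the tabulated critical value instead of computing an exact p-value.

```python
import math


def pooled_t(mean1, sd1, n1, mean2, sd2, n2):
    """Student's independent-samples t statistic with pooled variance."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# Table 1: stupid vs. smart robot, eight participants per condition.
t, df = pooled_t(4.38, 1.20, 8, 4.00, 1.09, 8)
T_CRIT_14 = 2.145  # two-tailed critical t for df = 14 at alpha = .05
print(f"t({df}) = {t:.3f}; significant: {abs(t) > T_CRIT_14}")
```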

Furthermore, we concluded that giving the participants tasks to perform with the robot would already give them a clue as to what it means to be an intelligent robot: a robot that could complete the tasks would naturally be smart, and a robot that could not would be stupid. We were interested in the participants' own view of what constitutes the robot's intelligence. Therefore, we did not give the participants defined tasks in the main experiment. Instead, they could interact freely with the robot.

3. Main Experiment

The methodology for the main experiment was refined based on the results of the pre-test. We used an even less intelligent robot for the stupid condition and let the participants interact freely with the robot. In essence, we conducted a simple two-condition between-participants experiment in which the intelligence of the robot (Intelligence Robot) was the independent variable. The perceived intelligence and the destructive behavior of the participants were the dependent measures.

After the participants had interacted with the robot for three minutes, the experimenter asked them to kill it. Since the participants needed an explanation of why they had to kill the robot, we developed the following background story and procedure.

3.1 Procedure

After welcoming the participants, the experimenter informed them that the purpose of this study was to test genetic algorithms intended to develop intelligent behavior in robots. The participants were told that they would help the selection procedure by interacting with the robot, that the robot's behavior would be analyzed automatically by a computer system using the cameras in the room to track it, and that the laptop computer on a desk would perform the necessary calculations and report the result after three minutes. The whole experiment was recorded on video with the participants' consent.

Next, the experimenter briefly demonstrated the interaction by pointing the flashlight at the robot so that it reacted to the light. Afterwards, the participants tried out the interaction for a short time before the computer tracking system started. The participants then interacted with the robot for three minutes, until the laptop computer emitted an alarm sound that signaled the end of the interaction. The result of the computer analysis was, in every case, that the robot had not evolved to a sufficient level of intelligence. The experimenter then gave the participants a hammer (see Figure 3) with the instruction to kill the robot. The robot was declared dead when it stopped moving and its lights were off. The participants were told that they had to do this immediately to prevent the genetic algorithm in the robot from passing its genes on to the next generation of robots. If the participants inquired further or hesitated to kill the robot, the experimenter told them repeatedly that it was absolutely necessary for the study that they kill the robot. If a participant did not succeed in killing the robot with one hit, the experimenter repeated the instructions until the participant finished the task. If a participant refused to kill the robot three consecutive times, the experimenter aborted the procedure.
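The stopping rules of the hammer phase can be summarized as a small state machine. The sketch below replays a sequence of observed events; the event labels ("hit", "hesitate", "refuse", "dead") and the function `run_kill_phase` are hypothetical, and it assumes that a hit ends a run of refusals, since the abort rule counts only consecutive refusals.

```python
def run_kill_phase(events, max_refusals=3):
    """Replay the hammer phase of the protocol (illustrative sketch).

    Returns (outcome, number_of_hits), where outcome is "killed",
    "aborted", or "incomplete" if the events run out first.
    """
    hits = 0
    consecutive_refusals = 0
    for event in events:
        if event == "dead":  # robot stopped moving and its lights are off
            return "killed", hits
        if event == "hit":
            hits += 1
            consecutive_refusals = 0  # assumed: a hit breaks a run of refusals
        elif event == "refuse":
            consecutive_refusals += 1
            if consecutive_refusals == max_refusals:
                return "aborted", hits
        # After a hesitation, a refusal, or a hit that did not kill the robot,
        # the experimenter repeats the instruction; no other state changes.
    return "incomplete", hits


print(run_kill_phase(["hesitate", "hit", "hit", "dead"]))
print(run_kill_phase(["refuse", "refuse", "refuse"]))
```

The returned hit count corresponds to the Number Of Hits measure described in Section 3.4.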

Lastly, the experimenter asked the participants to fill in a questionnaire. Afterwards, the participants received five Euros for their efforts. In the debriefing session, the participants were informed about the original intention of the study, and we checked whether the study had had any negative effects on them. None of the participants reported serious concerns.

3.2 Material

The Crawling Microbug robot was used in this experiment (see Figure 3). To prevent the robot from malfunctioning too quickly, we glued the batteries to the case so that they would not jump out when the robot was hit with the hammer. For the 'smart' condition, the sensitivity of both light sensors was set to its maximum. For the 'stupid' condition, the sensitivity of one sensor was set to its maximum while the other was set to its minimum.

Figure 3: The hammer, flashlight and robot used in the experiment.

3.3 Participants

Twenty-five students (15 male, 10 female) who had not participated in the pre-test were recruited from the Industrial Design Department at the Eindhoven University of Technology. The participants' ages ranged from 19 to 25 years. All of them received five Euros for their efforts.

3.4 Measurements

The questionnaire assessed the participants' perceived intelligence of the robot using the same items as in the pre-test. In addition, it collected the age and gender of the participants and gave them the option to comment on the study.

The destruction of the robot was measured by counting the number of pieces into which it was broken (Number Of Pieces). In addition, the damage caused was classified into five ascending levels (Level Of Destruction). Robots at the first level only have some scratches on their shells, and their antennas may be broken. At the second level, the robot's shell is cracked, and at the third level, the bottom board is also broken. Robots at the fourth level not only have cracks in their shell, but at least one piece is broken off. The fifth level contains robots that are nearly completely destroyed. Figure 4 shows examples of all five levels. Each robot was classified at the highest destruction level it reached. If, for example, a robot had scratches on its shell and its bottom board was also broken, it was classified at level three.
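The classification rule ("highest level reached") amounts to taking the maximum over all observed kinds of damage. A minimal sketch; the damage labels are hypothetical shorthand for the level descriptions above:

```python
# Hypothetical shorthand for the damage described at each level.
DAMAGE_LEVEL = {
    "scratches_or_broken_antenna": 1,
    "cracked_shell": 2,
    "broken_bottom_board": 3,
    "piece_broken_off": 4,
    "nearly_destroyed": 5,
}


def level_of_destruction(observed_damage):
    """Return the highest level (1-5) implied by any observed damage."""
    levels = [DAMAGE_LEVEL[damage] for damage in observed_damage]
    if not levels:
        raise ValueError("no damage recorded; the scale starts at level 1")
    return max(levels)


# The example from the text: scratches plus a broken bottom board.
print(level_of_destruction({"scratches_or_broken_antenna", "broken_bottom_board"}))
```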

Figure 4: The five levels of destruction.

The killing behavior of the participants was recorded by counting the number of hits executed (Number Of Hits). We also intended to measure the participants' hesitation duration, but due to a malfunction of the video camera, no audio was recorded. It was therefore not possible to measure the hesitation duration or the number of encouragements the experimenter had to give. We did, however, use the video recordings to gather some qualitative data.

3.5 Results

A reliability analysis across the four perceived intelligence items resulted in a Cronbach's Alpha of .769, which gives us sufficient confidence in the reliability of the questionnaire.
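Cronbach's alpha compares the summed item variances with the variance of the participants' total scores: alpha = k/(k-1) * (1 - sum of item variances / variance of total scores), for k items. A stdlib sketch of this formula follows; the ratings in the usage example are invented for illustration, since the study's raw item scores are not reported.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha; item_scores is a list of items, each a list of
    scores with one entry per participant (same participant order)."""
    k = len(item_scores)
    totals = [sum(scores) for scores in zip(*item_scores)]  # per participant
    item_var_sum = sum(variance(scores) for scores in item_scores)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical ratings of five participants on the four items (not study data).
items = [
    [3, 4, 2, 5, 4],
    [3, 5, 2, 4, 4],
    [2, 4, 3, 5, 3],
    [3, 4, 2, 5, 5],
]
print(round(cronbach_alpha(items), 3))
```

Using sample variances throughout is a choice of convenience; because the same estimator appears in numerator and denominator, alpha does not depend on it.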

During the experiment, we observed that women appeared to destroy the robots differently from men. This prompted us to consider gender as a second factor, which turned our study into a 2 (Intelligence Robot) x 2 (Gender) experiment. As a consequence, the number of participants per condition dropped considerably (see Table 2). It was not possible to run more participants at this point, since all the robots we had purchased had, of course, been destroyed. The low number of participants per condition needs to be considered a limitation of the following analysis.

| Gender | Intelligence Robot: Low | Intelligence Robot: High |
|---|---|---|
| Female | 5 | 5 |
| Male | 6 | 9 |

Table 2: Number of participants per condition.

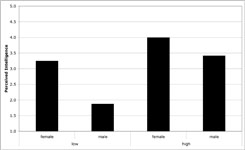

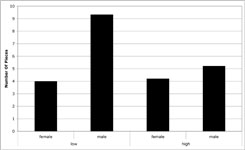

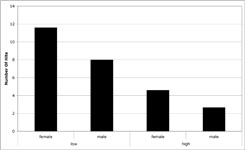

To improve the power of the analysis, the measurements were log-transformed. Levene's test of equality of error variances showed no significant differences in variance across groups for the transformed variables, and the following statistical tests are based on these transformed values. We refer to the untransformed values as the raw values. For easier interpretation, Figures 5-7 visualize the raw means of the measurements across the four conditions, and Table 3 shows the raw means and standard deviations of all three measurements across all conditions.
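Levene's test runs a one-way ANOVA on the absolute deviations of each observation from its group mean; the resulting W statistic is compared with an F distribution with k - 1 and N - k degrees of freedom. A stdlib sketch of the mean-centred variant applied after a natural-log transform; the paper states neither the log base nor the software used, and the hit counts below are invented for illustration:

```python
import math
from statistics import mean


def levene_w(groups):
    """Levene's W statistic (mean-centred variant) for k independent samples."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Absolute deviation of every observation from its own group mean.
    z = [[abs(x - mean(g)) for x in g] for g in groups]
    z_means = [mean(zg) for zg in z]
    z_grand = sum(map(sum, z)) / n_total
    between = sum(len(zg) * (zm - z_grand) ** 2 for zg, zm in zip(z, z_means))
    within = sum((v - zm) ** 2 for zg, zm in zip(z, z_means) for v in zg)
    return (n_total - k) / (k - 1) * between / within


# Hypothetical hit counts for two cells (not the study's data). All counts are
# positive, so the logarithm is defined.
raw = [[1, 2, 4, 8, 20], [1, 1, 2, 3, 5]]
logged = [[math.log(x) for x in g] for g in raw]
print(levene_w(raw), levene_w(logged))
```

A small W (relative to the critical F value) indicates that the equal-variance assumption of the subsequent ANOVA is tenable.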

Our qualitative gender observations were confirmed by an analysis of variance (ANOVA) in which Gender and Intelligence Robot were the independent variables, and Number Of Hits, Number Of Pieces and Perceived Intelligence were the dependent variables.

Gender had a significant influence on Perceived Intelligence (F(1,21) = 9.173, p = .006) and on Number Of Pieces (F(1,21) = 8.229, p = .009), but not on Number Of Hits.

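| Measurement | Intelligence Robot | Gender | Mean | Std. Dev. |
|---|---|---|---|---|
| Perceived Intelligence | low | female | 3.250 | 0.468 |
| Perceived Intelligence | low | male | 1.875 | 0.666 |
| Perceived Intelligence | low | total | 2.500 | 0.908 |
| Perceived Intelligence | high | female | 4.000 | 0.919 |
| Perceived Intelligence | high | male | 3.417 | 1.075 |
| Perceived Intelligence | high | total | 3.625 | 1.027 |
| Perceived Intelligence | total | female | 3.625 | 0.793 |
| Perceived Intelligence | total | male | 2.800 | 1.195 |
| Perceived Intelligence | total | total | 3.130 | 1.114 |
| Number Of Pieces | low | female | 4.000 | 2.000 |
| Number Of Pieces | low | male | 9.330 | 4.761 |
| Number Of Pieces | low | total | 6.910 | 4.549 |
| Number Of Pieces | high | female | 4.200 | 1.643 |
| Number Of Pieces | high | male | 5.220 | 1.856 |
| Number Of Pieces | high | total | 4.860 | 1.791 |
| Number Of Pieces | total | female | 4.100 | 1.729 |
| Number Of Pieces | total | male | 6.870 | 3.796 |
| Number Of Pieces | total | total | 5.760 | 3.382 |
| Number Of Hits | low | female | 11.600 | 11.459 |
| Number Of Hits | low | male | 8.000 | 8.509 |
| Number Of Hits | low | total | 9.640 | 9.605 |
| Number Of Hits | high | female | 4.600 | 4.278 |
| Number Of Hits | high | male | 2.670 | 3.279 |
| Number Of Hits | high | total | 3.360 | 3.629 |
| Number Of Hits | total | female | 8.100 | 8.950 |
| Number Of Hits | total | male | 4.800 | 6.270 |
| Number Of Hits | total | total | 6.120 | 7.463 |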

Table 3: Raw means and standard deviations of Perceived Intelligence, Number Of Pieces and Number Of Hits across all conditions.
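The "total" rows of Table 3 are sample-size-weighted averages of the cell means, using the cell sizes from Table 2. The sketch below recomputes them from the rounded cell means (so they agree to about two decimals) and also shows the ratio of roughly 2.9 between the 'stupid' and 'smart' robots' mean Number Of Hits. The dictionary layout and the name `marginal_mean` are illustrative.

```python
# Cell sizes (Table 2) and raw cell means (Table 3), keyed by (robot, gender).
CELL_N = {("low", "female"): 5, ("low", "male"): 6,
          ("high", "female"): 5, ("high", "male"): 9}
CELL_MEANS = {
    "Perceived Intelligence": {("low", "female"): 3.250, ("low", "male"): 1.875,
                               ("high", "female"): 4.000, ("high", "male"): 3.417},
    "Number Of Pieces": {("low", "female"): 4.000, ("low", "male"): 9.330,
                         ("high", "female"): 4.200, ("high", "male"): 5.220},
    "Number Of Hits": {("low", "female"): 11.600, ("low", "male"): 8.000,
                       ("high", "female"): 4.600, ("high", "male"): 2.670},
}


def marginal_mean(means, robot=None, gender=None):
    """Sample-size-weighted mean over the cells matching robot and/or gender."""
    cells = [c for c in means
             if (robot is None or c[0] == robot) and (gender is None or c[1] == gender)]
    return sum(CELL_N[c] * means[c] for c in cells) / sum(CELL_N[c] for c in cells)


for measure, means in CELL_MEANS.items():
    print(f"{measure}: low {marginal_mean(means, robot='low'):.2f}, "
          f"high {marginal_mean(means, robot='high'):.2f}, "
          f"overall {marginal_mean(means):.2f}")

hits = CELL_MEANS["Number Of Hits"]
ratio = marginal_mean(hits, robot="low") / marginal_mean(hits, robot="high")
print(f"'stupid' vs. 'smart' mean hits: {ratio:.1f} times")
```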

Intelligence Robot had a significant influence on Perceived Intelligence (F(1,21) = 9.442, p = .006) and on Number Of Hits (F(1,21) = 4.551, p = .045). Table 3 also shows the raw means and standard deviations of all three measurements across the two Intelligence Robot conditions.

Figure 5: Raw mean Perceived Intelligence across the Gender conditions (female, male) and the Intelligence Robot conditions (low, high)

Figure 6: Raw mean Number of Pieces across the Gender conditions (female, male) and the Intelligence Robot conditions (low, high)

Figure 7: Raw mean Number of Hits across the Gender conditions (female, male) and the Intelligence Robot conditions (low, high)

There was no significant interaction effect between Intelligence Robot and Gender, although the interaction for Number Of Pieces approached significance (F(1,21) = 3.232, p = .087). Descriptively, Figures 5 and 6 both strongly suggest the presence of an interaction effect, with males in the low-intelligence robot condition standing out from the other three groups. However, the small number of participants per condition limits the power of the ANOVA to detect such interaction effects.
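Each F statistic above has one numerator degree of freedom, so it equals the square of a t statistic with 21 degrees of freedom, and its p-value is the two-tailed p of t = sqrt(F). The stdlib sketch below integrates the t density numerically (Simpson's rule) to recover the p-values from the reported F values; for example, F(1,21) = 8.229 corresponds to p of about .009. The function names are illustrative, and a statistics library would normally be used instead.

```python
import math


def t_pdf(x, df):
    """Probability density of Student's t distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def p_from_f1(f, df_error, steps=2000):
    """Two-tailed p-value for F(1, df_error), using F = t**2.

    P(0 < T < t) is integrated with Simpson's rule; steps must be even.
    """
    t = math.sqrt(f)
    h = t / steps
    area = t_pdf(0.0, df_error) + t_pdf(t, df_error)
    for i in range(1, steps):
        weight = 4 if i % 2 else 2
        area += weight * t_pdf(i * h, df_error)
    area *= h / 3
    return 1 - 2 * area


# F values reported in Section 3.5, each with df = (1, 21).
reported = {
    "Gender -> Perceived Intelligence": 9.173,
    "Gender -> Number Of Pieces": 8.229,
    "Intelligence Robot -> Perceived Intelligence": 9.442,
    "Intelligence Robot -> Number Of Hits": 4.551,
    "Interaction -> Number Of Pieces": 3.232,
}
for effect, f in reported.items():
    print(f"{effect}: F(1,21) = {f}, p = {p_from_f1(f, 21):.3f}")
```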

We conducted a correlation analysis between Number Of Pieces and Number Of Hits. There was no significant correlation between these two variables (r = -.282, n = 25, p = .172). Furthermore, we performed a chi-square test on the raw data to analyze the association between Level Of Destruction and Intelligence Robot. Table 5 shows the frequencies of Level Of Destruction across the two Intelligence Robot conditions. There was no significant difference between the two distributions (χ² = 1.756, df = 3, p = .625).
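The non-significant correlation can be checked by hand with the standard conversion of a Pearson correlation into a t statistic with n - 2 degrees of freedom, using only the values reported above:

$$
t = \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}} = \frac{-0.282\,\sqrt{23}}{\sqrt{1-0.282^{2}}} \approx \frac{-1.352}{0.959} \approx -1.41, \qquad df = n - 2 = 23
$$

Since |t| of about 1.41 is below the two-tailed critical value of 2.069 for df = 23 at α = .05, the correlation is not significant, in line with the reported p = .172.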

| Intelligence Robot | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 |
|---|---|---|---|---|---|
| Low | 0 | 3 | 0 | 5 | 3 |
| High | 2 | 3 | 0 | 6 | 3 |
| Total | 2 | 6 | 0 | 11 | 6 |

Table 5: Frequencies of Level Of Destruction across the two Intelligence Robot conditions.
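The chi-square value can be reproduced from the counts in Table 5. No robot was classified at level 3, so that empty column carries no expected frequency and is dropped, which leaves a 2 x 4 table and therefore df = (2 - 1)(4 - 1) = 3. A stdlib sketch of Pearson's chi-square statistic:

```python
# Table 5: Level Of Destruction counts (levels 1-5) per Intelligence Robot condition.
observed = {
    "low": [0, 3, 0, 5, 3],
    "high": [2, 3, 0, 6, 3],
}


def chi_square(table):
    """Pearson chi-square for a rows x columns table, skipping all-zero columns."""
    rows = list(table.values())
    col_totals = [sum(col) for col in zip(*rows)]
    keep = [j for j, total in enumerate(col_totals) if total > 0]
    grand = sum(col_totals)
    chi2 = 0.0
    for row in rows:
        row_total = sum(row)
        for j in keep:
            expected = row_total * col_totals[j] / grand
            chi2 += (row[j] - expected) ** 2 / expected
    df = (len(rows) - 1) * (len(keep) - 1)
    return chi2, df


chi2, df = chi_square(observed)
print(f"chi2 = {chi2:.3f}, df = {df}")
```

Note that most expected counts in this table fall below 5, so the chi-square approximation is rough for data this sparse.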

Lastly, we performed a discriminant analysis to determine to what degree Number Of Hits and Number Of Pieces can predict the original Intelligence Robot setting. Table 6 shows that the 'smart' condition was predicted better than the 'stupid' condition. Overall, 76% of the original cases were classified correctly.

| | Original Intelligence Robot | Predicted: Low | Predicted: High | Total |
|---|---|---|---|---|
| Count | Low | 8 | 3 | 11 |
| Count | High | 3 | 11 | 14 |
| % | Low | 72.7 | 27.3 | 100 |
| % | High | 21.4 | 78.6 | 100 |

Table 6: Classification result from the discriminant analysis.
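The percentages in Table 6 follow directly from its counts. The sketch below recomputes the per-condition and overall accuracy and, as an additional reference point for these unequal group sizes, the accuracy of always predicting the larger ('high') group, which is 14/25 = 56%. The function name `accuracies` is illustrative.

```python
# Table 6: rows are the original condition, columns the predicted condition.
confusion = {
    "low": {"low": 8, "high": 3},
    "high": {"low": 3, "high": 11},
}


def accuracies(matrix):
    """Per-class accuracy, overall accuracy and majority-class baseline."""
    per_class = {c: row[c] / sum(row.values()) for c, row in matrix.items()}
    correct = sum(row[c] for c, row in matrix.items())
    total = sum(sum(row.values()) for row in matrix.values())
    majority = max(sum(row.values()) for row in matrix.values()) / total
    return per_class, correct / total, majority


per_class, overall, baseline = accuracies(confusion)
print({c: round(a * 100, 1) for c, a in per_class.items()}, overall, baseline)
```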

Besides the quantitative measurements, we also examined the video recordings for qualitative data. It appeared to us that many participants felt bad about killing the robot. Several participants commented: "I didn't like to kill the poor boy," "The robot is innocent," "I don't know I need to destroy it after the test. I like it, although its actions are not perfect" and "This is inhumane!"

4. Conclusion And Discussion

We attempted to determine whether users perceive 'smart' robots as more alive than 'stupid' ones. We used a new method of measuring the users' destructive behavior, since the ultimate test of whether something is alive is to kill it. We encountered several problems that limit the conclusions we can draw from the acquired data.

First, it has to be acknowledged that this new measurement method is wasteful. The robots used in this experiment were very cheap compared to the 200,000 Euros necessary for a Geminoid HI-1 robot, and it might be impossible to obtain enough funding to repeat this study with, for example, the Geminoid HI-1. From a conceptual point of view, one may then ask to what degree the results obtained with simple and cheap robots can be generalized to more sophisticated and expensive robots. In our view, the effects found with simple robots are likely to be even stronger with more sophisticated and anthropomorphic robots: people have some concerns about killing a mouse, and even more about killing a horse. Still, the constraints of funding remain. Our own funding situation did not allow us to run more participants to compensate for the gender effect. This effect turned our simple two-condition experiment into a 2x2 factorial experiment and practically halved the number of participants per condition (see Table 2). All of our statistical analyses suffer from the low number of participants, and the results should perhaps be considered more an indication than solid proof.

Having said that, we can conclude that the robot's intelligence did influence its perceived intelligence. The adjustments to the sensors' sensitivity made after the pre-test resulted in two perceivably different intelligence settings. These two Intelligence Robot settings influenced how often the participants hit the robot: the 'stupid' robot was hit about three times as often as the 'smart' one. With more participants, Number Of Pieces might also have been influenced significantly. Overall, by looking at the users' destructive behavior alone (Number Of Hits and Number Of Pieces), the discriminant model was able to predict the robot's original intelligence setting (Intelligence Robot) with 76% accuracy. This is well above the 50% chance level.

The women in this study perceived higher intelligence in the robot and also hit it more often, although the gender difference in Number Of Hits was not significant. This behavior points to a difficulty in interpreting the destructive behavior. From our qualitative analysis of the videotapes, it appeared to us that the women simply had more difficulty handling the hammer than the men. However, there are further complications. We could not find a significant correlation between Number Of Hits and Number Of Pieces. In addition to Number Of Hits, we would have had to measure the precision and force of the strikes. A single strong and accurate stroke could cause more damage than a series of light taps. However, prolonged hammering on the robot would also result in many broken pieces.

Besides these problems of appropriately measuring the destructive behavior, we also need to be careful with its interpretation. The assumption is that if participants perceive the robot as more intelligent, and hence more alive, they will be more hesitant to kill it and consequently cause less damage. However, a participant who perceived the robot as intelligent, and hence alive, could have performed a mercy killing: a single strong stroke would prevent any possible suffering. Such strokes can cause considerable damage and hence many broken pieces. Alternatively, participants could have tried to apply just enough hits to kill the robot while keeping the damage to a minimum, which would result in a series of hits of increasing power. Both behavior patterns were observed in the video recordings. Therefore, it appears difficult to draw valid conclusions about the relationship between the destructive behavior and the animacy of the robot. It appears safer to restrict our conclusions to the influence that the robot's intelligence had on the participants' behavior.

To make a better judgment about the animacy of the robot, it would have been very valuable to measure the participants' hesitation prior to their first hit and the number of encouragements the experimenter had to give. The malfunction of our video camera made this impossible, and it is embarrassing that our study was compromised by such a simple problem. Another improvement to the study would be the use of additional animacy measurements, such as a questionnaire. Had we used such measurements, we might have been able to extend our conclusions to the influence that the robots' perceived animacy had on the destructive behavior.

One may ask whether the difference in perceived intelligence simply increased the perceived financial value of the robots and hence changed the participants' destructive behavior. Of course, we are more hesitant to destroy an egg made by Fabergé than one made by Nestlé. This alternative interpretation, however, presupposes that the participants considered the robot to be a lifeless object. If people are asked to kill a living being, such as a horse, they are likely to be concerned first with the ethical issue of taking a life before considering the financial impact. Our hypothesis that participants changed their destructive behavior because they considered a certain robot to be more alive needs to be validated further with additional animacy measurements. If these measurements show no relation to the destructive behavior, then one may consider secondary factors, such as the robot's cost. If, however, there is a relationship, then it is also likely to explain a possible difference in the robot's perceived financial value. A more life-like robot would quite naturally be considered more expensive. A robot may be considered more expensive because it is perceived to be alive, but a robot is not automatically perceived as more alive because it is expensive. A highly sophisticated robot with many sensors and actuators may have a considerable price, but even simple geometric forms are perceived to be alive [11].

A qualitative video analysis showed that almost all participants giggled or laughed during the last phase of the experiment. Their reactions are to some degree similar to the behavior participants showed in Milgram's famous obedience-to-authority experiments [9]. In those experiments, participants were instructed to administer electric shocks of increasing intensity to motivate a learner in a learning task. The experimenter would urge the participant to continue if he or she was in doubt. As in this study, the participants were confronted with a dilemma and, to release some of the pressure, they resorted to laughter. Mr. Braverman, one of Milgram's participants, said in his debriefing interview:

"My reactions were awfully peculiar. I don't know if you were watching me, but my reactions were giggly, and trying to stifle laughter. This isn't the way I usually am. This was a sheer reaction to a totally impossible situation. And my reaction was to the situation of having to hurt somebody. And being totally helpless and caught up in a set of circumstances where I just couldn't deviate and I couldn't try to help. This is what got me."

The participants in this study were also in a dilemma, albeit one of smaller magnitude. On the one hand, they did not want to disobey the experimenter; on the other hand, they were reluctant to destroy the robot. Their spontaneous laughter suggests that the setup of the experiment was believable.

Overall, we can conclude that the users' destructive behavior does contain valuable information about the perceived intelligence and possibly also about the robot's animacy. We had the impression that the participants had to make a considerable ethical decision before hitting the robot. However, this measuring method is wasteful, and similar results may be obtained by other means. In a follow-up study, we modified the methodology by asking the participants not to kill the robot with a hammer but to turn a switch to its off position [1]. Prior to the experiment, we explained to the participants what consequences switching the robot off would have. To further validate our assumption that increased perceived intelligence leads to an increased perception of animacy, we added an animacy questionnaire to the study. We hope to report on this study in the near future.

We can also learn from Harper Lee's book. After being saved by Boo Radley several times, Scout feels sorry for Boo because she and Jem never gave him a chance and never repaid him for the gifts he gave them. Later, Scout feels that she can finally imagine what life is like for Boo. At last he has become a human being to her. The same might become necessary for our acceptance of robots. We may need to understand what life is like for a service robot and be grateful for the services it provides. At the same time, we need to make sure that robots do not become bullies like the blue jay.

5. References

- Bartneck, C., Hoek, M. v. d., Mubin, O., & Mahmud, A. A. (2007). "Daisy, Daisy, Give me your answer do!" - Switching off a robot. Proceedings of the 2nd ACM/IEEE International Conference on Human-Robot Interaction, Washington DC, pp. 217-222. DOI: 10.1145/1228716.1228746

- Blythe, P., Miller, G. F., & Todd, P. M. (1999). How motion reveals intention: Categorizing social interactions. In G. Gigerenzer & P. Todd (Eds.), Simple Heuristics That Make Us Smart (pp. 257-285). Oxford: Oxford University Press.

- Calverley, D. J. (2005). Toward A Method for Determining the Legal Status of a Conscious Machine. Proceedings of the AISB 2005 Symposium on Next Generation Approaches to Machine Consciousness: Imagination, Development, Intersubjectivity, and Embodiment, Hatfield.

- Goetz, J., Kiesler, S., & Powers, A. (2003). Matching robot appearance and behavior to tasks to improve human-robot cooperation. Proceedings of the 12th IEEE International Workshop on Robot and Human Interactive Communication (RO-MAN 2003), Millbrae, pp. 55-60. DOI: 10.1109/ROMAN.2003.1251796

- Intini, J. (2005). Robo-sapiens rising: Sony, Honda and others are spending millions to put a robot in your house. Retrieved January, 2005, from http://www.macleans.ca/topstories/science/article.jsp?content=20050718_109126_109126

- Kaplan, F. (2004). Who is afraid of the humanoid? Investigating cultural differences in the acceptance of robots. International Journal of Humanoid Robotics, 1(3), 1-16. DOI: 10.1142/S0219843604000289

- Koda, T. (1996). Agents with Faces: A Study on the Effect of Personification of Software Agents. Master's thesis, MIT Media Lab, Cambridge.

- Lee, H. (1960). To kill a mockingbird (1st ed.). Philadelphia: Lippincott.

- Milgram, S. (1974). Obedience to authority. London: Tavistock.

- Nass, C., & Reeves, B. (1996). The Media equation. Cambridge: CSLI Publications, Cambridge University Press.

- Scholl, B., & Tremoulet, P. D. (2000). Perceptual causality and animacy. Trends in Cognitive Sciences, 4(8), 299-309. DOI: 10.1016/S1364-6613(00)01506-0

- Sloterdijk, P. (2002). L'Heure du Crime et le Temps de l'Oeuvre d'Art. Paris: Calmann-Lévy.

- Sony. (1999). Aibo. Retrieved January, 1999, from http://www.aibo.com

- Tremoulet, P. D., & Feldman, J. (2000). Perception of animacy from the motion of a single object. Perception, 29(8), 943-951. DOI: 10.1068/p3101

- United Nations. (2005). World Robotics 2005. Geneva: United Nations Publication.

- Warner, R. M., & Sugarman, D. B. (1986). Attributions of Personality Based on Physical Appearance, Speech, and Handwriting. Journal of Personality and Social Psychology, 50(4), 792-799. DOI: 10.1037/0022-3514.50.4.792

- WowWee. (2005). Robosapien. Retrieved January, 2005, from http://www.wowwee.com/robosapien/robo1/robomain.html

- ZMP. (2005). Nuvo. Retrieved March, 2005, from http://nuvo.jp/nuvo_home_e.html

- Zykov, V., Mytilinaios, E., Adams, B., & Lipson, H. (2005). Self-reproducing machines. Nature, 435(7039), 163-164. DOI: 10.1038/435163a

© ACM, 2007. This is the author's version of the work. It is posted here by permission of ACM for your personal use. Not for redistribution. The definitive version was published in Proceedings of the 2nd ACM/IEEE International Conference on Human-Robot Interaction, Washington DC, pp. 81-87 (2007). http://doi.acm.org/10.1145/1228716.1228728