DOI: 10.1145/2559636.2559679

Zlotowski, J., Strasser, E., & Bartneck, C. (2014). Dimensions of Anthropomorphism - From Humanness to Humanlikeness. Proceedings of the ACM / IEEE International Conference on Human-Robot Interaction, Bielefeld pp. 66-73.

Dimensions of Anthropomorphism - From Humanness to Humanlikeness

HIT Lab NZ, University of Canterbury

PO Box 4800, 8410 Christchurch

New Zealand

jakub.zlotowski@pg.canterbury.ac.nz,christoph@bartneck.de

HCI & Usability Unit, ICT&S Center

University of Salzburg, Salzburg, Austria

ewald.strasser@sbg.ac.at

Abstract - In HRI, anthropomorphism has been considered to be a uni-dimensional construct. However, social psychological studies of the potentially reverse process to anthropomorphisation, known as dehumanization, indicate that there are two distinct senses of humanness, with different consequences for people who are dehumanized by being denied some aspects of these dimensions. These attributes are crucial for the perception of others as humans. Therefore, we hypothesized that the same attributes could be used to anthropomorphize a robot in HRI and that only two-dimensional measures would be suitable to distinguish between different forms of making a robot more humanlike. In a study where participants played a quiz based on the TV show “Jeopardy!” we manipulated a NAO robot’s intelligence and emotionality. The results suggest that only emotionality, not intelligence, makes robots be perceived as more humanlike. Furthermore, we found some evidence that anthropomorphism is a multi-dimensional phenomenon.

Keywords: human, robot, interaction, dehumanization, anthropomorphism

1 Introduction

Anthropomorphism, understood as the attribution of humanlike properties or characteristics to real or imagined nonhuman agents and objects [4], is a common theme appearing regularly in the field of HRI. However, it was first described long ago, by Xenophanes in the 6th century BC [18]. It has been proposed that anthropomorphism in those times had an adaptive function, as it allowed early humans to interpret ambiguous shapes as human in order to minimize the risk of being killed by an enemy or to increase the chances of finding companions [12]. In today’s society, despite the widespread tendency to anthropomorphize other objects, it has been suggested that people’s mental models of autonomous robots are especially anthropomorphic [8].

A good example of this is the 2012 science fiction movie “Prometheus” that started its promotional marketing campaign in the form of short viral videos showing a fictional future and products developed by a company known as Wayland Corporation. One of these viral ads presented the latest generation of robots known as “David 8” that are almost indistinguishable from mankind itself in a Wayland Corporation’s commercial recommending this technology. The commercial ends with a slogan “Technological, intellectual, physical, emotional”, referring to the robot. In other words, in the fictional future, the key aspects that make a machine humanlike are intelligence, physical appearance and emotions.

This is a science fiction vision of the future, but the interest in understanding what makes robots anthropomorphic is not new in the field of HRI. Previous studies proposed multiple factors as affecting humanlikeness of robots: embodiment [7], movement [25], verbal communication [23 24], emotions [5], and gestures [22]. However, all of these factors were investigated separately and there is no unifying theory of anthropomorphism that could explain what robot specific attributes will lead people to perceive a robot as more humanlike. Epley and colleagues [4] proposed a three-factor theory of anthropomorphism that identified three psychological determinants that affect the likelihood of anthropomorphizing an object: elicited agent knowledge (accessibility and applicability of anthropocentric knowledge), effectance motivation (motivation of a person for being an effective social agent) and sociality motivation (desire for engaging in social contact). This general theory of anthropomorphism is also applicable for robots. However, only some of these factors can be applied to a robot’s design in order to affect its humanlikeness and this theory does not accommodate the above mentioned factors affecting anthropomorphism in HRI. Since the core aspect of anthropomorphism is humanness, instead of looking at what makes an object humanlike, we could look at what makes a human less humanlike. We thereby take the opposite approach to the problem as humanness can be a key to understanding why these numerous factors affect robots’ anthropomorphism and what is the relation between them. The potential of this approach in HRI can be seen in the work of Gray et al. [11] who showed that the uncanny valley is affected only by some factors of humanlikeness, but not all.

1.1 Dehumanization

Waytz et al. [27] proposed that dehumanization, defined as "representing human agents as nonhuman objects or animals and hence denying them human-essential capacities" could be a reverse process to anthropomorphism. The research on dehumanization has gathered new interest in social psychology in the last decade after the publication of Leyens et al. [19] who showed that people attribute differently certain emotions to in-group and out-group members. Those characteristics perceived to be the essence of humanness were attributed to in-group members, but not out-group members. Therefore, the out-group members were perceived as less human.

This work was developed further by Haslam [13] who proposed a model of dehumanization that involves two distinct senses of humanness. Denying characteristics that are Uniquely Human (UH) leads to perception of humans as animal-like. On the other hand, denying attributes that are Human Nature (HN) leads to mechanistic dehumanization where people are seen as automata. The UH dimension reflects mainly high cognition and some of the key attributes of it are intelligence, intentionality, secondary emotions or morality. The HN dimension is represented by primary emotions, sociability or warmth. These two senses of humanness differ in the consequences when people are deprived of them, e.g. a person deprived of UH traits is perceived as disgusting, while lack of HN traits implies indifference and lack of empathy [13].

Another research focus on perception of the mind led to supporting findings. Gray et al. [9] found that the mind perception of human and nonhuman agents was explained by two factors: Agency and Experience. Characteristics that form Agency are: morality, memory, planning or communication. Those which form Experience include feeling fear, pleasure or having desires. What is important is that these two dimensions map well on those proposed for humanness [14]. Agency corresponds to UH and Experience to HN.

The work on dehumanization recently started receiving attention in the context of HRI. However, these studies used dehumanization mainly as a measurement tool for the anthropomorphism directed at robots e.g. [5 17 22]. The previous work on humanlikeness in HRI considered anthropomorphism as a uni-dimensional space from a machine to a human. However, since humanness is the essence of anthropomorphism, the studies of dehumanization can indicate that anthropomorphism can be at least two-dimensional. Therefore, in order to accurately represent a robot’s perceived humanlikeness it would be necessary to measure it on these two dimensions (an exception is [6] where a robot’s anthropomorphism was measured on two scales of humanness, but they were not considered as different dimensions of that construct).

Furthermore, that would also mean that not only the level of a robot’s anthropomorphism affects HRI, but also the way in which people anthropomorphize it. HN traits distinguish humans from machines. It is possible that if we attribute these traits to a robot it will not only be perceived as more humanlike, but at the same time as less machine-like. However, in the case of attributing UH traits the perception of a robot could be more humanlike and less animal-like, but not necessarily less machine-like.

The relationship between the two-dimensional model and previously investigated factors affecting anthropomorphism in HRI will need to be established. Some of these factors, such as verbal communication [23 24] or emotions [5], can be easily attributed to one of the dimensions. Other factors, that were not included in the model of dehumanization can affect both dimensions of anthropomorphism, such as predictability of a robot’s behavior [6], or only one, such as embodiment [11].

If anthropomorphism is a reverse process to dehumanization and there are two distinct and independent senses of humanness, a robot can be perceived differently depending on the dimension on which it is anthropomorphised. Therefore, a robot provided with HN traits should be rated higher mainly on that dimension. Similarly, a robot provided with UH attributes should be rated higher on the UH dimension. A uni-dimensional scale of anthropomorphism should be affected by both factors, but it will be unable to distinguish between their effects. In other words, uni-dimensional measures of anthropomorphism treat HN and UH traits equally, when theoretically they could be distinct. This distinction may be necessary in order to better understand the impact of anthropomorphism on HRI, e.g. it has been suggested that the uncanny valley [20] is caused only by the HN (Experience), but not the UH (Agency) dimension [11]. As it is not possible to include all factors affecting both dimensions in a single experiment, we decided to choose intelligence from the UH dimension and emotionality from the HN dimension, as previous studies showed them to significantly affect robots’ perceived animacy and humanlikeness, e.g. [3 5]. Therefore, we wanted to investigate how these two factors would affect two-dimensional measures of anthropomorphism and whether these measures provide any additional information over a well-established uni-dimensional measure. These assumptions lead us to the following research questions:

- RQ1: Are there two dimensions of anthropomorphism?

- RQ2a: Does a robot’s intelligence affect mainly the UH dimension?

- RQ2b: Does a robot’s emotionality affect mainly the HN dimension?

2 Method

Our study was conducted using a 2x2 between-subjects design: robot’s emotionality (emotional vs unemotional) and intelligence (high vs low intelligence). We have measured a robot’s anthropomorphism using the scale derived from [16], and attribution of traits for the UH and HN dimensions [15].

2.1 Materials and apparatus

All the questionnaires were administered using PsychoPy v1.77 [21] running on a PC. During the experiment, participants interacted with a NAO, a small humanoid robot. As the robot is not capable of facial expressions, we implemented positive reactions through characteristic sounds, such as “Yippee”, and gestures, such as raising its hands. It should be noted that body cues are reported to be more important than facial expressions for discriminability of intense positive and negative emotions [1], as used in this experiment. The negative reactions were represented by negative characteristic sounds, such as “Ohh”, and gestures, such as lowering the head and torso. For each piece of feedback provided by a participant the robot’s reaction was slightly different. Therefore, there were 5 positive and 5 negative animations that were presented in random order. Unemotionality was implemented by the robot saying “OK” and randomly moving its hands in order to ensure a similar level of animacy as in the emotional conditions. For every utterance made by the participants the robot acted slightly differently.

The robot’s responses to the quiz questions were prepared prior to the experiment. The NAO robot was controlled by a wizard sitting behind a curtain and ensuring appropriate reactions. In the intelligent condition, the robot’s responses were always correct. In the unintelligent condition they were based on the computer Watson’s most probable wrong answers as presented on the second day of the “Jeopardy! Watson challenge”. We did this in order to ensure that the manipulation of intelligence represents the latest state of the art in AI and that even the wrong answers would make sense and be possible. There is certainly a question regarding what the appropriate level of intelligence for a robot is. Previous studies manipulated a robot’s intelligence on a very low level, such as following a light [3]. In Wizard-of-Oz experiments it is also possible to drift into a fictional future where robots have knowledge far superior to that of humans, with unrestricted access to information. However, we believe that the current state of the art of AI in some conditions allows robots to reach a level of intelligence that is close to human intelligence. Watson’s victory in “Jeopardy!” against multi-champion human opponents was a widely publicized example of this. Watson’s responses, even if incorrect, were mainly realistic, although at times they exhibited its non-human nature. As in the near future it will be possible to provide a similar level of knowledge to robots, it is important to explore how it is going to affect HRI.

2.2 Measurements

In the experiment we have used several questionnaires as dependent measures. Individual Differences in Anthropomorphism Questionnaire (IDAQ) [26] was used to ensure that participants did not differ in their general tendency to anthropomorphize between the conditions. Participants rated on a Likert scale from 0 (not at all) to 10 (very much) to what extent different non-human objects possess humanlike characteristics (e.g. “To what extent does a tree have a mind of its own?”). We conducted manipulation checks to ensure that we had successfully manipulated the factors. We used the Godspeed perceived intelligence scale [2] for the robot’s intelligence, that measures five items on a 5-point Likert scale (e.g. “Please rate your impression of the NAO on these scales: 1 - incompetent, 5 - competent”). The manipulation of the robot’s emotionality was measured using the extent to which NAO is capable of experiencing primary emotions [5] that had ten items on a 1 - not at all, to 7 - very much, Likert scale (e.g. “To what extent is the NAO capable of experiencing the following emotion? Joy”).

As a uni-dimensional scale of anthropomorphism we used the questionnaire from [16]. It measures six items on a 5-point Likert scale (e.g. “Please rate your impression of the NAO on these scales: 1 - artificial, 5 - natural”). We included this scale in order to confirm that both factors affect the robot’s perceived anthropomorphism and to investigate the relation between uni- and two-dimensional scales. The two-dimensional scale of anthropomorphism was based on the degree to which participants attributed UH and HN traits to the robot [15]. Both dimensions had 10 items (see Table 1) and were measured together as a single 20-item questionnaire with a Likert scale from 1 (not at all) to 7 (very much) (e.g. “The NAO is ... shallow”).

Table 1: List of Uniquely Human and Human Nature traits used as a measurement of two dimensions.

| Uniquely Human | Human Nature |
| --- | --- |
| broadminded | curious |
| humble | friendly |
| organized | fun-loving |
| polite | sociable |
| thorough | trusting |
| cold | aggressive |
| conservative | distractible |
| hard-hearted | impatient |
| rude | jealous |
| shallow | nervous |

2.3 Participants

Forty participants were recruited at the University of Canterbury. They received $5 vouchers as compensation for their time. They were all native or fluent English speakers. This paper is part of a bigger study that also included an implicit measurement of anthropomorphism, which required the exclusion of non-native English speaking participants due to their weaker implicit associations for UH and HN traits. In order to keep the results consistent we discarded the data of these five participants from all the analyses. Out of the remaining 35 participants, 19 were female. There were 24 undergraduate and 8 postgraduate students, 1 staff member and 2 participants not associated with the university. All of them had normal or corrected-to-normal vision and none of them had ever interacted with a robot. Their ages ranged from 18 to 48 years with a mean age of 26.69. The participants were mostly New Zealanders (28) or British (4). Six participants indicated that they had previously watched the “Jeopardy!” TV show at least once.

2.4 Procedure

Participants were told before the experiment that they would play a quiz with a robot. They entered the experimental room together with the experimenter and were seated in front of a computer. NAO was sitting at the other end of the room. After reading an information sheet about the study and filling out consent forms, the participants were asked to answer demographic and IDAQ questionnaires. Then they were informed that they would now interact with the robot. They were told that the robot was called NAO, as the word would appear later in the tasks to be completed on the computer, and that they would play a quiz based on the “Jeopardy!” TV show. Participants were told that in this game show contestants are presented with general knowledge clues that are phrased as answers, and they must give their responses in the form of a question. Therefore, it requires not only great knowledge but also good language understanding skills, so it should not be surprising that a computer called Watson, which managed to beat human champions in the “IBM Challenge” in 2011, was regarded as proof of great progress in AI development. In the game with NAO, participants took the role of the host reading clues to NAO, which took the role of the contestant.

On the table standing next to the robot were placed cards with clues and, above them, the categories of clues. The correct response was also written on each card. The cards were placed upside-down with the clues facing the table. On top of each card was the value of the clue, which was supposed to represent the theoretical difficulty of a question, as in the real show. In total there were six categories of clues. All these categories and clues were identical to those in the second match of the “IBM Challenge”. The rules of the game were explained to participants using an example clue, and they were asked to read the clues at their normal pace. Unlike in the original show, they were told that the correct response of the robot must be identical to what was on the paper. Otherwise the response was incorrect, even if the meaning of NAO’s response was similar. They were also asked to provide feedback to the robot on whether its response was correct or wrong and then proceed to the next question. After participants confirmed that they understood the instructions, they were told that they were assigned to the “EU, the European Union” category, and that they had to ask five questions from that category in any order they wished and remember how many answers the robot provided correctly. All the participants were assigned to this category in order to ensure that there would be no potential differences in difficulty between the categories. Then the experimenter touched the robot’s head and said that he would leave the participant alone with the robot for the task. The wizard started the robot, which stood up and introduced itself as NAO. At that point the participants started reading clues for the robot. Depending on the condition the robot either responded correctly or wrongly and waited for a participant’s feedback. In the emotional condition, positive feedback led to a positive response and negative feedback to a negative response. In the unemotional condition the robot always behaved unemotionally. After answering the fifth question, the robot reminded the participants that it was the last question, thanked them for participation, asked them to inform the experimenter who was waiting outside and finally sat down.

When participants called the researcher, they had to say how many times the robot had answered correctly and they were asked to continue the tasks on the computer. Participants completed implicit measurement that is not included in this paper and then filled in the remaining questionnaires. At the end of the experiment they were debriefed and released. The whole procedure took approximately 25 minutes.

3 Results

We have conducted analyses of the data in three steps. Firstly, we checked whether the manipulations introduced in the experiment worked as expected. Secondly, we looked at the factors affecting the robot’s anthropomorphism. Thirdly, we investigated the relationships between the measures of anthropomorphism and its dimensionality. The assumptions of all the presented statistical analyses were checked and met, unless otherwise specified.

3.1 Manipulation check

As a precondition, the random assignment of subjects to the experimental groups should result in a lack of differences between the groups in the general tendency to anthropomorphize objects, as measured with the IDAQ questionnaire. This was confirmed, as a 2-way ANOVA with intelligence and emotionality conditions as between-subjects factors did not indicate statistically significant differences between the experimental conditions (see measure 1 in Tables 2 and 3). Furthermore, we did not find any interaction effects between IDAQ scores and experimental conditions on measurements of two-dimensional anthropomorphism. Therefore, the general tendency to anthropomorphize was dropped from further analysis.

Table 2: Results of 2-way ANOVAs with intelligence and emotionality conditions as between-subjects factors for all dependent variables.

| Measures | Intelligence dfb | Intelligence dfw | Intelligence F | Intelligence η²G | Emotionality dfb | Emotionality dfw | Emotionality F | Emotionality η²G | Intelligence * Emotionality dfb | Intelligence * Emotionality dfw | Intelligence * Emotionality F | Intelligence * Emotionality η²G |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 IDAQ | 1 | 31 | .65 | .02 | 1 | 31 | 2.96 | .09 | 1 | 31 | .17 | .006 |
| 2 Perceived Intelligence | 1 | 31 | 28.35*** | .48 | 1 | 31 | 2.17 | .07 | 1 | 31 | .2 | .006 |
| 3 Emotions | 1 | 31 | <.001 | <.001 | 1 | 31 | 7.99** | .21 | 1 | 31 | 2.26 | .07 |
| 4 Anthropomorphism | 1 | 31 | .63 | .02 | 1 | 31 | 9.28** | .23 | 1 | 31 | 2.13 | .06 |
| 5 UH | 1 | 31 | 1.62 | .05 | 1 | 31 | .20 | .006 | 1 | 31 | 1.6 | .05 |
| 6 HN | 1 | 31 | .04 | .001 | 1 | 31 | 5.28* | .15 | 1 | 31 | 1.42 | .04 |
| 7 UH - HN | 1 | 31 | 1.48 | .05 | 1 | 31 | 10.37** | .25 | 1 | 31 | .002 | <.001 |

*p < .05, **p < .01, ***p < .001
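The effect sizes in Table 2 can be roughly cross-checked from the reported F values. The sketch below assumes that, for a fully between-subjects design in which both factors are manipulated, generalized eta squared coincides with partial eta squared, which can be recovered as F·dfb / (F·dfb + dfw). The function name is hypothetical and this is not necessarily how the authors computed the table.

```python
def partial_eta_squared(f_value: float, df_between: int, df_within: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    if f_value < 0 or df_between <= 0 or df_within <= 0:
        raise ValueError("F must be non-negative and both df must be positive")
    effect = f_value * df_between
    return effect / (effect + df_within)


# (measure, effect) -> F value copied from Table 2; every test has df = (1, 31).
significant_effects = {
    ("Perceived Intelligence", "intelligence"): 28.35,
    ("Anthropomorphism", "emotionality"): 9.28,
    ("HN", "emotionality"): 5.28,
    ("UH - HN", "emotionality"): 10.37,
}

for (measure, effect), f_value in significant_effects.items():
    eta = partial_eta_squared(f_value, df_between=1, df_within=31)
    print(f"{measure} ({effect}): eta squared = {eta:.2f}")
```

For these four effects the recovered values agree with the table to two decimals (.48, .23, .15, .25). The emotionality effect on Emotions comes out as .20 rather than the reported .21, so the table was presumably computed from the raw sums of squares rather than from rounded F values.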

Table 3: Mean scores and standard deviations for dependent measures presented by the levels of experimental conditions.

| Conditions | Levels | 1 IDAQ M | 1 IDAQ σ | 2 Perc. Intel. M | 2 Perc. Intel. σ | 3 Emotions M | 3 Emotions σ | 4 Anthro. M | 4 Anthro. σ | 5 UH M | 5 UH σ | 6 HN M | 6 HN σ | 7 UH - HN M | 7 UH - HN σ |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Emotionality | Low | 2.75 | 1.17 | 3.59 | .93 | 1.62 | .98 | 1.86 | .59 | 3.11 | .92 | 2.08 | .67 | 1.03 | .83 |
| Emotionality | High | 3.45 | 1.27 | 3.8 | .78 | 2.86 | 1.53 | 2.51 | .65 | 2.92 | .89 | 2.77 | 1.07 | .15 | .72 |
| Intelligence | Low | 2.99 | 1.27 | 3.13 | .78 | 2.38 | 1.6 | 2.34 | .74 | 2.82 | .88 | 2.47 | 1.02 | .35 | .73 |
| Intelligence | High | 3.26 | 1.27 | 4.24 | .46 | 2.22 | 1.3 | 2.09 | .65 | 3.18 | .9 | 2.44 | .94 | .74 | .99 |

Furthermore, we manipulated the robot’s emotionality and intelligence and expected that these manipulations would significantly affect the degree to which the robot is perceived as emotional and intelligent. A robot that expresses emotionality should be attributed more primary emotions, but its perceived intelligence should not be affected by this manipulation. Moreover, manipulation of a robot’s intelligence should affect its perceived intelligence, but not the attribution of emotions. Since knowledge does not equate to intelligence, it was possible that the robot in the unintelligent condition would be perceived simply as less knowledgeable, but not necessarily less intelligent.

A two-way ANOVA with intelligence and emotionality conditions as between-subjects factors showed that there was only a main effect of the manipulation of the robot’s emotional response on the attribution of primary emotions to it (see measure 3 in Tables 2 and 3). The robot was perceived as more emotional when it expressed emotions compared with the condition where its feedback was unemotional.

Similarly, a 2-way ANOVA with intelligence and emotionality conditions as between-subjects factors indicated only a main effect of the intelligence condition on the perceived intelligence of the robot (see measure 2 in Tables 2 and 3). The robot in the intelligent condition (providing correct responses) was perceived as significantly more intelligent than when it provided incorrect responses (unintelligent condition). These results indicate that our manipulations were successful and that the more knowledgeable robot was also perceived as more intelligent.

3.2 Factors of anthropomorphism

In the next stage of the analyses we investigated what factors affect anthropomorphism. First, we analyzed how the robot’s humanlikeness is affected by its intelligence and emotionality using the questionnaire of anthropomorphism. We hypothesized that both factors would significantly affect perceived anthropomorphism. A two-way ANOVA with intelligence and emotionality conditions as between-subjects factors was conducted to establish the impact of these factors on anthropomorphism. Our hypothesis was only partially confirmed. There was a significant main effect of the emotionality condition on perceived anthropomorphism (see measure 4 in Tables 2 and 3).

The robot was perceived as more anthropomorphic when it provided emotional feedback than when its feedback was unemotional. The main effect of the intelligence condition was not significant. In other words, the robot’s intelligence did not affect its perceived anthropomorphism. Furthermore, we did not distinguish between positive and negative emotional responses of the robot, which could potentially be confounded with the intelligence condition. However, the interaction effect between the two factors was not significant, which implies that the type of the emotional response (positive or negative) did not affect the perceived humanlikeness.

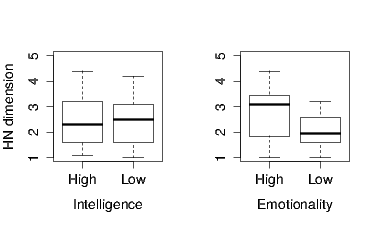

Second, we analyzed data for two dimensions of anthropomorphism based on studies of dehumanization. Both scales had sufficient reliability: UH Cronbach’s α = .71 and HN Cronbach’s α = .84. In particular, we hypothesized that the HN dimension would be affected only by the emotionality factor, but not the intelligence factor. As hypothesized, using a 2-way ANOVA with intelligence and emotionality conditions as between-subjects factors we found only the main effect of emotionality on HN to be statistically significant (see measure 6 in Tables 2 and 3). When the robot reacted emotionally it was attributed more HN traits than when it was unemotional, as shown in Figure 1.

Figure 1: Rating of HN dimension. Attribution of HN traits in experimental conditions for intelligence and emotionality factors.
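The reliability coefficients reported for the UH and HN scales are Cronbach’s α values. As a minimal sketch of how such a coefficient is obtained, the snippet below applies the standard formula α = k/(k-1) · (1 - Σ item variances / variance of total scores) to a participants-by-items matrix. The function name and the example ratings are purely illustrative and are not data from this study.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of per-participant item ratings.

    responses: list of rows, one row per participant, one rating per item.
    """
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two participants")
    if any(len(row) != n_items for row in responses):
        raise ValueError("every participant must rate every item")

    # Sample variance of each item across participants.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each participant's summed score.
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")

    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Made-up ratings on a 1-7 scale: 5 participants x 3 items (illustration only).
example = [
    [2, 3, 2],
    [4, 4, 5],
    [6, 5, 6],
    [3, 3, 4],
    [5, 6, 5],
]
print(f"alpha = {cronbach_alpha(example):.2f}")
```

In the study each scale had 10 items, so each row would hold ten ratings for the UH or HN traits listed in Table 1.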

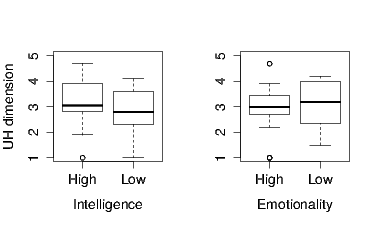

Similarly, we hypothesized that the UH dimension would be affected only by the intelligence factor, but not emotionality. Our results did not support this hypothesis. A two-way ANOVA with intelligence and emotionality conditions as between-subjects factors showed that neither of the factors, nor the interaction between them, significantly affected the attribution of UH traits (see measure 5 in Tables 2 and 3, and Figure 2).

Figure 2: Rating of UH dimension. Attribution of UH traits in experimental conditions for intelligence and emotionality factors.

3.3 Relationship between different dimensions

In the following step of the analyses we calculated a single score of the robot’s humanlikeness that could show whether one of the dimensions is dominant. In order to do that we subtracted the HN rating from the UH rating, whereby a positive score means that the robot was anthropomorphised more strongly on the UH dimension and a negative score indicates that the robot was anthropomorphised more strongly on the HN dimension. A score close to 0 indicates that the robot was anthropomorphised equally on both dimensions or that it was not anthropomorphised on either of them.
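The difference score described above is a simple subtraction, sketched here for concreteness. The function names and the `tolerance` parameter are hypothetical. The example inputs are the condition means from Table 3, used only to show that the reported UH - HN means are consistent with the UH and HN means.

```python
def uh_minus_hn(uh_score: float, hn_score: float) -> float:
    """Difference score: positive favours UH, negative favours HN."""
    return uh_score - hn_score


def dominant_dimension(difference: float, tolerance: float = 0.0) -> str:
    """Label the dominant dimension; `tolerance` is an illustrative assumption."""
    if difference > tolerance:
        return "UH"
    if difference < -tolerance:
        return "HN"
    return "neither"


# Mean UH and HN ratings per emotionality level, copied from Table 3.
table3_means = {"low emotionality": (3.11, 2.08), "high emotionality": (2.92, 2.77)}

for condition, (uh, hn) in table3_means.items():
    diff = uh_minus_hn(uh, hn)
    print(f"{condition}: UH - HN = {diff:.2f} -> {dominant_dimension(diff)}")
```

Both differences (1.03 and .15) match the UH - HN column of Table 3, and both are positive, meaning that UH ratings exceeded HN ratings in either emotionality condition.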

We conducted another 2-way ANOVA with intelligence and emotionality conditions as between-subjects factors and found only the emotionality condition to significantly affect the UH - HN score (see measure 7 in Tables 2 and 3). A robot that had high emotionality was given a significantly lower UH - HN score. This supports our hypothesis that a robot that expresses emotions will be anthropomorphised more strongly on the HN dimension. Furthermore, although intelligence did not statistically significantly affect the score, it is worth noting that the mean scores (see measure 7 in Table 3) exhibit the opposite trend: an increase in a robot’s intelligence leads to a higher UH - HN score. That can suggest a closer relation between intelligence and the UH dimension.

Finally, in order to better understand the relation between the various dependent variables used in this study, we conducted a series of correlations (see Table 4). Because the UH - HN score is a function of the UH and HN dimensions, we found a strong positive correlation between the UH dimension and the UH - HN score. Similarly, there was a strong negative correlation between HN and the score of difference between the dimensions. What is more interesting is that only HN was significantly and strongly positively correlated with anthropomorphism, while UH was not correlated with it. However, what matters most for our model is the relation between UH and HN, in order to establish whether there are two distinct dimensions of anthropomorphism or only one. We found a strong and highly significant correlation between these two dimensions, which could indicate that they are not independent and measure identical constructs.

Based on these results, we have followed our analyses a step further and explored that relation. We divided HN and UH scores into two categories (high vs low) using median splits - scores below the median were qualified as low HN or UH, while the scores above the median formed the high HN and UH groups. Finally, we correlated the UH and HN scores for each of the categories separately. There was a strongly positive and statistically significant correlation for low HN subjects between their HN and UH scores, r = .67, N = 18, p = .003. However, for high HN subjects their HN and UH scores were not correlated, r = .15, N = 17, p = .58. Similarly, we found a strongly significant and positive correlation for low UH subjects, between their UH and HN scores, r = .65, N = 18, p = .004, but no correlation for high UH subjects between their UH and HN scores, r = .22, N = 17, p = .4. These results indicate that the relation between these two dimensions is not completely straightforward. The dimensions are related for half the subjects, but not the other half, which might indicate that UH and HN do not measure exactly the same aspects of humanlikeness.
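The median-split analysis can be sketched as follows. This is an illustration under stated assumptions, not the authors’ script: scores equal to the median are placed in the low group (an assumption that would be consistent with the reported group sizes of 18 and 17), the function names are hypothetical, and the scores are made up.

```python
from math import sqrt
from statistics import mean, median


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equally long sequences."""
    mean_x, mean_y = mean(xs), mean(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


def median_split_correlations(split_on, other):
    """Split participants at the median of `split_on`, then correlate
    `split_on` with `other` separately within the low and high groups."""
    cutoff = median(split_on)
    pairs = list(zip(split_on, other))
    low = [pair for pair in pairs if pair[0] <= cutoff]   # assumption: ties go low
    high = [pair for pair in pairs if pair[0] > cutoff]
    return {
        "low": pearson_r(*zip(*low)),
        "high": pearson_r(*zip(*high)),
    }


# Made-up HN and UH scores for eight participants (illustration only).
hn_scores = [1.5, 2.0, 2.4, 2.9, 3.3, 3.8, 4.1, 4.6]
uh_scores = [2.0, 2.6, 2.9, 3.5, 3.1, 3.4, 2.8, 3.6]

result = median_split_correlations(hn_scores, uh_scores)
print(f"low HN: r = {result['low']:.2f}, high HN: r = {result['high']:.2f}")
```

The sketch does not guard against a group with zero variance, which would make the correlation undefined.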

Finally, we investigated the dimensionality of anthropomorphism by conducting a confirmatory factor analysis. We used a maximum likelihood estimator in order to establish how well a model with 2 factors (UH and HN) and 10 parameters on each factor can explain the total score of HN and UH. Our two-factor model showed a poor fit to the data, χ²(169, N = 35) = 358.15, p < .001; RMSEA = .18; SRMR = .19. However, a single-factor model of anthropomorphism was also a poor fit, χ²(170, N = 35) = 370.92, p < .001; RMSEA = .18; SRMR = .16. This indicates that future studies should have a much bigger sample size in order to establish the optimal number of dimensions of anthropomorphism.
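The reported RMSEA values can be approximately reproduced from the χ² statistics. The sketch below uses the common point estimate RMSEA = sqrt(max(χ² - df, 0) / (df · N)). Some software divides by N - 1 instead of N; which convention the authors’ software used is an assumption here, and the function and parameter names are hypothetical.

```python
from math import sqrt


def rmsea(chi_square: float, df: int, n: int, use_n_minus_1: bool = False) -> float:
    """Point estimate of RMSEA from a model chi-square, its df and sample size."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n must exceed 1")
    scale = n - 1 if use_n_minus_1 else n
    return sqrt(max(chi_square - df, 0.0) / (df * scale))


# Chi-square values and degrees of freedom as reported in the text, N = 35.
models = {"two-factor": (358.15, 169), "single-factor": (370.92, 170)}

for name, (chi_square, df) in models.items():
    print(f"{name}: RMSEA = {rmsea(chi_square, df, n=35):.2f}")
```

With N in the denominator both models come out at .18, matching the reported values; with N - 1 the single-factor model would round to .19 instead.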

Table 4: Correlations Between Measures with Pearson’s r coefficient.

| Measure | UH | HN | Anthro. | UH - HN |
| --- | --- | --- | --- | --- |
| UH | - | .55*** | .14 | .41** |
| HN | .55*** | - | .47** | -.53*** |
| Anthro. | .14 | .47** | - | -.38* |
| UH - HN | .41** | -.53*** | -.38* | - |

*p < .05, **p < .01, ***p < .001

4 Discussion

In this study we investigated whether anthropomorphism, like humanness, is a two-dimensional rather than a uni-dimensional phenomenon. In particular, we hypothesized that the dimensions of humanness (UH and HN) and the factors that affect them could be used as a model of anthropomorphism for HRI.

Previous studies showed that a robot’s intelligence and emotionality affect its perceived life-likeness and humanlikeness. Our study confirmed that a robot that expressed emotions was perceived as more anthropomorphic than one that reacted unemotionally. However, its intelligence did not affect the measure of anthropomorphism. This result contradicts [3]. We think that the different results obtained in these two studies are mainly due to the manipulation and the measurement used. Bartneck and colleagues used a very low level of intelligence, namely a robot following a light. In contrast, the intelligence manipulation used in our study represents the latest state of the art in AI and refers to a knowledge-based type of intelligence that is specific to humans. It is possible that participants saw the intelligent robot as too intelligent and that this led to lower anthropomorphization. The questions asked in this quiz are not easy to answer even for humans. Participants may therefore not have known the answers to some of the questions themselves and may have perceived the robot as too intelligent for a human being, in which case it would not be perceived as more humanlike than a robot that answered incorrectly.

However, a more plausible explanation is that even in the unintelligent condition the robot’s responses, although incorrect, still made sense and were possible answers rather than completely random ones. In that sense, the robot still displayed high communication skills and a good understanding of human language. Although it was perceived as more intelligent in the intelligent condition, it is possible that in the unintelligent condition its responses were humanlike enough for subjects to anthropomorphize it. This explanation is further supported by the fact that even in the highly emotional condition the robot was still anthropomorphised more strongly on the UH than on the HN dimension, as indicated by mean UH - HN scores that never dropped below 0. This means that, irrespective of the experimental condition, participants attributed more UH than HN traits to the robot.

The second major difference between the study by Bartneck et al. [3] and ours is the measurement of anthropomorphism. We measured the robot’s humanlikeness using a questionnaire, whereas in the other study anthropomorphism was measured by the number of hits inflicted upon a robot that was supposed to be killed. However, a stronger tendency to kill a robot under certain conditions does not necessarily mean that it is perceived as more anthropomorphic; it may merely be perceived as more animate. Therefore, although intelligence can make a robot more life-like, it does not necessarily make it more humanlike. Different factors could affect these two phenomena. It is possible that intelligence is less important as a factor of anthropomorphism in the context of robotics because people expect robots to be intelligent. As such, there could be a high anthropomorphism baseline that includes high expectations of intelligence and cannot easily be exceeded.

The analyses of the two dimensions of anthropomorphism provided only partial confirmation of our hypothesis for RQ1. The HN dimension, also known as Experience, was affected only by the robot’s emotionality, not by its intelligence. This finding is consistent with previous work on dehumanization (e.g. [13]), but it contradicts previous work in HRI [6], which found no difference between the two dimensions as dependent variables. The second dimension, UH (Agency), was supposed to be influenced by intelligence; however, in our study neither factor influenced it. Intelligence did not have an effect on anthropomorphism measured on either a uni-dimensional or a two-dimensional scale.

It is possible that other factors, such as intentionality or communication capabilities [13], affect the UH dimension more strongly. Therefore, future studies should further explore the role of agency and its different sub-factors in order to better understand its outcomes in HRI. An alternative explanation could be that only the factors from one dimension (HN) play a role in a robot’s perceived humanlikeness. HN is the dimension that is supposed to distinguish humans from automata [13], so it is possible that only the factors from this dimension affect a robot’s anthropomorphism. In particular, intelligence did not significantly affect anthropomorphism, which may mean that dehumanization is not necessarily the reverse process to anthropomorphism. This emphasizes the need for researchers to be careful when applying findings from dehumanization research in the context of robotics.

However, even if the model of humanness cannot be directly applied to anthropomorphism, it is possible that both are multi-dimensional phenomena. We believe that although our results cannot provide a definite answer, due to the limited impact of intelligence on humanlikeness, they are promising enough to spur further research on this topic. Our data showed that the robot’s emotional capabilities led it to be anthropomorphised more strongly on the HN dimension. Although it was not statistically significant, we noticed the opposite trend for intelligence, which mainly affected the UH dimension. Such different forms of anthropomorphization cannot be distinguished using only uni-dimensional measurement tools. However, in order to fully benefit from such a distinction, it is necessary to research what the different consequences for HRI are depending on how a robot is anthropomorphised. In our future research we plan to address the question of whether people will have different mental models of a robot and behave differently when interacting with it, similarly to their changed behaviour towards dehumanized others, e.g. [10, 13].

Apart from investigating further which factors affect anthropomorphism, how they do so, and what its consequences are, it is crucial to investigate the existence of its dimensions and the relationship between them. For anthropomorphism to be at least two-dimensional, the dimensions must be independent to a certain degree. If both dimensions measured the same construct, there would be hardly any benefit in measuring anthropomorphism on these dimensions rather than using uni-dimensional scales. However, our data not only suggested different effects of the independent variables on the two dimensions of anthropomorphism, but also showed that these dimensions are at least partially independent. The initial correlation between the UH and HN dimensions was significant. However, further analyses revealed that they were correlated only when the robot was attributed no or few characteristics of these dimensions. When the robot was anthropomorphised on either of the dimensions, they were independent.

In summary, we believe that the question of the dimensionality of anthropomorphism should receive further attention from the HRI community. It can help us not only to better understand which factors affect a robot’s perceived humanlikeness, but also to measure more accurately how it is being anthropomorphised. Ultimately, HRI can be improved by giving a robot only those humanlike characteristics that facilitate the interaction and by avoiding human attributes that could hamper it.

5 Acknowledgments

The authors would like to thank Dieta Kuchenbrandt and anonymous reviewers for invaluable feedback that made this paper more compelling and interesting. Furthermore, we thank Anthony Poncet, Eduardo Sandoval and Jürgen Brandstetter for help with running the experiment.

Footnotes

1. The results of all conducted ANOVAs described in this paper are in Table 2. For the mean scores and standard deviations of all dependent measures please refer to Table 3. In the results section each described statistical test has a reference to the specific row and column in the tables with numeric values that are relevant for that analysis, e.g. "see Table 2₁ and 3₁" means that the results of the presented ANOVA can be found in Table 2 row number 1, and the mean scores and standard deviations are in Table 3 column number 1.

6 References

[1] H. Aviezer, Y. Trope, and A. Todorov. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science, 338(6111):1225–1229, Nov. 2012.

[2] C. Bartneck, D. Kulic, E. Croft, and S. Zoghbi. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. International Journal of Social Robotics, 1(1):71–81, 2009.

[3] C. Bartneck, M. Verbunt, O. Mubin, and A. Al Mahmud. To kill a mockingbird robot. In HRI 2007 - Proceedings of the 2007 ACM/IEEE Conference on Human-Robot Interaction - Robot as Team Member, pages 81–87, Arlington, VA, United States, 2007.

[4] N. Epley, A. Waytz, and J. T. Cacioppo. On seeing human: A three-factor theory of anthropomorphism. Psychological Review, 114(4):864–886, Oct. 2007.

[5] F. Eyssel, F. Hegel, G. Horstmann, and C. Wagner. Anthropomorphic inferences from emotional nonverbal cues: A case study. In Proceedings - IEEE International Workshop on Robot and Human Interactive Communication, pages 646–651, Viareggio, Italy, 2010.

[6] F. Eyssel, D. Kuchenbrandt, and S. Bobinger. Effects of anticipated human-robot interaction and predictability of robot behavior on perceptions of anthropomorphism. In HRI 2011 - Proceedings of the 6th ACM/IEEE International Conference on Human-Robot Interaction, pages 61–67, Lausanne, Switzerland, 2011.

[7] K. Fischer, K. S. Lohan, and K. Foth. Levels of embodiment: Linguistic analyses of factors influencing HRI. In HRI’12 - Proceedings of the 7th Annual ACM/IEEE International Conference on Human-Robot Interaction, pages 463–470, 2012.

[8] B. Friedman, P. H. Kahn, Jr., and J. Hagman. Hardware companions?: What online AIBO discussion forums reveal about the human-robotic relationship. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, CHI ’03, pages 273–280, New York, NY, USA, 2003. ACM.

[9] H. M. Gray, K. Gray, and D. M. Wegner. Dimensions of mind perception. Science, 315(5812):619, 2007.

[10] K. Gray and D. Wegner. Moral typecasting: Divergent perceptions of moral agents and moral patients. Journal of Personality and Social Psychology, 96(3):505–520, 2009.

[11] K. Gray and D. Wegner. Feeling robots and human zombies: Mind perception and the uncanny valley. Cognition, 125(1):125–130, 2012.

[12] S. Guthrie. Faces in the clouds: A new theory of religion. Oxford University Press, USA, 1995.

[13] N. Haslam. Dehumanization: An integrative review. Personality and Social Psychology Review, 10(3):252–264, 2006.

[14] N. Haslam, B. Bastian, S. Laham, and S. Loughnan. Humanness, dehumanization, and moral psychology. 2012.

[15] N. Haslam, S. Loughnan, Y. Kashima, and P. Bain. Attributing and denying humanness to others. European Review of Social Psychology, 19(1):55–85, 2009.

[16] C. Ho and K. MacDorman. Revisiting the uncanny valley theory: Developing and validating an alternative to the Godspeed indices. Computers in Human Behavior, 26(6):1508–1518, 2010.

[17] D. Kuchenbrandt, F. Eyssel, S. Bobinger, and M. Neufeld. When a robot’s group membership matters. International Journal of Social Robotics, 5(3):409–417, 2013.

[18] J. H. Lesher et al. Xenophanes of Colophon: Fragments: A Text and Translation With a Commentary, volume 4. University of Toronto Press, 2001.

[19] J. Leyens, P. Paladino, R. Rodriguez-Torres, J. Vaes, S. Demoulin, A. Rodriguez-Perez, and R. Gaunt. The emotional side of prejudice: The attribution of secondary emotions to ingroups and outgroups. Personality and Social Psychology Review, 4(2):186–197, 2000.

[20] M. Mori. The uncanny valley. Energy, 7(4):33–35, 1970.

[21] J. W. Peirce. PsychoPy - Psychophysics software in Python. Journal of Neuroscience Methods, 162(1):8–13, 2007.

[22] M. Salem, F. Eyssel, K. Rohlfing, S. Kopp, and F. Joublin. Effects of gesture on the perception of psychological anthropomorphism: A case study with a humanoid robot, volume 7072 LNAI of Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2011.

[23] V. K. Sims, M. G. Chin, H. C. Lum, L. Upham-Ellis, T. Ballion, and N. C. Lagattuta. Robots’ auditory cues are subject to anthropomorphism. In Proceedings of the Human Factors and Ergonomics Society, volume 3, pages 1418–1421, 2009.

[24] M. L. Walters, D. S. Syrdal, K. L. Koay, K. Dautenhahn, and R. Te Boekhorst. Human approach distances to a mechanical-looking robot with different robot voice styles. In Proceedings of the 17th IEEE International Symposium on Robot and Human Interactive Communication, RO-MAN, pages 707–712, 2008.

[25] E. Wang, C. Lignos, A. Vatsal, and B. Scassellati. Effects of head movement on perceptions of humanoid robot behavior. In HRI 2006: Proceedings of the 2006 ACM Conference on Human-Robot Interaction, volume 2006, pages 180–185, 2006.

[26] A. Waytz, J. Cacioppo, and N. Epley. Who sees human? The stability and importance of individual differences in anthropomorphism. Perspectives on Psychological Science, 5(3):219–232, 2010.

[27] A. Waytz, N. Epley, and J. T. Cacioppo. Social cognition unbound: Insights into anthropomorphism and dehumanization. Current Directions in Psychological Science, 19(1):58–62, 2010.
