DOI: 10.1145/2783446.2783582 | CITEULIKE: 13706515

Omar Mubin and Christoph Bartneck. 2015. Do as I say: exploring human response to a predictable and unpredictable robot. In Proceedings of the 2015 British HCI Conference (British HCI '15). ACM, New York, NY, USA, 110-116.

Do as I say: Exploring human response to a predictable and unpredictable robot

HIT Lab NZ, University of Canterbury

PO Box 4800, 8410 Christchurch

New Zealand

christoph@bartneck.de

University of Western Sydney and MARCS Institute Sydney

Australia

o.mubin@uws.edu.au

Abstract - Humans are known to feel both engaged and apprehensive when presented with unpredictable behaviour by other agents or humans. Predictable behaviour is thought to be reliable but boring. We argue that it is imperative to evaluate human responses to (un)predictable robots, across different robot embodiments, for a better understanding of Human Robot Interaction scenarios. The results of our controlled experiment with 23 participants showed that predictable robot behaviour resulted in more patience on the part of the user, and that robot embodiment had no significant effect. In conclusion, we also discuss the importance of robot role for the perception of predictability in robot behaviour.

Keywords: Human-Robot Interaction; Reciprocity; Game Theory; Prisoner's Dilemma; Ultimatum Game; Cooperation

Introduction

Humans are by nature at times unpredictable, and the unexpected tendencies of any individual create social nuances that other humans must learn to detect and adapt to [17]. The open question, however, is how humans react to artificial stimuli that are unpredictable. Psychological research [14] has shown that unpredictable aversive stimuli lead to sustained anxiety compared with predictable aversive stimuli. In contrast, research on agent behaviour [3] shows that unpredictable behaviour by a computing system leads to greater engagement on the part of the user. Similarly, within Human Computer Interaction (HCI), we observe different viewpoints regarding preferences for predictable versus unpredictable machine, product and technology behaviour. Creating social machines and investigating mechanisms for attributing a personality to a computer or machine has been studied in HCI for many years [20]. More recently, unpredictable behaviour in tangible artifacts was employed to portray emotion and actuation by the machine towards the user [6]. In game design [1], it is argued that an unpredictable computer or agent creates extra challenge for the user and leads to higher levels of interest and engagement. Non-determinism in interface behaviour has been shown to lead to spontaneous and playful responses from users [16]. On the other hand, it is commonly acknowledged that unpredictable interface design in websites leads to user frustration [7]. In addition, it has been shown that system delays that are constant in duration reduce user response time [24].

| | Predictable: Positive | Predictable: Negative | Unpredictable: Positive | Unpredictable: Negative |
|---|---|---|---|---|
| Human | Reliable | Boring | Creative | Unreliable |
| Agent | Non-distracting | Boring | Interesting | Frightening |

Table 1: Pros and Cons of Human and Agent Predictability

Manipulation of robot behaviour plays a key role in conveying a certain level of anthropomorphism [25]. Researchers in HRI strive for humanlike behaviour in robots and go to great lengths to achieve it, focusing not only on the physical embodiment of a robot but also on designing lifelike and animate gestures, behaviours and personalities. Prior work in HRI [12] indicates that unpredictability of a robot, as perceived by a user, can lead to higher levels of perceived anthropomorphism. Can we therefore state that unpredictable behaviour can be used as a tool to increase perceived anthropomorphism and attributions of robot personality, and ultimately to elicit richer HRI?

We have already hinted at the importance of robot behaviour for perceived anthropomorphism. It can be expected that embodiment would also interact with predictability. Given that humans are unpredictable by nature, would a more human-like robot therefore be expected, and preferred, to show unpredictable behaviour? Would the trend be reversed for a robot that does not resemble a human (i.e. would a robot perceived more as a machine be expected to behave consistently)? In sum, we believe that in order to decide the appropriate level of predictability in a robot's or agent's behaviour, attention needs to be paid to at least the following factors: interaction context, desired level of perceived anthropomorphism, and robot/agent embodiment.

Prior research from HRI and Social Cognition [10,11,12] has investigated how humans perceive robot predictability, and all of these studies report that humans prefer predictable behaviour in robots, as it gives them a sense of control. However, these studies leave the following gaps. Firstly, results were gathered only via subjective questionnaires [10]; secondly, there was no actual interaction with a robot [11] (a pet dog was used and the results were extrapolated to an HRI context); and lastly, embodiment was not manipulated and no real (i.e. non-simulated) HRI took place [12] (a picture of a robot with accompanying text was used). Therefore, in this research we use lab-based HRI to explore, firstly, whether human perception of robots is influenced by the predictability of the robots' behaviour (as evidenced through actual interaction with the robot) and, secondly, whether this perception is associated with how humanlike the robot looks (i.e. the embodiment of the robot). Because (un)predictability can have both positive and negative connotations, we introduce a further variable, functionality: a robot can be functional while being unpredictable, and dysfunctional while being predictable. Functionality is simply defined as the ability to achieve a successful/correct outcome. Based on prior literature, we hypothesized that high predictability [10,11,12] and high functionality [5] would in general lead to more patience/tolerance being shown by humans towards the robot. In addition, we hypothesized that unpredictability would be tolerated more in a humanoid robot than in a non-humanoid robot [9].

Method

We conducted an empirical study in which all robot behaviours were controlled in a Wizard of Oz setup. The experiment used a 2 (embodiment: humanoid vs. non-humanoid; within subjects, counterbalanced) × 2 (predictability: high vs. low; between subjects) × 2 (functionality: high vs. low; between subjects) mixed design. Ethics clearance was obtained prior to running the study (UWS Approval Number: H10875).
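The mixed design above implies a simple allocation problem: an equal number of participants per between-subjects cell, with the within-subjects robot order counterbalanced. The paper does not say how participants were assigned, so the Python sketch below is only one plausible scheme; the fixed-seed shuffle, the alternation of robot order within each cell, and all names (`allocate`, `EMBODIMENT_ORDERS`) are assumptions for illustration.

```python
import itertools
import random

# Hypothetical labels for the between-subjects cells and robot orders.
PREDICTABILITY = ("high", "low")
FUNCTIONALITY = ("high", "low")
EMBODIMENT_ORDERS = (("humanoid", "non-humanoid"), ("non-humanoid", "humanoid"))


def allocate(n_participants=24, seed=1):
    """Spread participants evenly over the 2 x 2 between-subjects cells and
    alternate the within-subjects robot order inside each cell."""
    cells = list(itertools.product(PREDICTABILITY, FUNCTIONALITY))  # 4 cells
    if n_participants % len(cells):
        raise ValueError("participants must divide evenly over the cells")
    per_cell = n_participants // len(cells)
    # Balanced list of cell labels, shuffled reproducibly with a fixed seed.
    labels = [cell for cell in cells for _ in range(per_cell)]
    random.Random(seed).shuffle(labels)
    filled = {cell: 0 for cell in cells}
    plan = []
    for pid, cell in enumerate(labels, start=1):
        order = EMBODIMENT_ORDERS[filled[cell] % 2]  # alternate order per cell
        filled[cell] += 1
        plan.append({"id": pid, "predictability": cell[0],
                     "functionality": cell[1], "order": order})
    return plan


for row in allocate()[:4]:
    print(row)
```

With 24 participants each cell receives six people, three in each robot order. Alternating within a cell, rather than across the whole sample, keeps the order balanced regardless of how the shuffle falls.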

Procedure

Each participant was invited to a university room to play two rounds of a shooting game, one round with the humanoid robot and one with the non-humanoid robot (order counterbalanced across participants). The participant first read the information sheet and signed the consent form, after which the facilitator explained the rules of the game. As a cover story, the participant was told that the purpose of the experiment was to evaluate the speech understanding capabilities of the robot. The facilitator then left the room. At the end of the game round, the facilitator re-entered the room and the participant was asked to fill in a questionnaire, followed by a robot speech calibration task (described in the Measurements section). The same sequence (game, questionnaire, calibration task) was repeated for the second round. Before leaving, participants were debriefed on the actual purpose of the experiment.

Game Design

The game was adapted from [18]. Participants were required to navigate the robot from a starting point through four intermediate checkpoints by giving it verbal commands through a dummy microphone. The available commands were: Go forward, Go backwards, Turn Left (90 degrees), Turn Right (90 degrees), Stop, Sense Color and Shoot. The aim of the game was to shoot 4 balls into the target as quickly as possible. However, each shot was unlocked only when a specific checkpoint had been passed, i.e. when the robot's color sensor had successfully scanned a predefined colored rectangle (see Figure 1). The process was repeated for the other three rectangles. Each game round followed a predefined traversal order of the rectangles; the two sequences differed but were spatially symmetric and hence of equal difficulty.
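The checkpoint rule can be made concrete as a small state machine. This Python sketch is hypothetical: robot movement is not simulated, the color under the sensor is passed in directly, and we assume that only the next rectangle in the round's predefined order unlocks a shot. The feedback labels reuse the audio outputs listed under Materials and Setup, but which output followed which event is our assumption.

```python
from enum import Enum


class Command(Enum):
    GO_FORWARD = "go forward"
    GO_BACKWARDS = "go backwards"
    TURN_LEFT = "turn left"      # 90 degrees
    TURN_RIGHT = "turn right"    # 90 degrees
    STOP = "stop"
    SENSE_COLOR = "sense color"
    SHOOT = "shoot"


class ShootingGame:
    """Checkpoint and shot-unlocking logic for one round (movement not simulated)."""

    def __init__(self, checkpoint_order):
        self.checkpoint_order = list(checkpoint_order)  # predefined rectangle order
        self.next_checkpoint = 0   # index of the next rectangle to scan
        self.shots_unlocked = 0    # shots earned but not yet fired
        self.balls_shot = 0

    @property
    def finished(self):
        return self.balls_shot == len(self.checkpoint_order)

    def handle(self, command, sensed_color=None):
        """Apply one command and return a feedback label."""
        if command is Command.SENSE_COLOR:
            pending = self.next_checkpoint < len(self.checkpoint_order)
            if pending and sensed_color == self.checkpoint_order[self.next_checkpoint]:
                self.next_checkpoint += 1
                self.shots_unlocked += 1   # passing a checkpoint unlocks one shot
                return "color detected-activated shooting"
            return "sensing color"
        if command is Command.SHOOT:
            if self.shots_unlocked == 0:
                return "error"             # no unused shot has been unlocked
            self.shots_unlocked -= 1
            self.balls_shot += 1
        return "command received"
```

A round with rectangles `["red", "green", "blue", "yellow"]` ends once four shots have each been preceded by a successful scan of the next rectangle in that order.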

Figure 1: Game Setup

Materials and Setup

The robots used for the experiment were built using LEGO Mindstorms (see Figure 2). We designed both robots to include the wheel base and shooting assembly of the Shooterbot (a commonly advertised Mindstorms robot). The upper torso of the humanoid robot, comprising arms and a face, was identical to that of the Alpha Rex robot (another popular Mindstorms design). One of the primary reasons for using LEGO Mindstorms was that we could create two robots that differed only in embodiment but were identical in speed, size and capabilities (e.g. we used an identical wheel assembly and shooting setup for both robots). This benefit of Mindstorms for manipulating robot embodiment alone has also been advocated by other researchers [15], who used variants of the Shooterbot and Alpha Rex robots to manipulate embodiment. The robots were controlled by the wizard through the leJOS API. A computer placed in the experiment room ran the application that interfaced with the robots via Bluetooth. The wizard accessed this computer remotely from another room and monitored proceedings via a video and audio feed. The wizard simply pressed the button corresponding to the command given by the participant to generate a response from the robot. A wireless speaker connected to this computer played the robot's audio feedback, which had five possible outputs: command received, error, sensing color, color detected-activated shooting, and sorry correcting error. Participants were told that the wireless speaker also functioned as the microphone, sending their speech signals to the robot.

Figure 2: The two robots employed in the experiment: the Alpha Rex humanoid robot (L) and the Shooterbot (R)

Conditions

Four conditions were used, numbered 1 to 4 in the order listed below. Each participant was assigned to one condition only, which applied to both game rounds.

High Predictable, High Functional

The robot followed all commands accurately and simply did what it was told, essentially behaving as the good robot described in [5].

High Predictable, Low Functional

The robot was unable to follow exactly one command: Turn Right for the humanoid robot and Turn Left for the non-humanoid robot. Instead, it consistently carried out the opposite of the intended behaviour: the humanoid robot turned left whenever Turn Right was said, while Turn Left worked correctly; the non-humanoid robot always turned right when Turn Left was said.
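Condition 2 amounts to a fixed substitution for one command per robot, which is what makes the fault predictable: the same command always fails in the same way. A minimal Python sketch, with hypothetical command strings and names (`INVERTED_COMMAND`, `executed_action`):

```python
# Hypothetical encoding of condition 2 (high predictability, low functionality):
# exactly one command per robot is always executed as its opposite.
INVERTED_COMMAND = {
    "humanoid": ("turn right", "turn left"),      # saying Turn Right -> robot turns left
    "non-humanoid": ("turn left", "turn right"),  # saying Turn Left -> robot turns right
}


def executed_action(command, embodiment):
    """Return the action the robot performs for a spoken command in condition 2."""
    faulty, substitute = INVERTED_COMMAND[embodiment]
    return substitute if command == faulty else command
```

Because the mapping never changes within a round, a participant can learn it and route around the faulty command.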

Low Predictable, High Functional

For a predefined set of randomly selected commands, the robot performed a wrong action but immediately corrected itself (saying "sorry correcting error"). This predefined set differed between the two robots but was the same across participants.

Low Predictable, Low Functional

For a predefined set of randomly selected commands, the robot performed a wrong action without correcting it, i.e. it behaved as the bad robot in [5]. As in condition 3, this predefined set differed between the two robots but was the same across participants.

In both conditions 3 and 4, the wrong action was a linear transition through the possible navigation behaviours, and 30% of commands (3 in every block of 10) resulted in erroneous behaviour from the robot. Which command numbers were erroneous was randomly generated; the positions were the same across participants but differed between the two game rounds. Therefore, prior to data collection we specified two randomly generated number sequences (one for each embodiment), which were applied to all participants.
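The error schedule for conditions 3 and 4 can be sketched as follows. The phrase "linear transition through the possible navigation behaviours" admits several readings; this Python sketch assumes that successive wrong actions step through a fixed list of navigation behaviours, skipping the one actually requested. The list order, the seeds, the 100-command horizon and all names are hypothetical.

```python
import random

# Hypothetical navigation list; the order used in the study is not reported.
NAVIGATION = ["go forward", "go backwards", "turn left", "turn right"]


def error_schedule(n_commands, seed, block=10, errors_per_block=3):
    """Pick `errors_per_block` erroneous command positions in every block of
    `block` consecutive commands (30% with the defaults)."""
    if n_commands % block:
        raise ValueError("n_commands must be a multiple of the block size")
    rng = random.Random(seed)  # fixed seed -> same positions for every participant
    erroneous = set()
    for start in range(0, n_commands, block):
        erroneous.update(rng.sample(range(start, start + block), errors_per_block))
    return erroneous


class WrongActionCycler:
    """One reading of 'linear transition': successive wrong actions step through
    NAVIGATION in order, skipping the behaviour that was actually requested."""

    def __init__(self):
        self._step = 0

    def _advance(self):
        action = NAVIGATION[self._step % len(NAVIGATION)]
        self._step += 1
        return action

    def wrong_action(self, intended):
        action = self._advance()
        return self._advance() if action == intended else action


def respond(index, command, schedule, cycler):
    """Action the robot performs for the index-th command of a round."""
    return cycler.wrong_action(command) if index in schedule else command


# One schedule per embodiment, generated once before data collection (seeds assumed).
SCHEDULES = {"humanoid": error_schedule(100, seed=11),
             "non-humanoid": error_schedule(100, seed=22)}
```

In condition 3 the wizard would then play "sorry correcting error" and carry out the intended action; in condition 4 the wrong action simply stands.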

Measurements

The following behavioural measurements were logged automatically by the game software. They are described below, together with the questionnaire scales.

Game Duration (GD)

The total time taken to finish the game session, measured from when the facilitator left the room to when the last ball was shot.

Total Commands Issued in the game (CI)

The total number of commands uttered by a participant in one game session, measured as the number of button presses made by the wizard in response to commands.

Average Duration between Commands (ADC)

The average inter-command duration for a participant in a single game session, computed from the logged times between consecutive wizard button presses made in response to commands.

Average Duration between the Wrong behaviour of the robot and the next command (ADWC)

For conditions 2 and 4 only, we logged the time participants took to correct the robot's action, i.e. the time between the robot's wrong behaviour and the participant's next command.
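All four measures can be derived from timestamped wizard button presses. The Python sketch below assumes a hypothetical per-round log (`SessionLog`) holding the start and end times, the times of command button presses and the times of the robot's wrong behaviours; the paper does not describe its log format.

```python
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class SessionLog:
    """Hypothetical per-round log; all times in seconds from an arbitrary origin."""
    start: float                                          # facilitator leaves the room
    end: float                                            # last ball is shot
    commands: list = field(default_factory=list)          # wizard button presses
    wrong_behaviours: list = field(default_factory=list)  # erroneous robot actions


def behavioural_measures(log):
    cmds = sorted(log.commands)
    gd = log.end - log.start                          # Game Duration
    ci = len(cmds)                                    # Commands Issued
    gaps = [b - a for a, b in zip(cmds, cmds[1:])]
    adc = mean(gaps) if gaps else None                # Average Duration between Commands
    corrections = []
    for t in log.wrong_behaviours:
        later = [c for c in cmds if c > t]
        if later:
            corrections.append(later[0] - t)          # wrong behaviour -> next command
    adwc = mean(corrections) if corrections else None  # ADWC (conditions 2 and 4)
    return {"GD": gd, "CI": ci, "ADC": adc, "ADWC": adwc}
```

The mean of consecutive gaps telescopes to (last command - first command) / (CI - 1), so ADC depends only on the first and last command times and the command count.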

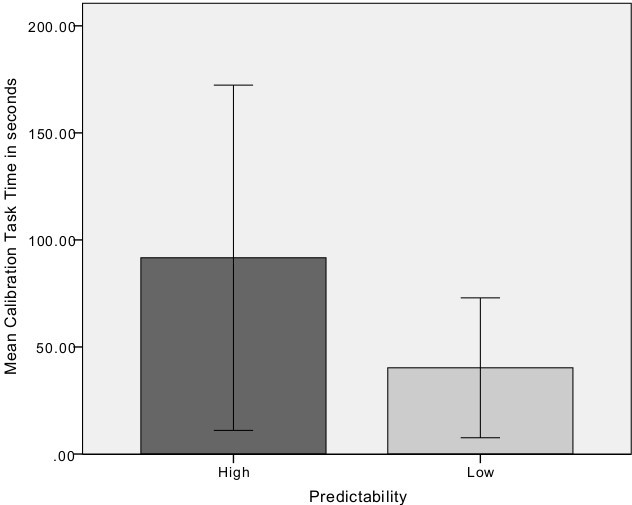

Calibration Task Time (CTT)

After filling in the questionnaire, participants took part in a never-ending calibration task in the absence of the facilitator. Participants were told that their goal was to calibrate the robot's speech system. The robot played a pair of tones differing in frequency, and participants stated whether the tones were the same or different. If they were judged different, the next pair was played. In reality, the tones were never the same, so the task ended only when a participant judged a pair to be the same. We therefore expected this task to measure the patience/tolerance that participants showed towards the robot (as with ADC and ADWC, a shorter time indicates lower tolerance). Repetitive and tedious tasks have been employed in prior HRI research to measure human tolerance of and compliance towards a robot [8].
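The deception in the calibration task rests on a loop whose stopping condition is never met by the stimuli themselves, only by the participant's judgement. A Python sketch under stated assumptions: the paper does not report the tone frequencies, so `base_hz`, `start_diff_hz` and the halving rule are hypothetical, and `says_same` stands in for the participant's answer.

```python
import itertools
import time


def calibration_task(says_same, base_hz=440.0, start_diff_hz=40.0,
                     clock=time.monotonic):
    """Play tone pairs that always differ until the participant answers 'same'.

    says_same(pair) -> bool stands in for the participant's verbal answer.
    Returns (calibration task time in seconds, number of pairs played).
    """
    t0 = clock()
    diff = start_diff_hz
    for trial in itertools.count(1):          # deliberately unbounded
        pair = (base_hz, base_hz + diff)      # diff > 0, so the tones never match
        if says_same(pair):
            return clock() - t0, trial
        diff = max(diff / 2, 0.5)             # hypothetical: shrink, but keep > 0
```

The returned time corresponds to CTT; injecting `clock` makes the function testable without waiting in real time.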

Questionnaire

Five-point Likert scales were administered to measure two factors: Perceived Anthropomorphism (P-ANT) and Perceived Intelligence (P-I) of the robot. The scales were taken from the Godspeed questionnaire [2], one of the most commonly used instruments for measuring human perception of robots. As a manipulation check, a five-point predictability item was also included.

Participants

A total of 24 participants (10 female) were recruited and divided equally over the 4 conditions (6 each). All participants were university students or staff members. The data from one participant (condition 4) were excluded from analysis because the robot's batteries ran out midway through the experiment.

Results

A repeated measures ANOVA was conducted with embodiment as the within-subjects factor and predictability and functionality as the between-subjects factors. We first report the questionnaire results and then the behavioural measures.

Questionnaire Results

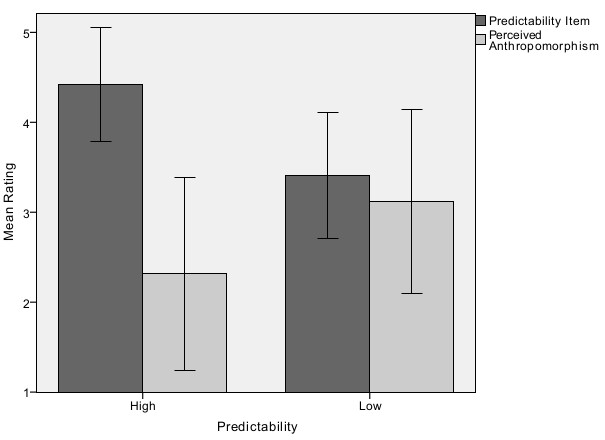

To check the reliability of the scales, we first computed Cronbach's alpha for Perceived Anthropomorphism and Perceived Intelligence and found sufficient reliability (α > 0.8 for both factors). The ANOVA revealed that embodiment had no significant effect on any of the questionnaire factors. Predictability, as a between-subjects factor, had a significant effect on the predictability item, F(1, 19) = 12.289, p = 0.002. This indicates that participants overall judged the robot's predictability correctly, confirming the manipulation of predictability. Predictability also had a marginally significant effect on Perceived Anthropomorphism, F(1, 19) = 3.58, p = 0.07, with an observed power of 44%. We can therefore only speculate that lower predictability was associated with higher perceived anthropomorphism. Functionality had no significant effect on any of the questionnaire factors. The relevant subjective measures across predictability, averaged across embodiment and functionality, are summarised in a bar chart (see Figure 3).
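Cronbach's alpha for a scale of k items is α = k/(k - 1) · (1 - Σ s_i² / s_T²), where s_i² is the variance of item i and s_T² the variance of participants' summed scores. A minimal Python sketch of that formula (not the analysis software used in the study, which the paper does not name); reverse-scored items would need recoding first, and `cronbach_alpha` is an illustrative name.

```python
from statistics import variance


def cronbach_alpha(responses):
    """responses: one row per participant, one column per Likert item."""
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two participants")
    item_vars = [variance(col) for col in zip(*responses)]   # s_i^2 per item
    total_var = variance([sum(row) for row in responses])    # s_T^2 of sum scores
    return k / (k - 1) * (1 - sum(item_vars) / total_var)
```

Items that move in perfect lockstep give α = 1; values above 0.8, as reported for both Godspeed factors, are conventionally read as good internal consistency.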

Figure 3: Bar Chart for Mean and Std Dev for Predictability Item and Perceived Anthropomorphism

Behavioural Measure Results

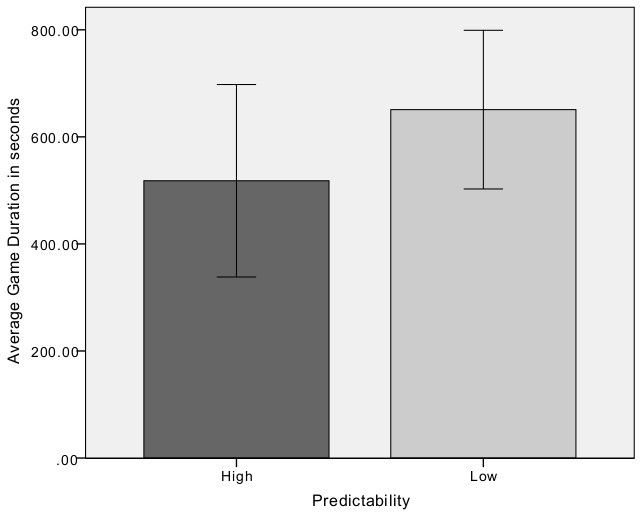

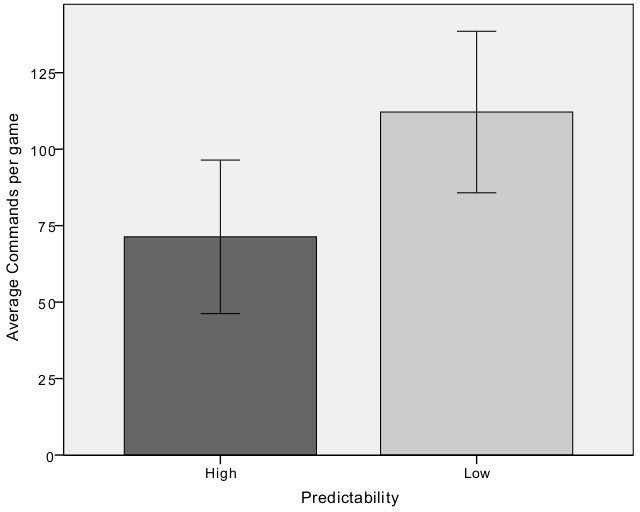

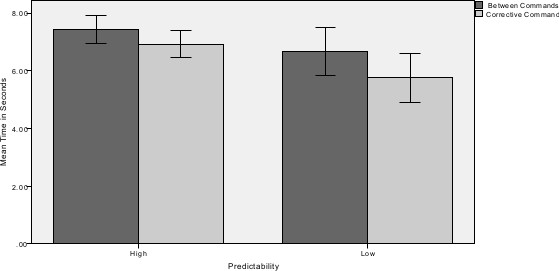

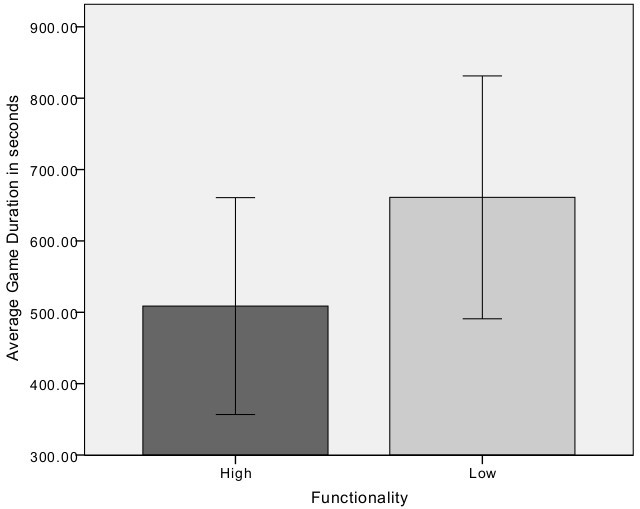

A repeated measures ANOVA revealed that embodiment had no significant effect on any of the five behavioural measures. Predictability had a significant effect on all five measures: Game Duration (F(1, 22) = 4.96, p = 0.04), Commands Issued (F(1, 22) = 14.84, p = 0.001), Average Duration Between Commands (F(1, 22) = 30.05, p < 0.001), Average Duration between Wrong Behaviour and the next command (F(1, 10) = 8.52, p = 0.02) and Calibration Task Time (F(1, 22) = 4.04, p = 0.05). Higher predictability led to shorter game duration, fewer commands issued, longer durations between commands and longer calibration task times. Bar charts summarising the means and standard deviations of the five behavioural measures across predictability are presented in Figures 4 to 6.

Figure 4: Bar charts summarising mean and std dev across predictability for game duration and total commands per game

Figure 5: Bar Chart for Mean/Std Dev for Duration between Commands and Time to corrective Command across predictability

Figure 6: Bar chart summarising mean and std dev across predictability for calibration task time (L) and Bar chart summarising mean and std dev across functionality for game duration (R)

Functionality had a significant effect on Game Duration (F(1, 22) = 6.36, p = 0.02) and Average Duration Between Commands (F(1, 22) = 4.23, p = 0.05), but no significant effect on Commands Issued or Calibration Task Time. The only significant interaction effect was between predictability and functionality on Average Duration Between Commands (F(1, 22) = 6.55, p = 0.02). A bar chart summarising the mean and standard deviation of Game Duration across functionality is presented in Figure 6.

At first sight it may appear that Game Duration could be affecting the other measures, such that a shorter game results in higher overall patience towards the robot. However, Pearson correlations revealed that, for both embodiments, Game Duration correlated significantly only with Commands Issued (non-humanoid: r = 0.91, p < 0.001; humanoid: r = 0.84, p < 0.001) and not with the other measures (the strongest of these correlations was only r = -0.34). This lends some credibility to the main finding of an overall preference for predictability.
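The confound check above correlates, per embodiment, each participant's Game Duration with each other measure. A minimal Python sketch of Pearson's r and the t statistic (df = n - 2) used to test it against zero; obtaining a p-value additionally requires the t distribution, for example from a statistics library. Function names are illustrative.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def t_statistic(r, n):
    """t value with n - 2 degrees of freedom for testing r against zero."""
    return r * sqrt(n - 2) / sqrt(1 - r ** 2)
```

With one value per participant and embodiment, `x` would hold the Game Durations and `y` the matching Commands Issued (or any other measure) for the same participants, in the same order.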

Discussion

Our results reveal that participants showed greater patience when the robot behaved in a consistent, predictable manner. This result is in line with prior research [10,11,12], which also argued that humans prefer predictable behaviour in robots. Furthermore, the calibration task times showed that participants were more willing to help a predictable robot. Participants seemed less tolerant when the robot behaved unpredictably. This can possibly be explained by the nature of the game we employed: it was cooperative, and any variation in robot behaviour had a direct effect on the participants' performance. Participants simply wanted the robot to do as it was told. In our experiment the user-robot relationship was essentially a master-slave relationship, and hence participants expected the robot to follow their commands. Prior research [23] shows that human participants in most instances evaluate robot disagreement negatively. A robot may disagree accidentally (an error) or intentionally (based on the context of interaction); in the latter case, the ensuing HRI must be carefully designed so that the user is informed in the most appropriate manner. In our experiment, when the robot had low functionality, participants perceived it as committing an error and wished to correct its behaviour.

In addition, in our game the robot was primarily a service robot, and consistent behaviour (even if incorrect) was preferred; some participants in Condition 2 ultimately avoided the erroneous command altogether. This is akin to how we operate service appliances: if a feature malfunctions, we tend to abandon that feature but carry on using the device. Prior work [22] that reported higher engagement when the robot was unpredictable used a task that was competitive in nature. Research on Sony's social companion robot Aibo has likewise revealed that users feel engaged by its unpredictable behaviour [13]. This indicates that not only the type and context of the interaction but also the resulting role of the robot (service, collaborative or cooperative) plays a significant part in human perception of robot behaviour, a factor that has also been suggested in [4]. Our interpretation that robot role directly affects how the robot is perceived is also supported by [19], where humans were much more sociable and helpful towards a collaborative robot than towards a robot in the role of a competitor, and in the latter condition participants showed higher engagement with the task.

We did not observe a main effect of embodiment. Perhaps embodiment was controlled too rigidly in our setup: some participants did not perceive the robots as different simply because both were LEGO Mindstorms robots, even though they were two separate entities. Had we employed a robot from another manufacturer for the humanoid condition, however, we would not have been able to use the same interaction task. In future work we aim to incorporate different robot embodiments to further investigate the effect of embodiment on the perceived value of predictability in robot behaviour. Another interesting result was the marginally significant effect of predictability on perceived anthropomorphism: perceived anthropomorphism tended to be higher when the robot was unpredictable, as was also shown in [12]. There is thus potential in utilising unpredictable robot behaviour, but, as discussed above for predictable behaviour, this must be considered in relation to robot role and interaction context. Our results may help HRI and HCI designers consider the appropriate degree of predictability in robot/agent behaviour with regard not only to the context of interaction but also to the robot's role (service or active).

Conclusion

We would like to point out some limitations of our research so far. We aim to recruit more participants to confirm our results. In addition, we did not control for participant demographics, such as cultural background or familiarity with robots. We also aim to extend our research by employing robot role as an independent variable, which will allow us to ascertain the importance of robot role for the value of predictability in robot behaviour as perceived by humans. Our results indicate that higher predictability in robots was tolerated more and that participants were more willing to help a robot that was obedient. In our setup, the physical appearance of the robot did not affect user preferences regarding predictability. We have also discussed the importance of robot role and interaction context in influencing this perception.

References

- [1] J. Alvarez. Machine learning in video games: The importance of AI logic in gaming. 2013.

- [2] C. Bartneck, D. Kulić, E. Croft, and S. Zoghbi. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. International Journal of Social Robotics, 1(1):71-81, 2009.

- [3] T. Bickmore, D. Schulman, and L. Yin. Maintaining engagement in long-term interventions with relational agents. Applied Artificial Intelligence, 24(6):648-666, 2010.

- [4] M. Brandon. Effect personality matching on robot acceptance: effect of robot-user personality matching on the acceptance of domestic assistant robots for elderly. 2012.

- [5] E. Broadbent, B. MacDonald, L. Jago, M. Juergens, and O. Mazharullah. Human reactions to good and bad robots. In Conference on Intelligent Robots and Systems, pages 3703-3708. IEEE, 2007.

- [6] E. Burneleit, F. Hemmert, and R. Wettach. Living interfaces: the impatient toaster. In Proceedings of the 3rd International Conference on Tangible and Embedded Interaction, pages 21-22. ACM, 2009.

- [7] J. Lazar, K. Bessiere, I. Ceaparu, J. Robinson, and B. Shneiderman. Help! I'm lost: User frustration in web navigation. IT and Society, 1(3):18-26, 2003.

- [8] D. Cormier, J. Young, M. Nakane, G. Newman, and S. Durocher. Would you do as a robot commands? An obedience study for human-robot interaction. In International Conference on Human-Agent Interaction, 2013.

- [9] K. Dautenhahn. Robots as social actors: Aurora. In Cognitive Technology Conference, volume 359, pages 374-389, 1999.

- [10] K. Dautenhahn, S. Woods, C. Kaouri, M. L. Walters, K. L. Koay, and I. Werry. What is a robot companion - friend, assistant or butler? In Conference on Intelligent Robots and Systems, pages 1192-1197. IEEE, 2005.

- [11] N. Epley, A. Waytz, S. Akalis, and J. T. Cacioppo. When we need a human: Motivational determinants of anthropomorphism. Social Cognition, 26(2):143-155, 2008.

- [12] F. Eyssel, D. Kuchenbrandt, and S. Bobinger. Effects of anticipated human-robot interaction and predictability of robot behavior on perceptions of anthropomorphism. In Conference on Human-Robot Interaction, pages 61-68. ACM, 2011.

- [13] J. Fink, O. Mubin, F. Kaplan, and P. Dillenbourg. Anthropomorphic language in online forums about Roomba, AIBO and the iPad. In IEEE Workshop on Advanced Robotics and its Social Impacts (ARSO), pages 54-59. IEEE, 2012.

- [14] C. Grillon, J. P. Baas, S. Lissek, K. Smith, and J. Milstein. Anxious responses to predictable and unpredictable aversive events. Behavioral Neuroscience, 118(5):916, 2004.

- [15] V. Groom, L. Takayama, P. Ochi, and C. Nass. I am my robot: the impact of robot-building and robot form on operators. In Conference on Human-Robot Interaction (HRI), pages 31-36. IEEE, 2009.

- [16] D. Kirk, S. Izadi, O. Hilliges, R. Banks, S. Taylor, and A. Sellen. At home with surface computing? In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 159-168. ACM, 2012.

- [17] P. Lieberman. The unpredictable species: what makes humans unique. Princeton University Press, 2013.

- [18] O. Mubin, C. Bartneck, L. Feijs, H. Hooft van Huysduynen, J. Hu, and J. Muelver. Improving speech recognition with the robot interaction language. Disruptive Science and Technology, 1(2):79-88, 2012.

- [19] B. Mutlu, S. Osman, J. Forlizzi, J. Hodgins, and S. Kiesler. Perceptions of ASIMO: an exploration on co-operation and competition with humans and humanoid robots. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, pages 351-352. ACM, 2006.

- [20] C. Nass, J. Steuer, and E. R. Tauber. Computers are social actors. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 72-78. ACM, 1994.

- [21] B. Shneiderman. Designing the user interface: strategies for effective human-computer interaction. Pearson Education India, 1986.

- [22] E. Short, J. Hart, M. Vu, and B. Scassellati. No fair!! An interaction with a cheating robot. In Conference on Human-Robot Interaction (HRI), pages 219-226. IEEE, 2010.

- [23] L. Takayama, V. Groom, and C. Nass. I'm sorry, Dave: I'm afraid I won't do that: social aspects of human-agent conflict. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pages 2099-2108. ACM, 2009.

- [24] R. Thomaschke and C. Haering. Predictivity of system delays shortens human response time. International Journal of Human-Computer Studies, 72(3):358-365, 2014.

- [25] J. Zlotowski, D. Proudfoot, K. Yogeeswaran, and C. Bartneck. Anthropomorphism: Opportunities and challenges in human robot interaction. International Journal of Social Robotics, pages 1-14, 2014.

This is a pre-print version | last updated October 2, 2015